Published on 2025-06-26T05:14:14Z

What is Bot Detection in Analytics? Examples and Best Practices

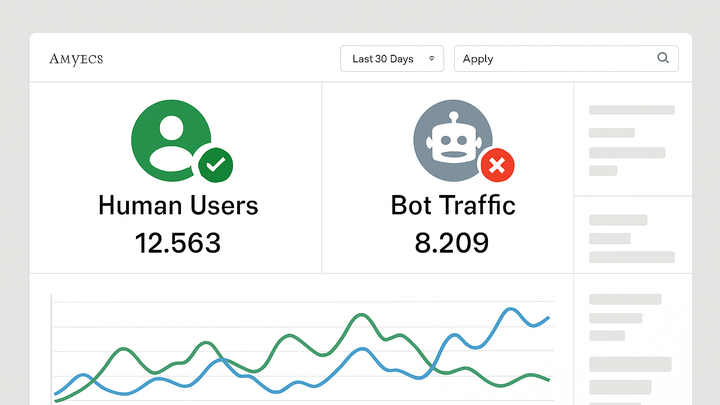

Bot detection in analytics is the process of identifying automated, non-human traffic in web data streams and filtering it out. Automated bots, ranging from harmless search-engine crawlers to malicious scripts, can generate fake pageviews, inflate session counts, and distort user engagement metrics. Bot detection keeps reports focused on genuine user behavior, which leads to more accurate insights and better-informed business decisions. Common detection methods include heuristic rules, behavior analysis, IP blacklisting, user-agent inspection, and machine-learning-based classifiers. Google Analytics 4 (GA4) automatically excludes traffic from known bots and spiders, which it identifies through Google research and the IAB International Spiders and Bots List. PlainSignal offers a cookie-free, script-driven approach that uses device fingerprinting and network heuristics to detect bots early in the data collection pipeline. Effective bot detection preserves the integrity of analytics data, improves marketing ROI calculations, and enhances website performance monitoring.

Bot detection

Process of identifying and filtering automated traffic to ensure accurate analytics data.

Why Bot Detection Matters

Automated traffic from bots can distort key performance metrics, leading to misguided business decisions. Bot detection helps filter out non-human interactions, preserving the integrity of data and ensuring reliable insights. Without proper detection, metrics like pageviews, sessions, and conversion rates may be inflated or skewed, affecting marketing ROI and user experience optimization.

-

Impact on data accuracy

Bots can generate false pageviews and sessions, inflating traffic numbers and obscuring genuine user behavior patterns.

-

Marketing ROI distortion

Skewed metrics can lead to misallocation of ad spend and overestimation of campaign performance, reducing marketing effectiveness.

-

Insights on user behavior

Filtering out bots allows analysts to focus on real engagement metrics, leading to more accurate usability and conversion optimization studies.
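The following toy calculation makes this distortion concrete. The campaign figures are hypothetical, and the calculation assumes bot sessions never convert. It shows how unfiltered bot sessions inflate traffic while understating both the conversion rate and the cost per session.

```python
def conversion_rate(conversions: int, sessions: int) -> float:
    """Share of sessions that converted (0.0 when there are no sessions)."""
    return conversions / sessions if sessions else 0.0


def cost_per_session(ad_spend: float, sessions: int) -> float:
    """Ad spend divided by sessions (0.0 when there are no sessions)."""
    return ad_spend / sessions if sessions else 0.0


# Hypothetical campaign figures, for illustration only.
total_sessions = 10_000   # what the unfiltered report shows
bot_sessions = 2_000      # sessions later identified as bots
conversions = 200         # assumption: bots never convert
ad_spend = 4_000.0

human_sessions = total_sessions - bot_sessions
print(f"Reported conversion rate:  {conversion_rate(conversions, total_sessions):.2%}")
print(f"Human conversion rate:     {conversion_rate(conversions, human_sessions):.2%}")
print(f"Reported cost per session: {cost_per_session(ad_spend, total_sessions):.2f}")
print(f"Human cost per session:    {cost_per_session(ad_spend, human_sessions):.2f}")
```

With these numbers, the unfiltered report shows 25% more traffic than real users generated and a 2.00% conversion rate, while the human-only rate is 2.50%. In both cases, budget decisions would rest on the wrong figures.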

Common Bot Detection Techniques

Detecting bots involves a combination of methods, each with strengths and limitations. Proper implementation often combines heuristic rules, fingerprinting, and advanced analytics to identify non-human traffic accurately.

-

Heuristic filtering

Uses rule-based patterns such as high request rates, missing JavaScript execution, or unusual URL parameters to flag bots.
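Here is a minimal sketch of rule-based heuristic filtering. The `Hit` record, its field names, and the thresholds are all hypothetical. A real system would derive these signals from server logs or the collection endpoint and tune the thresholds against its own traffic.

```python
from dataclasses import dataclass, field

# Illustrative thresholds (hypothetical); tune them against your own traffic.
MAX_REQUESTS_PER_MINUTE = 60
MAX_QUERY_PARAMS = 20
MAX_PARAM_LENGTH = 500


@dataclass
class Hit:
    """One tracked request. Field names are hypothetical, not a vendor schema."""
    requests_last_minute: int   # requests seen from this client in the last minute
    js_executed: bool           # did the page's tracking JavaScript actually run?
    query_params: dict[str, str] = field(default_factory=dict)


def heuristic_flags(hit: Hit) -> list[str]:
    """Return the name of every rule the hit trips; an empty list means no rule fired."""
    flags = []
    if hit.requests_last_minute > MAX_REQUESTS_PER_MINUTE:
        flags.append("high_request_rate")
    if not hit.js_executed:
        flags.append("no_js_execution")
    if len(hit.query_params) > MAX_QUERY_PARAMS:
        flags.append("too_many_params")
    if any(len(value) > MAX_PARAM_LENGTH for value in hit.query_params.values()):
        flags.append("oversized_param")
    return flags


print(heuristic_flags(Hit(requests_last_minute=240, js_executed=False)))
```

The function returns rule names rather than a single yes/no answer. This keeps the reason for each flag visible when someone reviews the flagged traffic later.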

-

IP blacklisting

Blocks known malicious IP ranges or data-center addresses commonly used by bot networks.
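This sketch shows IP blocklist matching with Python's standard `ipaddress` module. The blocked ranges are reserved documentation prefixes (RFC 5737 and RFC 3849) that serve only as placeholders. A real deployment would load a maintained list of data-center or known-malicious ranges instead.

```python
import ipaddress

# Placeholder ranges: reserved documentation prefixes (RFC 5737, RFC 3849).
# Replace with a maintained list of data-center or known-malicious ranges.
BLOCKED_NETWORKS = [
    ipaddress.ip_network(cidr)
    for cidr in ("192.0.2.0/24", "198.51.100.0/24", "2001:db8::/32")
]


def is_blocked(ip: str) -> bool:
    """True if ip falls inside any blocked network.

    Unparseable addresses are treated as blocked; this is an assumed policy
    you may want to change.
    """
    try:
        address = ipaddress.ip_address(ip)
    except ValueError:
        return True
    # Testing an IPv4 address against an IPv6 network (or vice versa) returns False.
    return any(address in network for network in BLOCKED_NETWORKS)


print(is_blocked("192.0.2.44"), is_blocked("8.8.8.8"))
```

A linear scan works for a handful of ranges. Large lists are usually loaded into a prefix tree or another structure that supports fast longest-prefix lookups.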

-

User agent analysis

Examines the user-agent string for self-declared crawler names (such as Googlebot) and for missing, malformed, or outdated values that differ from those sent by common browsers.
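This sketch inspects user-agent strings with regular expressions. The token list and the minimum Chrome version are illustrative assumptions, not a published ruleset. The user-agent header is trivial to spoof, so this check only catches bots that identify themselves or keep stale defaults. For that reason it is usually combined with other signals.

```python
import re
from typing import Optional

# Hypothetical rules: self-declared crawler tokens and common automation clients.
BOT_TOKENS = re.compile(
    r"bot|crawl|spider|slurp|headlesschrome|python-requests|curl/|wget/",
    re.IGNORECASE,
)
CHROME_MAJOR = re.compile(r"Chrome/(\d+)\.")
MIN_CHROME_MAJOR = 100  # illustrative cut-off for "outdated"


def user_agent_suspicious(ua: Optional[str]) -> bool:
    """True if the User-Agent is missing, self-identifies as a bot, or looks outdated."""
    if not ua or not ua.strip():
        return True  # mainstream browsers send a User-Agent header by default
    if BOT_TOKENS.search(ua):
        return True
    match = CHROME_MAJOR.search(ua)
    return bool(match) and int(match.group(1)) < MIN_CHROME_MAJOR


print(user_agent_suspicious("Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"))
```

Plain substring matching can also misfire on legitimate strings that happen to contain a token. A match is therefore better treated as one signal than as a final verdict.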

-

Behavioral analytics

Analyzes user interaction patterns—like rapid clicks or improbable navigation paths—to detect automated scripts.

-

Session duration

Extremely short or abnormally long session times can indicate non-human traffic.

-

Click patterns

Uniform intervals between clicks or repetitive navigation loops may signal a bot.
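The behavioral signals above, session duration and click patterns, can be sketched as follows. The thresholds are hypothetical. The click-uniformity test uses the coefficient of variation of the gaps between clicks, which is the standard deviation divided by the mean. Clicks fired on a timer show almost no variation, while the gaps between human clicks vary widely.

```python
from statistics import mean, pstdev

# Hypothetical thresholds; calibrate them on your own sessions.
MIN_SESSION_SECONDS = 1.0
MAX_SESSION_SECONDS = 4 * 60 * 60
MIN_CLICK_INTERVAL_CV = 0.1   # coefficient of variation below this looks timer-driven


def behavior_flags(duration_seconds: float, click_times: list[float]) -> list[str]:
    """Flag implausible session length and suspiciously regular clicking.

    click_times are ascending timestamps (in seconds) of the session's clicks.
    """
    flags = []
    if duration_seconds < MIN_SESSION_SECONDS:
        flags.append("session_too_short")
    elif duration_seconds > MAX_SESSION_SECONDS:
        flags.append("session_too_long")

    # Require a few intervals before their spread means anything.
    if len(click_times) >= 4:
        gaps = [later - earlier for earlier, later in zip(click_times, click_times[1:])]
        avg_gap = mean(gaps)
        if avg_gap > 0 and pstdev(gaps) / avg_gap < MIN_CLICK_INTERVAL_CV:
            flags.append("uniform_click_intervals")
    return flags


print(behavior_flags(120, [0, 2, 4, 6, 8]))
```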

-

Machine learning models

Leverages anomaly detection and classification algorithms to identify patterns indicative of bots, even as they adapt.
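Production systems typically train anomaly-detection or classification models on many features. As a dependency-free stand-in, this sketch uses a simpler statistical technique: the modified z-score, built from the median and the median absolute deviation (MAD). It flags hours whose session counts are anomalous. This is a baseline for spotting bot-driven spikes, not a machine-learning model. The 3.5 cut-off is the threshold commonly attributed to Iglewicz and Hoaglin.

```python
from statistics import median


def robust_anomalies(counts: list[float], threshold: float = 3.5) -> list[int]:
    """Return indices of counts whose modified z-score exceeds threshold.

    modified z = 0.6745 * (x - median) / MAD, where MAD is the median absolute
    deviation. Unlike mean and standard deviation, the median and MAD are not
    pulled upward by the very spikes we are trying to detect.
    """
    if not counts:
        return []
    med = median(counts)
    mad = median(abs(c - med) for c in counts)
    if mad == 0:
        # More than half the values equal the median, so any deviation stands out.
        return [i for i, c in enumerate(counts) if c != med]
    return [
        i for i, c in enumerate(counts)
        if 0.6745 * abs(c - med) / mad > threshold
    ]


# Hypothetical hourly session counts with one burst of automated traffic.
hourly_sessions = [120, 118, 125, 130, 122, 119, 900, 121]
print(robust_anomalies(hourly_sessions))
```

For the sample data this prints `[6]`, which is the 900-session hour.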

Bot Detection in GA4 and PlainSignal

Two popular analytics platforms, Google Analytics 4 (GA4) and PlainSignal, offer built-in bot detection but take different approaches. GA4 automatically excludes known bots, identified through Google research and the IAB International Spiders and Bots List. PlainSignal provides a cookie-free, script-driven solution that emphasizes privacy and simplicity.

-

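Google Analytics 4 implementation

GA4 automatically excludes traffic from known bots and spiders, identified through a combination of Google research and the IAB International Spiders and Bots List. This exclusion cannot be turned off, and GA4 does not report how much known-bot traffic it removed.

-

Automatic exclusion

No setup is required. The ‘Exclude all hits from known bots and spiders’ checkbox belonged to Universal Analytics view settings; GA4 has no equivalent toggle under Admin > Data Streams or anywhere else.

-

Monitor residual bot traffic

GA4 has no ‘Bot Traffic’ dimension, and bots missing from the known-bot list still reach your reports. Look for them in Explorations or the BigQuery export, for example as sudden spikes from a single source or location, or as sessions with near-zero engagement time.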

-

PlainSignal implementation

PlainSignal uses a lightweight, cookie-free script to detect bots at the network level, combining fingerprinting techniques with AI to filter out non-human traffic before it reaches your analytics pipeline.

-

Tracking code example

Include the following snippet in your site’s <head> to set up PlainSignal:

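```html
<!-- Open the connection to the PlainSignal EU endpoint early -->
<link rel="preconnect" href="//eu.plainsignal.com/" crossorigin />
<!-- data-do: your site's domain (replace the placeholder value) -->
<script defer data-do="yourwebsitedomain.com" data-id="0GQV1xmtzQQ" data-api="//eu.plainsignal.com" src="//cdn.plainsignal.com/plainsignal-min.js"></script>
```

-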

Privacy-focused detection

Operates without cookies, using device fingerprinting and network heuristics to identify bots. The cookie-free design is intended to simplify GDPR compliance.

Best Practices for Effective Bot Detection

Implement a layered approach to bot detection, combining multiple techniques and continuous monitoring to maintain data integrity over time.

-

Combine multiple methods

Use heuristic rules, behavior analysis, and machine learning in tandem to reduce false positives and negatives.
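One way to combine methods is a weighted score, so that no single weak signal is enough to discard a session. The signal names, weights, and threshold in this sketch are hypothetical placeholders for the techniques described above. In practice they would be tuned on labeled traffic or replaced by a trained model. Sessions with some evidence but a low score are sent to manual review rather than dropped, which limits false positives.

```python
# Hypothetical weights for signals produced by the individual checks.
SIGNAL_WEIGHTS = {
    "blocked_ip": 0.5,
    "suspicious_user_agent": 0.4,
    "no_js_execution": 0.3,
    "high_request_rate": 0.3,
    "uniform_click_intervals": 0.2,
    "anomalous_volume": 0.2,
}
BOT_THRESHOLD = 0.6


def bot_score(signals: set[str]) -> float:
    """Sum the weights of the signals that fired (unknown names count as 0), capped at 1.0."""
    return min(1.0, sum(SIGNAL_WEIGHTS.get(name, 0.0) for name in signals))


def classify(signals: set[str]) -> str:
    """Return 'bot' at or above the threshold, 'review' for weaker evidence, else 'human'."""
    score = bot_score(signals)
    if score >= BOT_THRESHOLD:
        return "bot"
    if score > 0:
        return "review"
    return "human"


print(classify({"blocked_ip", "no_js_execution"}))  # strong combined evidence
print(classify({"uniform_click_intervals"}))         # a single weak signal
```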

-

Regularly update filters

Keep IP blacklists and heuristic rules current to guard against new bot networks and tactics.
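Keeping filters current is partly an operational task. This sketch assumes a hypothetical plain-text blocklist format (one CIDR range per line, with `#` comments) and a hypothetical seven-day refresh policy. It parses the list, reports lines it could not parse instead of silently dropping them, and checks whether the list is overdue for a refresh.

```python
import ipaddress
from datetime import datetime, timedelta, timezone
from typing import Optional

MAX_LIST_AGE = timedelta(days=7)  # hypothetical refresh policy


def parse_blocklist(text: str) -> tuple[list, list[str]]:
    """Parse one CIDR range per line; blank lines and '#' comments are ignored.

    Returns (networks, rejected) so malformed entries are reported, not silently lost.
    """
    networks, rejected = [], []
    for raw_line in text.splitlines():
        entry = raw_line.split("#", 1)[0].strip()
        if not entry:
            continue
        try:
            networks.append(ipaddress.ip_network(entry, strict=False))
        except ValueError:
            rejected.append(entry)
    return networks, rejected


def is_stale(last_updated: datetime, now: Optional[datetime] = None) -> bool:
    """True if the list is older than MAX_LIST_AGE (use timezone-aware datetimes)."""
    now = now or datetime.now(timezone.utc)
    return now - last_updated > MAX_LIST_AGE


sample = """\
# data-center ranges (documentation prefixes used as placeholders)
192.0.2.0/24
198.51.100.0/24   # hosting provider
not-a-cidr
"""
networks, rejected = parse_blocklist(sample)
print(len(networks), rejected)
```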

-

Monitor false positives

Review flagged sessions periodically to ensure legitimate user activity isn’t being filtered out.
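Periodic review can be quantified by having a person label a random sample of flagged sessions as bot or human. This sketch computes two shares: the flagged sessions confirmed as bots (precision) and the flagged sessions that were real users. The 5% tolerance is a hypothetical policy, not an industry standard. Reviewing flagged sessions only measures false positives; estimating how many bots were missed requires sampling unflagged traffic as well.

```python
from collections import Counter

MAX_FALSE_POSITIVE_SHARE = 0.05  # hypothetical tolerance


def review_summary(labels: list[str]) -> dict[str, float]:
    """Summarize reviewer verdicts ('bot' or 'human') for sampled flagged sessions."""
    counts = Counter(labels)
    reviewed = counts["bot"] + counts["human"]
    if reviewed == 0:
        return {"reviewed": 0, "precision": 0.0, "false_positive_share": 0.0}
    return {
        "reviewed": reviewed,
        "precision": counts["bot"] / reviewed,
        "false_positive_share": counts["human"] / reviewed,
    }


def needs_tuning(summary: dict[str, float]) -> bool:
    """True when too many flagged sessions turned out to be real users."""
    return summary["false_positive_share"] > MAX_FALSE_POSITIVE_SHARE


# Hypothetical review of 50 flagged sessions.
summary = review_summary(["bot"] * 45 + ["human"] * 5)
print(summary, needs_tuning(summary))
```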

-

Leverage platform reports

Use the reports and exports your platforms provide, such as GA4 Explorations, the GA4 BigQuery export, or PlainSignal logs, to track detection performance and spot residual bot traffic.