Published on 2025-06-26T04:29:49Z

What is Data Cleaning in Analytics? Examples & Best Practices

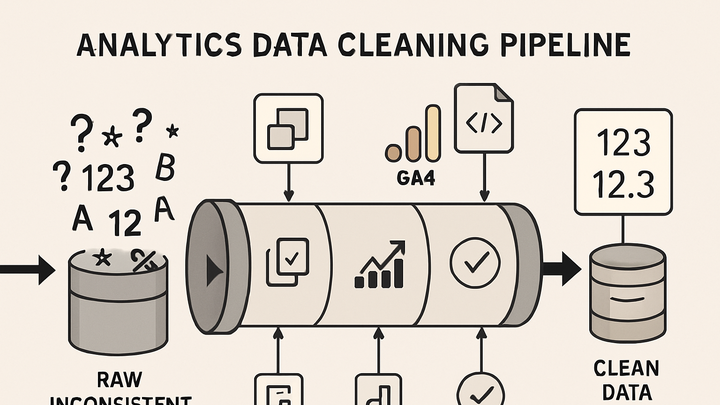

Data cleaning in analytics is the systematic process of detecting, correcting, and removing errors or inconsistencies in raw data before analysis. It involves techniques such as deduplication, handling missing values, normalization, and validation to ensure datasets are accurate, complete, and consistent. Clean data leads to more reliable insights, better decision-making, and smoother compliance with privacy regulations. Tools such as Google Analytics 4 (GA4) offer built-in data filters and Data Import, while platforms such as PlainSignal simplify the process with automatic bot filtering and minimal data collection. Establishing a robust data cleaning workflow is essential for maintaining data quality, optimizing performance, and building trust in analytics outcomes.

Data cleaning

Process of detecting and correcting errors in analytics data for accurate, reliable insights.

Why Data Cleaning Matters

Clean data is the foundation of trustworthy analytics. Without it, insights can be skewed by errors, leading to poor decisions, regulatory issues, and wasted resources.

-

Enhanced data accuracy

Removing errors, duplicates, and inconsistencies ensures that metrics and trends reflect reality.

-

Improved decision-making

Reliable data empowers teams to make informed, data-driven choices with confidence.

-

Compliance and privacy

Clean data practices help identify and remove sensitive personal information, aligning with GDPR, CCPA, and other regulations.

-

PII anonymization

Techniques like hashing, tokenization, or masking to protect personal identifiers.

-

Data retention policies

Enforcing automated deletion or archiving of data according to organizational rules and laws.

-
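The privacy practices above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a compliance tool: it pseudonymizes an identifier with a keyed hash (HMAC-SHA256 from the standard library), masks an email for display, and drops records older than a retention window. The key, field names (`email`, `timestamp`), and the 365-day window are hypothetical placeholders. Note that keyed hashing is pseudonymization rather than true anonymization: under GDPR, pseudonymized data is still personal data.

```python
import hashlib
import hmac
from datetime import datetime, timedelta, timezone

# Hypothetical key for illustration only: load a real one from a secrets store.
SECRET_KEY = b"replace-with-a-secret-key"


def pseudonymize(value: str) -> str:
    """Replace an identifier with a keyed SHA-256 hash (HMAC).

    The same input always yields the same token, so records can still be
    joined, but the raw value is not stored.
    """
    normalized = value.strip().lower()
    return hmac.new(SECRET_KEY, normalized.encode("utf-8"), hashlib.sha256).hexdigest()


def mask_email(email: str) -> str:
    """Mask all but the first character of the local part: 'jane@x.com' -> 'j***@x.com'."""
    local, _, domain = email.partition("@")
    return f"{local[:1]}***@{domain}"


def apply_retention(records, max_age_days, now=None):
    """Keep only records whose 'timestamp' falls inside the retention window."""
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(days=max_age_days)
    return [r for r in records if r["timestamp"] >= cutoff]


# Usage with made-up sample records.
records = [
    {"email": "Jane@Example.com", "timestamp": datetime(2025, 6, 1, tzinfo=timezone.utc)},
    {"email": "bob@example.org", "timestamp": datetime(2024, 1, 1, tzinfo=timezone.utc)},
]
now = datetime(2025, 6, 26, tzinfo=timezone.utc)
kept = apply_retention(records, max_age_days=365, now=now)
cleaned = [
    {
        "user_key": pseudonymize(r["email"]),
        "email_masked": mask_email(r["email"]),
        "timestamp": r["timestamp"],
    }
    for r in kept
]
```

A keyed hash is used instead of a plain SHA-256 because unkeyed hashes of emails can be reversed by hashing lists of candidate addresses and comparing the results.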

Common Data Cleaning Techniques

Core methods used to prepare raw analytics data for accurate analysis.

-

Deduplication

Identifying and removing duplicate records to prevent double-counting in reports.

-

Exact match detection

Removing entries with identical unique identifiers.

-

Fuzzy matching

Using similarity algorithms to detect near-duplicates.

-
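Both deduplication approaches can be illustrated with Python's standard library. In this minimal sketch, exact matching keeps the first record per identifier, and fuzzy matching uses `difflib.SequenceMatcher` as one possible similarity measure. The field names and the 0.9 threshold are illustrative assumptions; real projects tune the threshold against labeled examples.

```python
from difflib import SequenceMatcher


def dedupe_exact(records, key):
    """Exact match: keep the first record seen for each unique identifier."""
    seen = set()
    unique = []
    for record in records:
        if record[key] not in seen:
            seen.add(record[key])
            unique.append(record)
    return unique


def similarity(a: str, b: str) -> float:
    """Similarity ratio in [0, 1], computed after trimming and lowercasing."""
    return SequenceMatcher(None, a.strip().lower(), b.strip().lower()).ratio()


def find_near_duplicates(values, threshold=0.9):
    """Fuzzy match: return pairs whose similarity meets the (assumed) threshold."""
    pairs = []
    for i in range(len(values)):
        for j in range(i + 1, len(values)):
            if similarity(values[i], values[j]) >= threshold:
                pairs.append((values[i], values[j]))
    return pairs


# Usage with made-up data.
events = [
    {"event_id": "e1", "page": "/pricing"},
    {"event_id": "e1", "page": "/pricing"},  # exact duplicate
    {"event_id": "e2", "page": "/blog"},
]
unique_events = dedupe_exact(events, key="event_id")

companies = ["Acme Corp", "ACME Corp.", "Globex"]
near = find_near_duplicates(companies, threshold=0.9)
```

The pairwise comparison is quadratic, which is fine for small lists; larger datasets usually group candidates first (for example by a shared prefix) and only compare within each group.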

-

Handling missing values

Approaches for managing gaps in data that can distort analysis.

-

Deletion

Removing records or fields with missing data when appropriate.

-

Imputation

Filling in missing values with a summary statistic such as the mean or median, or with values predicted by a model.

-

-
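Here is a minimal Python sketch of deletion and imputation. It assumes records are dictionaries where `None` marks a missing value; the field names `session_id` and `duration_s` are hypothetical. Required identifiers are handled by deletion, while a numeric field is imputed with the median (or mean) of the observed values.

```python
from statistics import mean, median


def drop_incomplete(records, required):
    """Deletion: drop records missing any required field (None counts as missing)."""
    return [r for r in records if all(r.get(f) is not None for f in required)]


def impute(records, field, strategy="median"):
    """Imputation: fill missing numeric values with the mean or median of observed values."""
    stats = {"mean": mean, "median": median}
    if strategy not in stats:
        raise ValueError(f"Unknown strategy: {strategy!r}")
    observed = [r[field] for r in records if r.get(field) is not None]
    if not observed:
        return [dict(r) for r in records]  # nothing to impute from
    fill = stats[strategy](observed)
    # Build new dicts so the input records are not mutated.
    return [{**r, field: fill if r.get(field) is None else r[field]} for r in records]


# Usage with made-up session data.
sessions = [
    {"session_id": "s1", "duration_s": 30},
    {"session_id": "s2", "duration_s": None},
    {"session_id": "s3", "duration_s": 90},
    {"session_id": None, "duration_s": 45},  # no identifier: dropped
]
complete = drop_incomplete(sessions, required=["session_id"])
filled = impute(complete, "duration_s", strategy="median")
```

The median is often preferred for skewed metrics such as session duration, because a few very long sessions pull the mean upward.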

Normalization

Standardizing formats, units, and scales to ensure consistency across datasets.
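Normalization covers formats, units, and scales; this Python sketch shows one small example of each. The country lookup table, the accepted date formats (including a day-first `DD/MM/YYYY` assumption), and the millisecond input unit are all hypothetical choices made for illustration.

```python
from datetime import datetime


def normalize_country(value: str) -> str:
    """Format: map a few spellings to ISO 3166-1 alpha-2 codes (tiny illustrative table)."""
    aliases = {"usa": "US", "united states": "US", "us": "US",
               "germany": "DE", "deutschland": "DE"}
    key = value.strip().lower()
    return aliases.get(key, value.strip().upper())


def normalize_date(value: str) -> str:
    """Format: convert a few assumed input formats to ISO 8601 (YYYY-MM-DD)."""
    # "%d/%m/%Y" assumes day-first dates; "%b" expects English month names.
    for fmt in ("%Y-%m-%d", "%d/%m/%Y", "%b %d, %Y"):
        try:
            return datetime.strptime(value.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"Unrecognized date format: {value!r}")


def ms_to_seconds(value_ms: float) -> float:
    """Unit: convert milliseconds to seconds."""
    return value_ms / 1000.0


def min_max_scale(values):
    """Scale: map numbers to [0, 1]; a constant column maps to all zeros."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]
```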

-

Validation

Applying rules and constraints to verify data accuracy and integrity.

-

Regex checks

Ensuring text fields match expected patterns, such as email formats.

-

Range constraints

Verifying numerical values fall within acceptable boundaries.

-
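Regex checks and range constraints can be combined into a single validation function. This minimal Python sketch returns the names of the fields that fail their rules. The email pattern is deliberately simple (full RFC 5322 validation is far more complex), and the fields and bounds in `RANGES` are hypothetical examples.

```python
import re

# Deliberately simple pattern: something@something.tld with no spaces.
EMAIL_RE = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")

# Hypothetical range rules: field -> (minimum, maximum), inclusive.
RANGES = {
    "session_duration_s": (0, 86_400),  # at most one day
    "scroll_depth_pct": (0, 100),
}


def validate(record):
    """Return the names of fields that fail validation (empty list means valid)."""
    errors = []
    email = record.get("email") or ""
    if not EMAIL_RE.fullmatch(email):
        errors.append("email")
    for field, (lo, hi) in RANGES.items():
        value = record.get(field)
        # Missing values are reported here too; handle them earlier if preferred.
        if value is None or not (lo <= value <= hi):
            errors.append(field)
    return errors


# Usage with made-up records.
good = {"email": "jane@example.com", "session_duration_s": 120, "scroll_depth_pct": 75}
bad = {"email": "jane@example", "session_duration_s": -5, "scroll_depth_pct": 140}
```

Returning a list of failures, rather than a single true/false, makes it easy to log which rule rejected a record.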

Data Cleaning in Analytics Tools

Modern analytics platforms provide features to automate and simplify parts of the cleaning process.

-

Google Analytics 4 (GA4)

GA4 includes data filters, internal traffic controls, and Data Import for cleaning and enriching datasets.

-

Internal traffic filters

Excluding events generated by internal users, such as development and testing teams, using IP-based internal traffic rules.

-

Invalid data exclusion

Automatically filtering out events with malformed client IDs or other anomalies.

-
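In GA4, internal traffic rules are configured in the admin interface rather than with code. To show the idea behind an IP-based rule, here is a minimal Python sketch that applies the same kind of check to your own raw event logs. The network ranges are hypothetical placeholders (203.0.113.0/24 is a documentation range), and the `ip` field is an assumption about your log format.

```python
import ipaddress

# Hypothetical internal networks: an office range and a private VPN range.
INTERNAL_NETWORKS = [
    ipaddress.ip_network("203.0.113.0/24"),
    ipaddress.ip_network("10.0.0.0/8"),
]


def is_internal(ip: str) -> bool:
    """True if the address falls in any internal network (IPv6 never matches IPv4 ranges)."""
    addr = ipaddress.ip_address(ip)
    return any(addr in net for net in INTERNAL_NETWORKS)


def exclude_internal(events):
    """Drop events whose source IP belongs to an internal network."""
    return [e for e in events if not is_internal(e["ip"])]


# Usage with made-up events.
events = [
    {"ip": "203.0.113.42", "page": "/"},        # office traffic: excluded
    {"ip": "198.51.100.7", "page": "/pricing"},
    {"ip": "2001:db8::1", "page": "/blog"},
]
external = exclude_internal(events)
```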

-

PlainSignal

A cookie-free analytics solution that minimizes data noise and offers built-in bot and crawler filtering.

-

Implementation example

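```html
<!-- Open an early connection to the PlainSignal EU API host -->
<link rel='preconnect' href='//eu.plainsignal.com/' crossorigin />
<!-- Load the script without blocking HTML parsing; replace yourwebsitedomain.com with your own domain -->
<script defer data-do='yourwebsitedomain.com' data-id='0GQV1xmtzQQ' data-api='//eu.plainsignal.com' src='//cdn.plainsignal.com/plainsignal-min.js'></script>
```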

-

Best Practices and Tips

Guidelines to optimize and maintain an effective data cleaning workflow.

-

Automate your pipeline

Use scheduled scripts or ETL tools to apply cleaning rules consistently and reduce manual effort.

-

Document cleaning rules

Maintain a data dictionary and transformation logs to ensure transparency and reproducibility.

-

Monitor data quality

Implement dashboards and alerts to catch anomalies or degradation in data quality over time.

-

Review regularly

Periodically audit cleaning processes and update them to reflect new data sources or business needs.
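Several of these practices fit together in a small pipeline. This minimal Python sketch keeps the cleaning rules as an ordered list of functions (automation), logs row counts after each step (a lightweight transformation log), and warns when the share of dropped rows exceeds a threshold (monitoring). The rule functions, the `id` field, and the 20% threshold are hypothetical; a scheduler such as cron would typically call `run_pipeline` on each new batch.

```python
import logging
from typing import Callable, Dict, List

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("cleaning")

Record = Dict[str, object]
Step = Callable[[List[Record]], List[Record]]


def drop_missing_ids(records: List[Record]) -> List[Record]:
    """Rule: every record needs a non-empty 'id'."""
    return [r for r in records if r.get("id")]


def dedupe_by_id(records: List[Record]) -> List[Record]:
    """Rule: keep the first record per 'id'."""
    seen, out = set(), []
    for r in records:
        if r.get("id") not in seen:
            seen.add(r.get("id"))
            out.append(r)
    return out


# Documented, ordered cleaning rules.
PIPELINE: List[Step] = [drop_missing_ids, dedupe_by_id]


def run_pipeline(records, steps=PIPELINE, max_drop_rate=0.2):
    """Apply each rule in order, log row counts, and flag an excessive drop rate."""
    current = list(records)
    for step in steps:
        before = len(current)
        current = step(current)
        log.info("%s: %d -> %d rows", step.__name__, before, len(current))
    drop_rate = 1 - len(current) / len(records) if records else 0.0
    alert = drop_rate > max_drop_rate
    if alert:
        log.warning("Dropped %.0f%% of rows (threshold %.0f%%)",
                    drop_rate * 100, max_drop_rate * 100)
    return current, drop_rate, alert


# Usage with a made-up batch.
raw = [{"id": "a"}, {"id": "a"}, {"id": None}, {"id": "b"}, {"id": "c"}]
cleaned, rate, alert = run_pipeline(raw)
```

A sudden jump in the drop rate usually signals an upstream change, such as a renamed field or a broken tag, rather than genuinely worse traffic, which is why it makes a useful alert.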