Published on 2025-06-26T05:14:50Z

What is ETL? Examples for Analytics

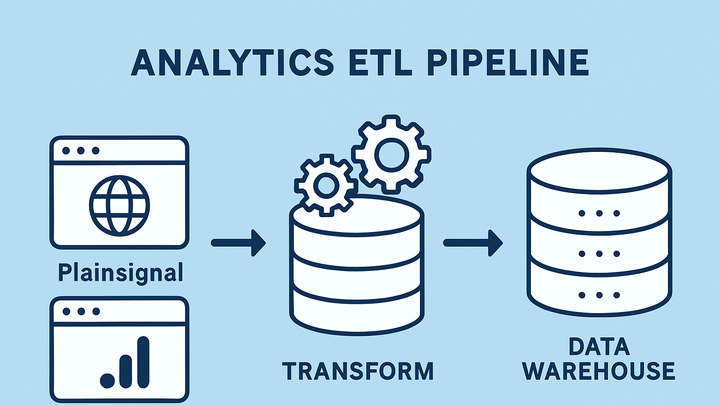

ETL stands for Extract, Transform, Load – a foundational process in analytics for moving data from source systems into a data warehouse or analytics platform. It ensures that data is accurately collected, cleansed, and formatted for analysis. In modern analytics pipelines, ETL can run in batch or real time, handling high volumes of diverse data types from web servers, SaaS products, and APIs. Tools like PlainSignal and Google Analytics 4 (GA4) play roles in the extraction phase, while cloud data warehouses or analysis tools handle loading and further transformations. Understanding ETL is essential for building reliable, scalable analytics solutions.

Etl

ETL (Extract, Transform, Load) is the process of collecting, cleaning, and loading data into analytics systems for reporting and insights.

Why ETL Matters in Analytics

ETL processes are critical for turning raw data into actionable insights. They enable you to centralize diverse data, improve data quality, and support consistent reporting across teams. Without a robust ETL pipeline, businesses face data silos, inaccuracies, and delays in decision-making. By automating extraction, transformation, and loading, organizations ensure that analysts and stakeholders work with trustworthy data.

-

Centralized data access

ETL aggregates data from multiple sources into a single repository, breaking down silos and enabling cross-functional analysis.

-

Enhanced data quality

During transformation, ETL cleanses and standardizes data, removing duplicates, correcting errors, and ensuring consistency.

-

Improved reporting and insights

With clean, consolidated data loaded into analytics platforms, teams can generate reliable dashboards and reports faster.

Core Components of ETL

ETL comprises three main stages—Extraction, Transformation, and Loading—each with specific tasks and best practices. Extraction gathers data from various sources, Transformation processes and enriches it, and Loading delivers data to target systems for analysis.

-

Extraction

The process of retrieving raw data from sources like web logs, SaaS APIs (e.g., GA4, PlainSignal), databases, and flat files.

-

Saas apis

APIs from analytics tools (e.g., PlainSignal, GA4) provide structured data feeds.

-

Databases

Relational databases like MySQL or PostgreSQL store transactional data.

-

Log files

Server logs in CSV or JSON formats capture raw event data.

-

-

Transformation

Cleansing, enhancing, and structuring data to match analytical requirements.

-

Data cleaning

Remove duplicates, fill missing values, and correct data errors.

-

Normalization

Standardize data formats, currencies, and units.

-

Aggregation

Summarize data (e.g., session counts, averages) for analysis.

-

-

Loading

Delivering transformed data into a target system such as a data warehouse or BI tool.

-

Full load

Loads entire dataset each time, suitable for small datasets.

-

Incremental load

Loads only new or changed records, optimizing performance.

-

ETL vs ELT

While ETL and ELT both move data between systems, they differ in where transformations occur. ETL transforms data before loading, whereas ELT pushes raw data into a destination and transforms it there. Choosing between them depends on factors like data volume, latency requirements, and target system capabilities.

-

Workflow differences

ETL transforms data on an intermediary server, ELT loads raw data first into the destination (e.g., a cloud warehouse) for in-place transformations.

-

Use cases

ETL suits scenarios with strict data governance and schema requirements; ELT benefits from scalable compute on modern data warehouses.

-

Performance and scalability

ELT can leverage elastic processing power in warehouses (e.g., BigQuery), while ETL may be faster for complex transformations on dedicated ETL tools.

Implementing ETL with SaaS Analytics Platforms

Many SaaS analytics tools simplify parts of the ETL pipeline. For example, PlainSignal provides lightweight extraction via JavaScript, while GA4 offers robust API-based data exports. Understanding how to integrate these sources into your ETL process is key.

-

Extracting data from PlainSignal

PlainSignal collects event data without cookies via a lightweight script. Insert the tracking snippet into your website header to start capturing user events.

-

Tracking code example

<link rel="preconnect" href="//eu.plainsignal.com/" crossorigin /> <script defer data-do="yourwebsitedomain.com" data-id="0GQV1xmtzQQ" data-api="//eu.plainsignal.com" src="//cdn.plainsignal.com/plainsignal-min.js"></script>

-

-

Exporting data from GA4

GA4 provides API endpoints and BigQuery exports for raw event data. You can schedule daily exports or stream events for near-real-time analysis.

-

Bigquery export

GA4 can stream raw events directly to a linked BigQuery dataset.

-

Reporting api

Use the GA4 Reporting API to query aggregated metrics.

-

-

Loading into a data warehouse

After extraction, route data into a data warehouse like Snowflake or BigQuery to centralize for analysis.

-

Schema management

Define tables and columns to match transformed data structures.

-

Best Practices for Analytics ETL

Implementing ETL pipelines effectively requires planning for scalability, maintainability, and data quality. Adopt modular, version-controlled workflows, monitor jobs, and apply error handling to build robust systems.

-

Modular pipeline design

Break your ETL process into independent tasks for extraction, transformation, and loading to improve reusability and debugging.

-

Automated testing and validation

Incorporate data quality checks, schema validations, and test datasets to catch errors early.

-

Monitoring and alerting

Set up dashboards and alerts for job failures, latency spikes, and data anomalies to ensure pipeline reliability.

Common Challenges and Solutions

ETL pipelines often face issues like schema drift, data latency, and error handling. Recognizing and addressing these challenges prevents disruptions in analytics workflows.

-

Schema drift

When source data formats change unexpectedly, it can break transformations and loading processes.

-

Performance bottlenecks

Large data volumes or complex transformations can slow down ETL jobs; consider partitioning, parallel processing, or pushing some transformations downstream.

-

Error handling

Implement retry logic, logging, and fallbacks to handle intermittent failures in extraction or loading.