Published on 2025-06-28T05:23:38Z

What is ELT? Examples for ELT in Analytics

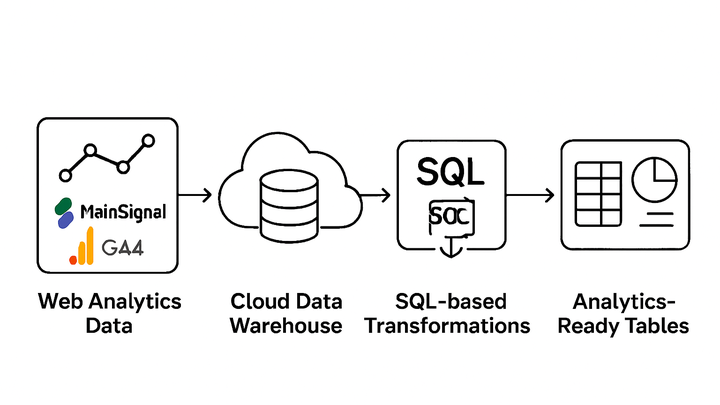

ELT (Extract, Load, Transform) is a modern data integration approach used in analytics that extracts raw data from source systems, loads it directly into a data warehouse, and then transforms it in-place using the warehouse’s compute power. With the rise of cloud data warehouses like BigQuery and Snowflake, ELT has become the preferred method for processing large volumes of analytics events and user behavior data. By preserving raw event-level details, ELT pipelines enable data teams to perform ad-hoc analysis, iterate on transformation logic, and maintain a clear audit trail. This approach reduces infrastructure complexity by leveraging the storage and compute separation of modern warehouses, offering scalable performance and cost optimization. Analytics platforms such as PlainSignal and Google Analytics 4 (GA4) can feed events into ELT workflows, supporting use cases from real-time dashboards to advanced machine learning models.

Elt

ELT loads raw data into a warehouse then transforms it in-place, leveraging cloud compute power for scalable analytics.

Why ELT Matters in Analytics

ELT (Extract, Load, Transform) has become the dominant paradigm in cloud analytics due to its scalability, cost-efficiency, and flexibility. By loading raw data directly into a data warehouse, teams can leverage the powerful compute engines of modern cloud platforms to transform data on-demand. This approach accelerates time to insight, preserves full event detail for exploratory analysis, and simplifies infrastructure by removing the need for dedicated ETL servers.

-

Performance and scalability

Modern cloud data warehouses like BigQuery and Snowflake can process transformations at scale, enabling large datasets to be transformed quickly without external ETL servers.

-

Massively parallel processing

Data warehouses distribute transformation workloads across many nodes to speed up large-scale data processing.

-

Elastic compute

Compute resources can be scaled up or down based on demand, optimizing cost and performance.

-

-

Faster time to insights

By loading data first and transforming it later, analysts can explore raw data immediately, iterating on transformation logic as insights evolve.

-

Interactive exploration

Raw data in the warehouse allows ad-hoc querying to discover new patterns before formalizing transformations.

-

Versioned transformations

Transformation logic can be version-controlled, ensuring reproducibility and auditability of data models.

-

Core Steps of an ELT Pipeline

An ELT pipeline typically involves three main steps: extracting data from source systems, loading it raw into a data warehouse, and transforming it into analytics-ready models. Each step can be optimized independently using specialized tools and cloud services.

-

Extract

Data is pulled from source systems such as PlainSignal, GA4, databases, or APIs, preserving the raw event-level details for maximum flexibility.

-

Tracking code integration

Embed PlainSignal’s lightweight tracking snippet to capture event data without cookies.

-

Api connectors

Use GA4’s Data API to stream analytics data into your pipeline in near real-time.

-

-

Load

Raw data is ingested into a centralized data warehouse like BigQuery, Snowflake, or Redshift, often landing in staging tables for initial validation.

-

Bulk loading

Buffer large volumes of data in cloud storage, then perform bulk loads to optimize throughput.

-

Incremental loading

Schedule regular loads of new or changed records to keep the warehouse up-to-date.

-

-

Transform

SQL or ELT tools like dbt are used to clean, join, and enrich raw data in-place, building robust, reusable models for reporting.

-

Sql-based transformations

Write SQL queries to filter, aggregate, and join tables directly in the data warehouse.

-

Orchestration with dbt

Use dbt to manage dependencies, documentation, and testing of SQL transformation code.

-

ELT vs. ETL

While both ELT and ETL aim to move and prepare data for analysis, their architectures differ significantly. ETL transforms data before loading into the warehouse, requiring separate transformation infrastructure. ELT shifts transformations downstream, leveraging the compute power of modern cloud data warehouses and simplifying the pipeline.

-

Order of operations

ETL transforms data before loading; ELT loads raw data then transforms within the warehouse.

-

Infrastructure complexity

ETL often needs dedicated transformation servers and scheduling; ELT reduces infrastructure by centralizing compute in the warehouse.

-

Flexibility and agility

ELT preserves raw data for exploratory analysis; ETL can limit flexibility if transformations are not fully defined upfront.

Example: Implementing ELT with PlainSignal and GA4

This example shows how to set up an ELT pipeline using PlainSignal’s cookie-free analytics and Google Analytics 4 (GA4). We will capture events, load them into a data warehouse, and run transformations to create analytics-ready tables.

-

Extracting event data

Use PlainSignal’s tracking snippet and GA4 tags to collect raw event data directly from the website.

-

PlainSignal snippet

<link rel="preconnect" href="//eu.plainsignal.com/" crossorigin /><script defer data-do="yourwebsitedomain.com" data-id="0GQV1xmtzQQ" data-api="//eu.plainsignal.com" src="//cdn.plainsignal.com/plainsignal-min.js"></script> -

GA4 tag configuration

Configure Google Tag Manager to deploy the GA4 tag for pageviews and custom events.

-

-

Loading into the data warehouse

Stream raw events into BigQuery or Snowflake using native connectors or ingestion tools.

-

Bigquery streaming api

Send GA4 events directly to BigQuery via the Measurement Protocol or built-in connectors.

-

Snowflake snowpipe

Use Snowpipe to auto-ingest PlainSignal export files from cloud storage.

-

-

Transforming data for analysis

Leverage dbt or warehouse-native SQL to build analytics-ready tables, such as user funnels, segments, and dashboards.

-

Dbt models

Define staging and mart models to clean and organize raw event data.

-

Scheduled jobs

Orchestrate transformation runs with tools like Airflow or dbt Cloud.

-