Published on 2025-06-26T04:40:33Z

What is Entity Recognition? Examples in PlainSignal and GA4

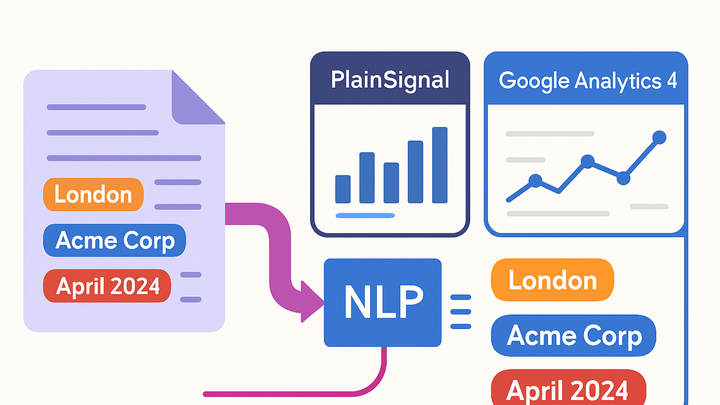

Entity Recognition, also known as Named Entity Recognition (NER), is a natural language processing (NLP) technique that automatically identifies and classifies key elements—such as names of people, locations, organizations, products, and more—within unstructured text data. In analytics, it transforms free-form text from customer feedback, chat logs, social posts, and other sources into structured fields that can be measured, segmented, and analyzed.

By extracting entities, analytics teams can enrich event tracking pipelines, create dynamic user segments (for example, users mentioning a specific product), and uncover insights beyond basic metrics. PlainSignal (a cookie-free, simple analytics solution) can ingest entity tags as custom event parameters. Google Analytics 4 (GA4) data can be exported to BigQuery, annotated with the Google Cloud Natural Language API, and reported on through custom dimensions. This glossary article covers the fundamentals, implementation steps in both PlainSignal and GA4, real-world use cases, and best practices for data quality and privacy compliance.

Entity recognition

Automatically extracts and classifies named entities from text for deeper analytics insights.

Understanding Entity Recognition

Entity Recognition is the NLP process of identifying and categorizing key elements, such as people, locations, and products, in unstructured text. It lays the foundation for turning free-form text into actionable analytics insights.

What is entity recognition?

At its core, Entity Recognition detects and labels segments of text that correspond to predefined categories (entities), such as names of people, organizations, locations, product names, dates, and more.

How entity recognition works

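At a high level, most Entity Recognition systems follow two steps: tokenization, which splits text into units, and classification, which tags each unit with an entity label or marks it as a non-entity. Multi-word entities are commonly handled with begin/inside tags (for example, B-ORG for the first token of an organization name and I-ORG for the tokens that follow).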

Tokenization

Splitting raw text into smaller units, typically words, subwords, or punctuation marks, for analysis.

Entity classification

Using hand-written rules, statistical models such as conditional random fields, or neural networks to assign entity labels to tokens.
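
To make the two steps concrete, here is a deliberately small Python sketch. It tokenizes with a regular expression and then classifies token spans with a hand-written lookup table (a gazetteer) instead of a trained model, so it illustrates the shape of the pipeline rather than how production NER systems reach their accuracy. The `GAZETTEER` entries and the function names are hypothetical.

```python
import re
from typing import NamedTuple


class Entity(NamedTuple):
    text: str
    label: str
    start: int  # character offset where the entity begins
    end: int  # character offset just past the entity


# Hypothetical gazetteer: lowercase token sequences mapped to entity labels.
# A production system would use a trained model instead of a lookup table.
GAZETTEER = {
    ("acme", "corp"): "ORGANIZATION",
    ("berlin",): "LOCATION",
    ("google", "analytics"): "PRODUCT",
    ("plainsignal",): "PRODUCT",
}
MAX_SPAN = max(len(key) for key in GAZETTEER)


def tokenize(text: str) -> list[tuple[str, int, int]]:
    """Step 1: split text into word tokens, keeping their character offsets."""
    return [(m.group(), m.start(), m.end()) for m in re.finditer(r"\w+", text)]


def recognize(text: str) -> list[Entity]:
    """Step 2: label token spans, preferring the longest gazetteer match."""
    tokens = tokenize(text)
    entities: list[Entity] = []
    i = 0
    while i < len(tokens):
        for size in range(min(MAX_SPAN, len(tokens) - i), 0, -1):
            key = tuple(tok.lower() for tok, _, _ in tokens[i:i + size])
            label = GAZETTEER.get(key)
            if label:
                start, end = tokens[i][1], tokens[i + size - 1][2]
                entities.append(Entity(text[start:end], label, start, end))
                i += size
                break
        else:
            i += 1  # no span starting here matched: a non-entity token
    return entities
```

Calling `recognize("Acme Corp in Berlin switched from Google Analytics to PlainSignal.")` returns four `Entity` records (Acme Corp, Berlin, Google Analytics, PlainSignal) with their character offsets; every other token is treated as a non-entity. Trying the longest span first keeps multi-word names such as Google Analytics in one piece.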

Common use cases

Entity Recognition is widely used to extract structured insights from textual sources across various industries.

Customer feedback analysis

Identifying product or feature mentions in survey responses; combined with sentiment analysis, this shows how customers feel about each one.

Social media monitoring

Tracking mentions of brand names, competitors, or locations in social posts.
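
Both use cases usually end in the same aggregation: count how often each entity is mentioned and group the users who mention it, which is also how a segment such as "users mentioning a specific product" is built. The sketch below assumes a hypothetical, hand-maintained `PRODUCTS` dictionary of surface forms; a real pipeline would take its entities from an NER model.

```python
import re
from collections import Counter, defaultdict

# Hypothetical dictionary: lowercase surface form -> canonical product name.
PRODUCTS = {"plainsignal": "PlainSignal", "plain signal": "PlainSignal", "ga4": "GA4"}
# Longest forms first so the alternation prefers multi-word names.
PATTERN = re.compile(
    r"\b(" + "|".join(re.escape(p) for p in sorted(PRODUCTS, key=len, reverse=True)) + r")\b",
    re.IGNORECASE,
)


def products_in(text: str) -> set[str]:
    """Return the canonical products mentioned in one piece of text."""
    return {PRODUCTS[m.group(1).lower()] for m in PATTERN.finditer(text)}


def summarize(responses: list[tuple[str, str]]) -> tuple[Counter, dict[str, set[str]]]:
    """Count responses mentioning each product and collect the users who did."""
    counts: Counter = Counter()
    segments: dict[str, set[str]] = defaultdict(set)
    for user_id, text in responses:
        for product in products_in(text):  # a set, so each response counts once
            counts[product] += 1
            segments[product].add(user_id)
    return counts, segments
```

Counting responses rather than raw mentions keeps one enthusiastic (or angry) post from dominating the totals; switch to raw counts if mention volume is what you want to track.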

Implementing Entity Recognition in PlainSignal

PlainSignal does not perform entity recognition itself. Instead, you recognize entities in your own backend or client-side code and attach them to the events that PlainSignal's cookie-free tracking records. Start with the tracking snippet, then send entities as custom parameters.

Integrating the tracking snippet

Embed PlainSignal’s tracking code on your site to start capturing events. Below is the standard snippet:

```html
<link rel="preconnect" href="//eu.plainsignal.com/" crossorigin />
<script defer data-do="yourwebsitedomain.com" data-id="0GQV1xmtzQQ" data-api="//eu.plainsignal.com" src="//cdn.plainsignal.com/plainsignal-min.js"></script>
```

Once embedded, you can send custom event parameters for recognized entities.

Tagging events with entity data

After recognizing entities in your backend or with a client-side library, send them to PlainSignal as custom event parameters.

Custom data attributes

Pass entity values through data attributes in your HTML or as properties of a JavaScript object.

Event parameter naming

Use consistent parameter names, such as entity_name and entity_type, for easy filtering.
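
PlainSignal's exact custom-event interface is not described here, so this sketch stops at preparing the values. It turns one recognized entity into consistently named `entity_name`/`entity_type` parameters and, alternatively, renders them as escaped HTML data attributes. The `TYPE_ALIASES` mapping and the `data-entity-*` attribute names are illustrative assumptions; check PlainSignal's documentation for how custom parameters are actually read.

```python
from html import escape

# Illustrative mapping from labels emitted by different NER tools to one vocabulary.
TYPE_ALIASES = {
    "PERSON": "person", "PER": "person",
    "ORG": "organization", "ORGANIZATION": "organization",
    "LOC": "location", "GPE": "location", "LOCATION": "location",
    "PRODUCT": "product", "CONSUMER_GOOD": "product",
}


def to_event_params(name: str, label: str) -> dict[str, str]:
    """Build consistently named parameters for one recognized entity."""
    return {
        "entity_name": " ".join(name.split()),  # trim and collapse whitespace
        "entity_type": TYPE_ALIASES.get(label.upper(), "other"),
    }


def to_data_attributes(params: dict[str, str]) -> str:
    """Render parameters as escaped HTML data attributes (entity_name -> data-entity-name)."""
    return " ".join(
        f'data-{key.replace("_", "-")}="{escape(value, quote=True)}"'
        for key, value in params.items()
    )
```

Mapping every tool's labels onto one small vocabulary means a filter such as `entity_type = organization` keeps working even if you later switch NER providers.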

Analyzing entity events in PlainSignal

Open the PlainSignal dashboard and navigate to the Events section. Filter by your custom parameters (e.g., entity_name) to view occurrences over time.

Implementing Entity Recognition in GA4

GA4 has no built-in entity recognition, but custom dimensions and the BigQuery export let you attach entity data to events and report on it. The steps below show how to label text events with entity data and analyze the results.

Setting up custom dimensions

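In GA4 Admin, under Custom definitions, create an event-scoped custom dimension (e.g., Entity Name) and set its event parameter to the key you send with entity values, such as entity_name. Create a second one (e.g., Entity Type) for entity_type.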

Using Google Cloud NLP with BigQuery

Export GA4 events to BigQuery, then apply the Google Cloud Natural Language API's entity analysis to text captured in event parameters (GA4 only holds free text that you send as a parameter).

Exporting data

Link your GA4 property to BigQuery and choose daily export, streaming export, or both, to send event data to a dataset.

Processing via Cloud Functions

Trigger a Cloud Function when new export data arrives (or on a schedule) to send the text to the Natural Language API and write the results back.

Storing entity results

Write entity names and types back to BigQuery, or send them to GA4 as events via the Measurement Protocol.
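
The export, processing, and storage steps can be sketched in Python without any Google client libraries. The sketch assumes a row shaped like the GA4 BigQuery export (an `event_params` array of `key`/`value` records), a JSON response shaped like the Natural Language API's `analyzeEntities` output (an `entities` list with `name`, `type`, and `salience`), and the GA4 Measurement Protocol `mp/collect` endpoint. The `feedback_text` parameter, the `entity_detected` event name, and all sample values are hypothetical. The Cloud Function trigger and the actual NLP call are omitted, and the Measurement Protocol request is built but not sent.

```python
import json
import urllib.parse
import urllib.request
from typing import Optional

MP_ENDPOINT = "https://www.google-analytics.com/mp/collect"


def get_string_param(row: dict, key: str) -> Optional[str]:
    """Read a string value from the event_params array of a GA4 export row."""
    for param in row.get("event_params", []):
        if param["key"] == key:
            return param["value"].get("string_value")
    return None


def top_entities(nl_response: dict, limit: int = 3) -> list[dict[str, str]]:
    """Keep the most salient entities from an analyzeEntities-style JSON response."""
    ranked = sorted(nl_response.get("entities", []),
                    key=lambda e: e.get("salience", 0.0), reverse=True)
    return [{"entity_name": e["name"], "entity_type": e["type"].lower()}
            for e in ranked[:limit]]


def build_mp_request(measurement_id: str, api_secret: str, client_id: str,
                     entities: list[dict[str, str]]) -> urllib.request.Request:
    """Prepare (but do not send) one Measurement Protocol event per entity."""
    query = urllib.parse.urlencode({"measurement_id": measurement_id, "api_secret": api_secret})
    body = {
        "client_id": client_id,
        "events": [{"name": "entity_detected", "params": p} for p in entities],
    }
    return urllib.request.Request(
        f"{MP_ENDPOINT}?{query}",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Hypothetical sample data in the shapes described above.
row = {
    "user_pseudo_id": "1234567890.1700000000",
    "event_params": [{"key": "feedback_text",
                      "value": {"string_value": "The PlainSignal export is slow"}}],
}
nl_response = {
    "entities": [
        {"name": "export", "type": "OTHER", "salience": 0.2},
        {"name": "PlainSignal", "type": "ORGANIZATION", "salience": 0.8},
    ],
    "language": "en",
}
text = get_string_param(row, "feedback_text")  # this text would go to the NLP API
request = build_mp_request("G-XXXXXXX", "YOUR_API_SECRET",
                           row["user_pseudo_id"], top_entities(nl_response))
```

Passing `request` to `urllib.request.urlopen` would send it. The sample reuses the row's `user_pseudo_id` as the `client_id`, on the assumption that it holds the web client ID from the export. Keeping only the most salient entities limits the number of events per text.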

Reporting on entities in GA4

Use Explorations or Looker Studio (formerly Data Studio) to include your custom dimensions (Entity Name, Entity Type) in reports and dashboards.

Best Practices and Privacy Considerations

Entity Recognition can surface sensitive information. Follow these best practices to maintain data quality, protect privacy, and optimize performance.

Data quality

Ensure text inputs are clean and normalized to improve recognition accuracy.

Normalization

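Normalize character encoding (for example, to Unicode NFC) and whitespace. Be cautious about lowercasing or stripping punctuation: many NER models rely on capitalization and punctuation as cues, so these steps can lower accuracy.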

Noise filtering

Filter out irrelevant input such as spam and automated system messages, and strip markup such as HTML tags.
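
A minimal cleaning step covering both points might look like the sketch below. It strips HTML tags, decodes character references such as `&amp;`, applies Unicode NFC normalization so that visually identical characters share one encoding, collapses whitespace, and drops short, duplicate, or automated messages. The `SYSTEM_MSG_RE` patterns and the three-character minimum are illustrative assumptions. It deliberately leaves case and punctuation intact, because capitalization is a strong cue for many NER models.

```python
import html
import re
import unicodedata
from typing import Iterable, Iterator

TAG_RE = re.compile(r"<[^>]+>")
# Hypothetical markers of automated messages that should not reach the NER step.
SYSTEM_MSG_RE = re.compile(r"^(auto-reply|out of office|\[system\])", re.IGNORECASE)


def normalize(text: str) -> str:
    """Strip markup and unify encoding and whitespace, keeping case and punctuation."""
    text = TAG_RE.sub(" ", text)  # replace HTML tags with spaces
    text = html.unescape(text)  # decode references such as &amp;
    text = unicodedata.normalize("NFC", text)  # canonical composed form (e + accent -> é)
    return " ".join(text.split())  # trim and collapse whitespace


def clean_inputs(texts: Iterable[str], min_chars: int = 3) -> Iterator[str]:
    """Yield normalized texts, skipping short, duplicate, and system messages."""
    seen: set[str] = set()
    for raw in texts:
        text = normalize(raw)
        if len(text) < min_chars or SYSTEM_MSG_RE.match(text) or text in seen:
            continue
        seen.add(text)
        yield text
```

Deduplicating after normalization means "Hello <b>PlainSignal</b>" and "Hello PlainSignal" count as the same input.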

Privacy and compliance

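Recognized entities can include personally identifiable information (PII), such as people's names or e-mail addresses. Google Analytics policies prohibit sending PII, and privacy laws such as the GDPR regulate its processing, so implement safeguards before text or entities leave your systems.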

Anonymization

Mask sensitive values, or replace them with keyed hashes, before sending text to an NLP service or analytics tool.

Consent management

Obtain user consent before analyzing their text, for example through your consent banner or privacy settings, and honor withdrawals of consent.
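
The sketch below combines both safeguards under stated assumptions. Regular expressions mask e-mail addresses and phone numbers in raw text. Person names are replaced with a keyed hash (HMAC-SHA256), so the same name always maps to the same token, while someone without the key cannot recompute tokens from a list of guessed names. Nothing is returned for users who have not consented. The patterns are intentionally simple, the lowercase `"person"` type label is an assumption, and the `consented` flag stands in for whatever your consent tool records. Pseudonymized values may still count as personal data under laws such as the GDPR.

```python
import hashlib
import hmac
import re
from typing import Optional

# Deliberately simple patterns: they miss some formats and can over-match
# (for example, long digit runs such as dates also look like phone numbers).
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Mask e-mail addresses and phone-like numbers before NLP processing."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)


def pseudonymize(value: str, key: bytes) -> str:
    """Keyed hash: stable per value, but not recomputable without the secret key."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def prepare_entity(name: str, entity_type: str, key: bytes,
                   consented: bool) -> Optional[dict[str, str]]:
    """Return an analytics-safe entity, or None if the user has not consented."""
    if not consented:
        return None
    if entity_type == "person":
        name = pseudonymize(name, key)
    return {"entity_name": name, "entity_type": entity_type}
```

A keyed hash is preferred here over a plain SHA-256 of the name, because names are easy to guess and a plain hash can be reversed by hashing candidate names. Keep the key out of your analytics systems.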

Performance optimization

Balance the accuracy of your entity models with processing time and system resources.

Batch vs. real-time

Use batch processing for large volumes where some delay is acceptable, and real-time processing when you need immediate insights.

Model caching

Load NLP models once and reuse the instances, and cache results for repeated texts, to reduce API calls and latency.
