Published on 2025-06-26T04:23:13Z

What is Event Sampling in Analytics? Examples and Best Practices

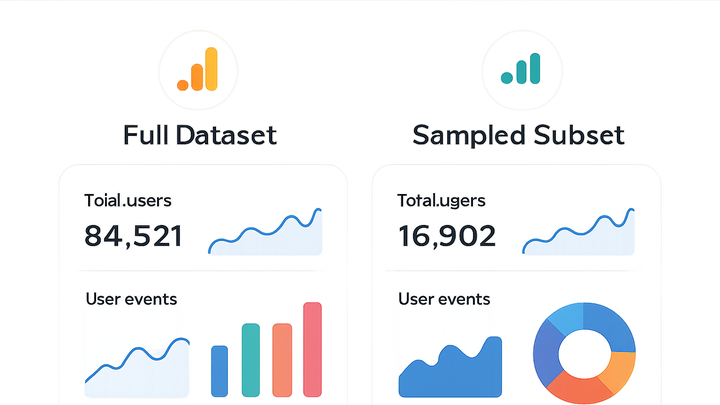

Event sampling is the process of capturing a representative subset of user interaction events rather than recording every single occurrence. In analytics, events include actions such as page views, clicks, form submissions, and video plays. By sampling events, organizations can manage data volume, improve processing performance, and reduce storage and compute costs. Sampling can be applied at different stages, either during data collection or within reporting, and configuring it properly helps keep insights statistically valid. Platforms like Google Analytics 4 (GA4) and PlainSignal illustrate contrasting approaches to event sampling and data collection.

Event sampling

Event sampling is selecting a subset of user interaction events to balance data accuracy, performance, and cost in analytics.

What is Event Sampling?

Event sampling refers to the practice of recording only a portion of all events generated by users, rather than capturing every single event. This approach helps analytics tools handle high-traffic scenarios without overwhelming servers and databases.

Sampling can occur at different stages: either before data is sent (collection-time) or during report generation (reporting-time). Choosing the right sampling method and rate is critical to maintaining statistical reliability while controlling data volume.

- Definition: Capturing only a subset of user interaction events (clicks, form submissions, etc.) to reduce the total volume of data collected and processed.
- Purpose: The primary goals of event sampling are to manage large data volumes, optimize performance, and lower costs for storage and computation.
  - Volume control: By limiting the number of events stored, platforms can keep data volumes manageable.
  - Performance optimization: Less data to process leads to faster report generation and lower server load.
  - Cost efficiency: Reduced storage and processing demands translate into lower infrastructure and SaaS costs.
- Types of sampling: Sampling techniques vary based on when and how events are selected for inclusion in reports.
  - Collection-time sampling: Events are filtered in the browser or tag manager before being sent to the analytics server.
  - Reporting-time sampling: Applied within the analytics engine when querying large datasets, as seen in GA4 exploration reports.
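
Whichever stage the sampling happens at, counts taken from a sample are usually scaled back up before they are reported. The sketch below illustrates that step under the assumption that every event had the same probability of being kept; the function name and the figures are hypothetical and do not describe how any particular platform implements it.

```typescript
// Scale a count observed in a uniformly sampled dataset back up to an
// estimate of the true total. Assumes every event had the same chance
// (`rate`) of being kept.
function estimateTotal(sampledCount: number, rate: number): number {
  if (rate <= 0 || rate > 1) {
    throw new RangeError("rate must be in (0, 1]");
  }
  return sampledCount / rate;
}

// Hypothetical numbers: 1,250 clicks found in a 25% sample.
const estimatedClicks = estimateTotal(1250, 0.25);
console.log(estimatedClicks); // 5000
```

The result is an estimate rather than an exact count, which is why the sampling rate needs to be known wherever the numbers are read.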

Why Event Sampling Matters

While sampling helps control costs and improve performance, it also introduces potential statistical trade-offs. Understanding why and when to sample is key to maintaining data integrity and actionable insights.

- Balancing accuracy and performance: Sampling reduces system load but may introduce sampling error that affects metric accuracy.
  - Accuracy trade-off: Smaller sample sizes can distort trends if not properly sized or randomized.
  - Performance gain: Enables faster query responses and handles spikes in traffic without delays.
- Use cases and limitations: Different scenarios call for different approaches to sampling or unsampled analysis.
  - High-traffic websites: Essential for sites with millions of events to avoid data overload.
  - Critical data analysis: For compliance or in-depth research, unsampled data exports (e.g., via BigQuery) are recommended.
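
To make the accuracy trade-off concrete, the following sketch estimates the sampling error of a rate-style metric such as a conversion rate. It assumes simple random sampling and uses the standard normal approximation for a proportion; the function name and the 5% conversion rate are illustrative assumptions, not figures from any platform.

```typescript
// Approximate 95% margin of error for a proportion (e.g., a conversion rate)
// measured on n randomly sampled events, using the normal approximation.
function marginOfError95(p: number, n: number): number {
  if (n <= 0) throw new RangeError("n must be positive");
  if (p < 0 || p > 1) throw new RangeError("p must be in [0, 1]");
  const z = 1.96; // z-score for a 95% confidence level
  return z * Math.sqrt((p * (1 - p)) / n);
}

// Hypothetical scenario: a 5% conversion rate observed at two sample sizes.
for (const n of [1000, 100000]) {
  const moe = marginOfError95(0.05, n);
  console.log(`n=${n}: 5% +/- ${(moe * 100).toFixed(2)} percentage points`);
}
```

With these inputs the margin shrinks from roughly 1.35 to roughly 0.14 percentage points, showing that a 100-fold larger sample narrows the error by about a factor of ten.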

How Event Sampling Works

Event sampling can be configured based on various criteria and rates. The choice of method impacts both data quality and system performance.

- Sampling criteria: Rules that determine which events are included in the sample.
  - Random sampling: Selects events randomly according to a predefined probability.
  - User-based sampling: Limits events per user or session (e.g., first N events per session).
- Sampling rate configuration: Defines the proportion of total events that are recorded.
  - Fixed rate: A constant percentage of events is sampled consistently over time.
  - Dynamic rate: Adjusts the sampling percentage based on current traffic volumes.
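
The criteria and rate options above can be sketched in a few lines. This is a minimal illustration, not a production sampler: all names are hypothetical, the per-session cap keeps its counters in memory, and the dynamic rate uses one simple rule (target volume divided by observed volume) among many possible ones.

```typescript
// Source of random numbers in [0, 1); injectable so the logic can be tested.
type Rng = () => number;

// Random sampling at a fixed rate: keep each event with probability `rate`.
function keepAtFixedRate(rate: number, rng: Rng = Math.random): boolean {
  return rng() < rate;
}

// User-based sampling as described above: keep only the first
// `maxPerSession` events of each session.
class SessionCapSampler {
  private counts = new Map<string, number>();
  private maxPerSession: number;

  constructor(maxPerSession: number) {
    this.maxPerSession = maxPerSession;
  }

  keep(sessionId: string): boolean {
    const seen = this.counts.get(sessionId) ?? 0;
    if (seen >= this.maxPerSession) return false;
    this.counts.set(sessionId, seen + 1);
    return true;
  }
}

// Dynamic rate: aim for roughly `targetPerMinute` recorded events by lowering
// the rate as traffic grows, never exceeding 100%.
function dynamicRate(eventsLastMinute: number, targetPerMinute: number): number {
  if (eventsLastMinute <= targetPerMinute) return 1;
  return targetPerMinute / eventsLastMinute;
}

// Usage with hypothetical values.
const cap = new SessionCapSampler(2);
console.log(cap.keep("s1"), cap.keep("s1"), cap.keep("s1")); // true true false
console.log(dynamicRate(50000, 10000)); // 0.2
console.log(keepAtFixedRate(dynamicRate(50000, 10000))); // true for about 1 in 5 calls
```

Note that a per-session cap is not a uniform sample: it favors events early in a session, so totals cannot simply be scaled up by a single rate.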

Event Sampling in GA4 and PlainSignal

Here are practical implementations of sampling (and full data collection) in Google Analytics 4 and PlainSignal.

- GA4 sampling behavior: In GA4, sampling is applied automatically in Explorations and custom reports when a query covers more events than the property's quota allows (e.g., 10 million events per query for standard properties). To access raw, unsampled event data, use the BigQuery export feature.
  - Sampling threshold: The default limit for Explorations is 10 million events per query on standard properties; Analytics 360 properties have a higher limit.
  - BigQuery export: Enable the native BigQuery integration to query unsampled, raw event data.
- PlainSignal data collection: PlainSignal is designed for simple, cookie-free analytics without automatic sampling. All events are collected by default. To get started, insert this snippet into your HTML:

      <link rel="preconnect" href="//eu.plainsignal.com/" crossorigin />
      <script defer data-do="yourwebsitedomain.com" data-id="0GQV1xmtzQQ" data-api="//eu.plainsignal.com" src="//cdn.plainsignal.com/plainsignal-min.js"></script>

  For manual sampling, implement client-side logic to send only a portion of events.
  - Manual sampling example: Wrap your tracking calls in a conditional statement to record, for example, 1 in 10 visits.
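
One way to sketch the "1 in 10 visits" idea is to make the sampling decision once per visit and reuse it, so that a visit is either fully recorded or fully skipped. The code below is an assumption-laden illustration: the storage key, function names, and the `send` callback are hypothetical, and it deliberately does not call any PlainSignal API, since the article does not document one.

```typescript
// Minimal subset of the Web Storage API (the browser's sessionStorage
// satisfies this shape).
interface VisitStorage {
  getItem(key: string): string | null;
  setItem(key: string, value: string): void;
}

const SAMPLE_KEY = "visit_sampled"; // hypothetical storage key

// Decide once per visit whether to track, then reuse the stored decision.
function isVisitSampled(
  storage: VisitStorage,
  rate: number = 0.1, // 1 in 10 visits
  rng: () => number = Math.random
): boolean {
  const stored = storage.getItem(SAMPLE_KEY);
  if (stored !== null) return stored === "1";
  const sampled = rng() < rate;
  storage.setItem(SAMPLE_KEY, sampled ? "1" : "0");
  return sampled;
}

// Wrap any tracking call so that it only fires for sampled visits.
// `send` stands in for whatever tracking call your setup exposes.
function trackIfSampled(
  storage: VisitStorage,
  send: () => void,
  rate: number = 0.1,
  rng: () => number = Math.random
): void {
  if (isVisitSampled(storage, rate, rng)) send();
}
```

In a browser, `window.sessionStorage` could be passed as `storage`. Because only about one visit in ten is recorded at this rate, visit-level counts would need to be multiplied by ten to approximate real totals.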

Best Practices and Considerations

Effective sampling requires careful planning, monitoring, and documentation to ensure your insights remain reliable.

- Choose the right sampling rate: Select a rate that balances data accuracy with system performance based on your traffic profile.
  - Pilot testing: Test different sampling rates to measure their impact on key metrics.
- Monitor data quality: Regularly compare sampled data against unsampled benchmarks to detect bias or drift.
- Document your strategy: Record your sampling approach and rationale so all stakeholders understand its limitations.
- Use unsampled data for deep analysis: When precision is critical, switch to raw data exports or full capture methods.
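
The monitoring practice above can be reduced to a simple check: compare each sampled estimate with its unsampled benchmark and flag the metrics that differ by more than a chosen tolerance. The sketch below assumes you already have both numbers for each metric; the names, the 5% tolerance, and the figures are hypothetical.

```typescript
interface MetricCheck {
  name: string;
  sampledEstimate: number; // value derived from sampled data, already scaled up
  benchmark: number;       // value from an unsampled source
}

// Relative difference between a sampled estimate and its benchmark.
function relativeError(sampledEstimate: number, benchmark: number): number {
  if (benchmark === 0) throw new RangeError("benchmark must be non-zero");
  return Math.abs(sampledEstimate - benchmark) / Math.abs(benchmark);
}

// Return the names of metrics that deviate by more than `tolerance`.
function findDriftedMetrics(checks: MetricCheck[], tolerance: number = 0.05): string[] {
  return checks
    .filter((c) => relativeError(c.sampledEstimate, c.benchmark) > tolerance)
    .map((c) => c.name);
}

// Hypothetical figures for illustration only.
const drifted = findDriftedMetrics([
  { name: "pageViews", sampledEstimate: 98000, benchmark: 100000 }, // 2% off
  { name: "signups", sampledEstimate: 880, benchmark: 1000 },       // 12% off
]);
console.log(drifted); // only "signups" exceeds the 5% tolerance
```

Low-volume metrics tend to drift first, so a check like this is a reasonable input when pilot testing different sampling rates.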