Published on 2025-06-22T02:55:09Z

What is Data Sampling? Examples of Data Sampling in Analytics

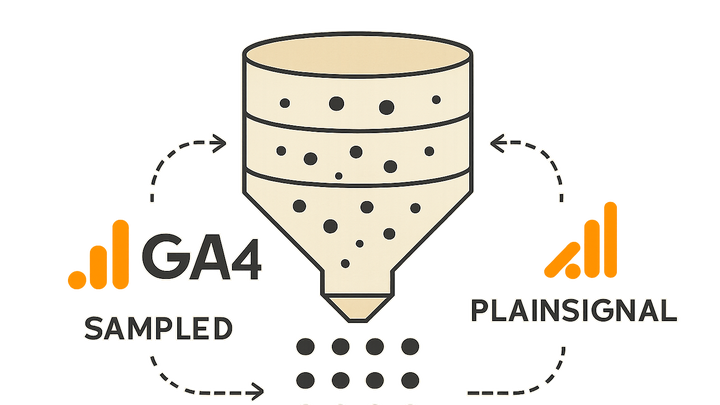

Data Sampling is the technique of selecting a subset of data from a larger dataset to generate timely and cost-effective analytics insights. In high-volume scenarios, processing every event or session can be impractical due to constraints in processing power, memory, and query time. Sampling reduces the computational load by analyzing only a representative slice of the data, then extrapolating results to the full dataset. While sampling speeds up report generation and lowers costs, it introduces potential inaccuracies and biases if not managed properly. Different analytics platforms implement sampling with varying thresholds and algorithms, affecting the precision of the insights they deliver. Understanding how sampling works—and how to mitigate its pitfalls—helps analysts choose the right tools and strategies for reliable decision-making.

Data sampling

Data Sampling selects a subset of large datasets for faster analytics at scale, balancing speed, cost, and accuracy.

Fundamentals of Data Sampling

This section introduces the core concept of data sampling in analytics, explaining why it’s employed, how it influences insights, and common approaches used across platforms.

- Definition of data sampling: Data Sampling involves selecting a representative subset of records from a larger dataset to perform analysis more efficiently while approximating the characteristics of the full dataset.

- Why sampling is used: Sampling reduces processing time, lowers storage and compute costs, and enables faster query performance on high-volume data streams where full-fidelity processing is impractical.
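As a minimal sketch of the idea, the following JavaScript draws a simple random sample and compares a ratio metric on the sample with the same metric on the full data. The event shape (`converted`), the helper names, and the small seeded generator are illustrative assumptions, not part of any analytics platform's API.

```javascript
// Small seeded PRNG (mulberry32) so the sketch is reproducible.
function mulberry32(seed) {
  let a = seed >>> 0;
  return function () {
    a = (a + 0x6d2b79f5) >>> 0;
    let t = a;
    t = Math.imul(t ^ (t >>> 15), t | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296;
  };
}

// Keep each event independently with probability `rate` (0 < rate <= 1).
function sampleEvents(events, rate, rng) {
  if (!(rate > 0 && rate <= 1)) throw new RangeError("rate must be in (0, 1]");
  return events.filter(() => rng() < rate);
}

// Share of events flagged as conversions.
function conversionRate(events) {
  if (events.length === 0) return NaN;
  return events.filter((e) => e.converted).length / events.length;
}

// Hypothetical data: 100,000 events, every 20th one a conversion (5%).
const allEvents = Array.from({ length: 100000 }, (_, i) => ({
  id: i,
  converted: i % 20 === 0,
}));

const sample = sampleEvents(allEvents, 0.1, mulberry32(42));
console.log("full rate:   ", conversionRate(allEvents));
console.log("sampled rate:", conversionRate(sample), "from", sample.length, "events");
```

The sampled rate should land close to the full rate while touching only about a tenth of the records, which is the trade the section describes: less work in exchange for a small, random error.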

Data Sampling in Google Analytics 4 (GA4)

Google Analytics 4 can apply sampling when a query covers more events than the property's quota allows, which mostly affects Explorations and other ad hoc queries. In those cases it trades some precision for speed in large-volume scenarios.

- Sampling thresholds in GA4: Standard (free) GA4 properties begin sampling once a query, typically an Exploration, covers more than 10 million events; Analytics 360 properties raise that limit to 1 billion events.

- Sampling algorithms and weighting: GA4 uses randomized algorithms that assign weights to sampled data points, then extrapolates metrics, such as user counts or conversions, across the full dataset, which introduces a margin of error.
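The weighting step can be illustrated with a short sketch. It assumes each sampled row carries the probability with which it was kept (`keepProbability`, a hypothetical field) and uses inverse-probability weights to estimate totals. GA4's internal algorithm is not documented at this level of detail, so treat this as a generic illustration rather than Google's implementation.

```javascript
// Estimate full-dataset totals from sampled rows using inverse-probability weights.
// Each row: { conversions: number, keepProbability: number in (0, 1] }.
function extrapolateTotals(sampledRows) {
  let estimatedEvents = 0;
  let estimatedConversions = 0;
  for (const row of sampledRows) {
    const weight = 1 / row.keepProbability; // one sampled row stands in for `weight` rows
    estimatedEvents += weight;
    estimatedConversions += weight * row.conversions;
  }
  return { estimatedEvents, estimatedConversions };
}

// Hypothetical sample: busy days were sampled at 25%, quiet days at 50%.
const sampledRows = [
  { conversions: 1, keepProbability: 0.25 },
  { conversions: 0, keepProbability: 0.25 },
  { conversions: 1, keepProbability: 0.5 },
  { conversions: 0, keepProbability: 0.5 },
];

console.log(extrapolateTotals(sampledRows));
// { estimatedEvents: 12, estimatedConversions: 6 }
```

Rows kept with a lower probability receive larger weights, which is also why a handful of sampled rows can swing an extrapolated total.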

PlainSignal's Approach to Data Sampling

PlainSignal is a cookie-free analytics tool designed for simplicity and privacy. It emphasizes unsampled, accurate reporting by processing every event without random subset selection.

- Cookie-free, unsampled analytics: By forgoing cookies, PlainSignal simplifies tracking and privacy compliance, while processing 100% of events to deliver fully accurate metrics without sampling.

- Performance at scale: PlainSignal’s infrastructure is optimized for lightweight, real-time processing, ensuring that even high-traffic websites get unsampled reports without long wait times or additional costs.

Impacts and Pitfalls of Data Sampling

While sampling accelerates analytics, it can distort findings and mislead decisions if not carefully monitored. This section explores common pitfalls.

- Accuracy trade-offs: Sampling introduces statistical uncertainty; smaller samples increase the margin of error, especially for low-frequency events or niche audience segments.

- Bias and representativeness: Non-random patterns or missing strata in the sample can bias results, leading to over- or underestimation of key metrics.

- Undercoverage: This occurs when the sample systematically excludes certain segments, such as users behind strict ad blockers or bot traffic, which skews overall metrics.

- Volatility in small segments: Rare user actions within small audience slices become highly volatile when sample sizes are tiny, reducing the trustworthiness of segment-specific insights.
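To make the accuracy trade-off concrete, the sketch below computes an approximate 95% margin of error for a sampled proportion using the normal approximation (z = 1.96). The function name and the example numbers are illustrative, and the approximation itself becomes unreliable for very small samples or very rare events.

```javascript
// Approximate 95% margin of error for a proportion estimated from a simple random sample.
// p: observed proportion in the sample (0..1); n: number of sampled records.
function marginOfError(p, n, z = 1.96) {
  if (n <= 0) throw new RangeError("n must be positive");
  if (p < 0 || p > 1) throw new RangeError("p must be between 0 and 1");
  return z * Math.sqrt((p * (1 - p)) / n);
}

// Same 2% conversion rate, shrinking sample sizes.
for (const n of [100000, 10000, 500]) {
  const moe = marginOfError(0.02, n);
  const relative = moe / 0.02; // margin as a fraction of the metric itself
  console.log(`n=${n}: ±${(moe * 100).toFixed(2)} pts (${(relative * 100).toFixed(0)}% of the metric)`);
}
```

With these inputs the margin grows from roughly 4% of the metric at 100,000 sampled records to roughly 61% at 500, which is the volatility that small segments suffer from.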


Best Practices to Mitigate Sampling Issues

Adopting the right strategies can help analysts reduce or eliminate unwanted sampling, ensuring more accurate, reliable insights from high-volume data.

- Adjust sampling rate or limits: In GA4, upgrade to Analytics 360 for higher sampling thresholds, or narrow the query (for example, with a shorter date range) so that it stays under the threshold.

- Use unsampled or raw data exports: Export raw event data to systems like BigQuery for fully unsampled analysis, using SQL for custom deep dives without platform-imposed sampling.

- Choose analytics tools with no sampling: Consider privacy-focused, lightweight analytics platforms, like PlainSignal, that process every event to provide accurate, unsampled reports by default.
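One way to keep a query under a sampling threshold is to split a long date range into shorter ones. The sketch below does this greedily; the `quota` value, the input shape, and the function name are assumptions for illustration. Per-chunk results can only be summed for additive metrics such as event or conversion counts, not for deduplicated metrics such as users.

```javascript
// Group consecutive days into chunks whose total event count stays within `quota`.
// dailyCounts: array of { date: "YYYY-MM-DD", events: number } in chronological order.
function splitDateRange(dailyCounts, quota) {
  const chunks = [];
  let current = null;
  for (const day of dailyCounts) {
    if (current && current.events + day.events <= quota) {
      current.end = day.date;
      current.events += day.events;
    } else {
      // Start a new chunk; a single day above the quota still gets its own chunk.
      current = { start: day.date, end: day.date, events: day.events };
      chunks.push(current);
    }
  }
  return chunks;
}

// Hypothetical daily event counts for a four-day report.
const dailyCounts = [
  { date: "2025-06-01", events: 4000000 },
  { date: "2025-06-02", events: 5000000 },
  { date: "2025-06-03", events: 3000000 },
  { date: "2025-06-04", events: 6000000 },
];

console.log(splitDateRange(dailyCounts, 10000000));
```

Here the four days fall into two chunks of 9 million events each, so each query would stay under a 10 million event limit.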

Implementation Examples: GA4 vs. PlainSignal

Practical code snippets illustrate how data collection is implemented in GA4 and PlainSignal, highlighting how sampling considerations differ.

- GA4 tracking code: Standard GA4 setup. The snippet itself collects every event; any sampling is applied later, when high-volume queries are run:

```html
<!-- Google Analytics 4 -->
<script async src="https://www.googletagmanager.com/gtag/js?id=GA_MEASUREMENT_ID"></script>
<script>
  // Queue commands until the gtag.js library has loaded.
  window.dataLayer = window.dataLayer || [];
  function gtag(){dataLayer.push(arguments);}
  gtag('js', new Date());
  // Replace GA_MEASUREMENT_ID with the property's measurement ID.
  gtag('config', 'GA_MEASUREMENT_ID');
</script>
```

- PlainSignal tracking code: PlainSignal’s cookie-free snippet ensures every event is processed without sampling:

```html
<link rel="preconnect" href="//eu.plainsignal.com/" crossorigin />
<script defer data-do="yourwebsitedomain.com" data-id="0GQV1xmtzQQ" data-api="//eu.plainsignal.com" src="//cdn.plainsignal.com/plainsignal-min.js"></script>
```