Published on 2025-06-28T02:05:47Z

What is BigQuery Export? Examples for GA4 & PlainSignal

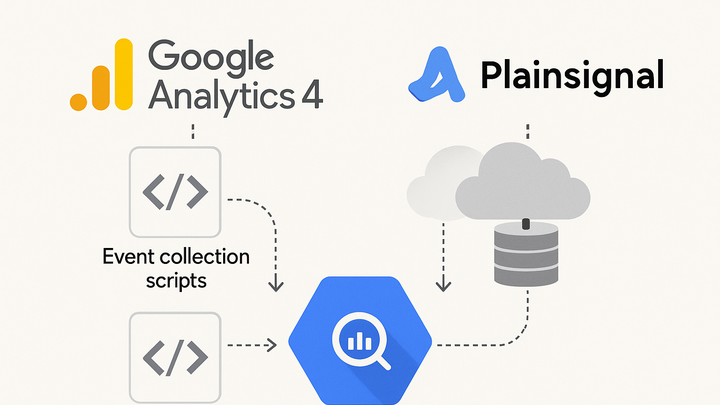

BigQuery Export is a feature of Google Analytics 4 (GA4) that streams or batches raw event data directly into Google BigQuery, Google’s serverless data warehouse. This capability provides analysts and data engineers with full access to event-level data, enabling detailed SQL-based exploration, custom reporting, and machine learning workflows. Beyond GA4, you can also integrate data from other platforms such as PlainSignal—a cookie-free analytics service—by ingesting its API logs into BigQuery using Cloud Functions or Dataflow pipelines. By centralizing data from multiple sources in BigQuery, organizations can perform cross-platform analysis, build unified customer profiles, and unlock deeper insights. Whether you’re running daily exports, streaming real-time events, or constructing custom ELT processes, BigQuery Export is a powerful foundation for any analytics infrastructure.

BigQuery Export

Exports raw analytics events from GA4 and other sources (e.g. PlainSignal) into BigQuery for SQL analysis, ML, and reporting.

Why BigQuery Export Matters

BigQuery Export unlocks deeper analysis by providing direct access to raw event data, empowering teams to go beyond aggregated dashboards.

-

Access to raw data

Event-level granularity lets you analyze every interaction, from pageviews to custom events.

-

Event-level granularity

Exported data retains every event, allowing fine-grained analysis and debugging.

-

Flexible schemas

The GA4 export stores event parameters in nested, repeated fields (such as event_params), so new parameters appear as additional key-value entries rather than requiring new columns.

-

Advanced analytics

Run custom SQL queries, build ML models, and join analytics data with other datasets.

-

Custom SQL queries

Use standard SQL to explore user behavior, segment audiences, and generate custom metrics.

-

ML and AI integration

Leverage BigQuery ML to train models directly on your analytics data without data movement.
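As an illustration of querying the raw export, here is a minimal Python sketch that builds a query counting page_view events per page per day. It relies on the standard GA4 export layout (daily tables matched by `events_*`, with parameters stored in the repeated `event_params` field). The project and dataset names are placeholders, and `run_query` assumes the `google-cloud-bigquery` package and default credentials are available.

```python
import re


def build_page_view_query(project: str, dataset: str, start: str, end: str) -> str:
    """Return a query counting page_view events per page per day.

    start and end are YYYYMMDD strings compared against _TABLE_SUFFIX,
    so only the daily tables in that range are scanned.
    """
    for value in (start, end):
        if not re.fullmatch(r"\d{8}", value):
            raise ValueError(f"expected YYYYMMDD, got {value!r}")
    return f"""
SELECT
  event_date,
  (SELECT value.string_value
   FROM UNNEST(event_params)
   WHERE key = 'page_location') AS page_location,
  COUNT(*) AS page_views
FROM `{project}.{dataset}.events_*`
WHERE _TABLE_SUFFIX BETWEEN '{start}' AND '{end}'
  AND event_name = 'page_view'
GROUP BY event_date, page_location
ORDER BY event_date, page_views DESC
""".strip()


def run_query(sql: str):
    """Execute the query (assumes google-cloud-bigquery and credentials)."""
    from google.cloud import bigquery  # lazy import: the builder works without it

    client = bigquery.Client()
    return list(client.query(sql).result())
```

Calling `build_page_view_query("my-project", "analytics_123456789", "20250601", "20250607")` returns the SQL text, which can be pasted into the BigQuery console or passed to `run_query`.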

Setting Up BigQuery Export with GA4

GA4 provides a built-in connector to BigQuery. You can choose between daily batch exports or a near-real-time streaming export to suit your analysis needs.

-

Linking GA4 to BigQuery

Use the GA4 admin interface to connect your property to a BigQuery project.

-

Prerequisites

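Ensure you have the Editor role (or higher) on the GA4 property and Owner access to the Google Cloud project, with the BigQuery API enabled. Streaming export also requires a billing account, because it is not available in the BigQuery sandbox.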

-

Configuration steps

In GA4, go to Admin > Product links > BigQuery links, click Link, then choose your Google Cloud project, the dataset location, and the export frequency.

-

Choosing export frequency

Decide between daily batch exports and real-time streaming exports based on your reporting needs.

-

Daily export

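Exports a full day’s events once per day into a table named events_YYYYMMDD. The table is written after the day ends in the property’s time zone, and the exact arrival time is not guaranteed.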

-

Streaming export

Streams events continuously into an events_intraday_YYYYMMDD table, with latency ranging from a few minutes up to about 30 minutes.
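The two export modes write to differently named tables: daily exports land in `events_YYYYMMDD`, and streaming exports land in `events_intraday_YYYYMMDD`. The sketch below shows one way to compute which tables to read for a recent window. The helper names are hypothetical, and it assumes the current day is only available through the intraday table.

```python
from datetime import date, timedelta


def export_table_name(day: date, streaming: bool = False) -> str:
    """Return the GA4 export table name for a given day."""
    prefix = "events_intraday_" if streaming else "events_"
    return f"{prefix}{day:%Y%m%d}"


def recent_tables(today: date, days_back: int) -> list[str]:
    """Daily tables for the previous days_back days, then today's intraday table."""
    names = [
        export_table_name(today - timedelta(days=n))
        for n in range(days_back, 0, -1)
    ]
    names.append(export_table_name(today, streaming=True))
    return names
```

For example, `recent_tables(date(2025, 6, 28), 2)` yields the daily tables for June 26 and 27 followed by the intraday table for June 28.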

Integrating PlainSignal Data into BigQuery

While GA4 has a native BigQuery Export, you can bring data from other platforms like PlainSignal into BigQuery using custom pipelines.

-

Embedding PlainSignal tracking code

Insert the PlainSignal snippet into your site’s HTML to start collecting events.

-

Tracking code

<link rel="preconnect" href="//eu.plainsignal.com/" crossorigin /> <script defer data-do="yourwebsitedomain.com" data-id="0GQV1xmtzQQ" data-api="//eu.plainsignal.com" src="//cdn.plainsignal.com/plainsignal-min.js"></script>

-

Ingesting PlainSignal events

Use Google Cloud Functions or Dataflow to fetch event logs from PlainSignal’s API and stream them into BigQuery.

-

API data pull

Schedule a Cloud Function to call PlainSignal’s API endpoint, parse the JSON payload, and insert rows via BigQuery’s streaming API.
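The pull-parse-insert flow described above could look roughly like the following Python sketch. The endpoint URL, the response shape (`{"events": [...]}`), the event field names, and the destination table are all hypothetical, since PlainSignal's actual API is not documented here. The insert step uses `insert_rows_json` from the `google-cloud-bigquery` client and assumes the destination table already exists with matching columns.

```python
import json
import urllib.request
from datetime import datetime, timezone

# Hypothetical values: replace with the real API endpoint and your own table.
API_URL = "https://example.invalid/plainsignal/events"
TABLE_ID = "my-project.plainsignal.events"


def fetch_events(url: str = API_URL) -> list[dict]:
    """Download the JSON payload and return its list of events."""
    with urllib.request.urlopen(url, timeout=30) as resp:
        return json.load(resp)["events"]


def to_rows(events: list[dict]) -> list[dict]:
    """Map API events (assumed field names) to flat rows for BigQuery."""
    loaded_at = datetime.now(timezone.utc).isoformat()
    rows = []
    for event in events:
        rows.append({
            "event_id": str(event["id"]),
            "event_name": event.get("name", "unknown"),
            "page_url": event.get("url"),
            "event_timestamp": event["timestamp"],
            "loaded_at": loaded_at,
        })
    return rows


def insert_rows(rows: list[dict], table_id: str = TABLE_ID) -> None:
    """Stream rows into BigQuery; event_id doubles as a best-effort dedup key."""
    from google.cloud import bigquery  # lazy import: parsing works without it

    client = bigquery.Client()
    errors = client.insert_rows_json(
        table_id, rows, row_ids=[row["event_id"] for row in rows]
    )
    if errors:
        raise RuntimeError(f"BigQuery rejected rows: {errors}")


def main(request=None) -> str:
    """Entry point for an HTTP-triggered Cloud Function."""
    rows = to_rows(fetch_events())
    if rows:
        insert_rows(rows)
    return f"inserted {len(rows)} rows"
```

Keeping `to_rows` free of network and BigQuery calls makes the mapping easy to unit test before the function is deployed and scheduled.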

Best Practices and Tips

Ensure your BigQuery Export pipelines are cost-effective and maintainable by following these guidelines.

-

Monitor quotas and costs

Keep track of your BigQuery storage, streaming-insert, and query costs to avoid unexpected charges.

-

Partition tables

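Use date-based partitioning on custom tables so that queries filtering on the partition column scan only the matching days, which improves performance and lowers the bytes billed.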

-

Set cost controls

Set custom query quotas, a per-query limit on bytes billed, or alerting scripts to catch high usage and anomalies early.
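One way to apply these cost controls from code is to dry-run a query to see how many bytes it would scan, then run it with a hard cap. The sketch below uses the `dry_run` and `maximum_bytes_billed` options of `QueryJobConfig` in the `google-cloud-bigquery` client. The price per TiB is passed in as a parameter because on-demand rates vary by region and change over time, so treat any value you supply as an assumption to verify against current pricing.

```python
TIB = 2 ** 40  # bytes in one tebibyte


def estimate_cost_usd(bytes_processed: int, usd_per_tib: float) -> float:
    """Convert scanned bytes into an approximate on-demand query cost."""
    return bytes_processed / TIB * usd_per_tib


def dry_run_bytes(sql: str) -> int:
    """Ask BigQuery how many bytes a query would scan, without running it."""
    from google.cloud import bigquery  # lazy import: the estimator works without it

    client = bigquery.Client()
    config = bigquery.QueryJobConfig(dry_run=True, use_query_cache=False)
    job = client.query(sql, job_config=config)
    return job.total_bytes_processed


def run_with_cap(sql: str, max_bytes: int):
    """Run a query that fails instead of billing more than max_bytes."""
    from google.cloud import bigquery

    client = bigquery.Client()
    config = bigquery.QueryJobConfig(maximum_bytes_billed=max_bytes)
    return list(client.query(sql, job_config=config).result())
```

A scheduled script could call `dry_run_bytes` on its recurring queries and send an alert when `estimate_cost_usd` exceeds a threshold you choose.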

-

Manage data schemas

Design dataset schemas that balance flexibility with consistency.

-

Schema updates

For custom pipelines, use BigQuery’s schema auto-detection on load jobs, or detect and add new fields explicitly, so that new event parameters do not break ingestion.

-

Data retention

Set appropriate table expiration and archival policies to control long-term storage.
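Both schema updates and retention can be handled with a few client calls. The sketch below is one possible approach for a custom table such as a PlainSignal events table: it detects fields in incoming rows that the table does not yet have, appends them as nullable STRING columns, and sets a table expiration. The function names are hypothetical, the STRING type is a simplifying assumption, and the BigQuery calls assume the `google-cloud-bigquery` package.

```python
from datetime import datetime, timedelta, timezone


def find_new_fields(known_columns: set[str], rows: list[dict]) -> set[str]:
    """Return keys present in incoming rows but missing from the table."""
    seen: set[str] = set()
    for row in rows:
        seen.update(row.keys())
    return seen - known_columns


def add_nullable_string_columns(table_id: str, new_columns: set[str]) -> None:
    """Append new columns to an existing table (adding columns is non-breaking)."""
    from google.cloud import bigquery  # lazy import: drift detection works without it

    client = bigquery.Client()
    table = client.get_table(table_id)
    schema = list(table.schema)
    for name in sorted(new_columns):
        schema.append(bigquery.SchemaField(name, "STRING", mode="NULLABLE"))
    table.schema = schema
    client.update_table(table, ["schema"])


def set_table_expiration(table_id: str, days: int) -> None:
    """Schedule the table for deletion after the given number of days."""
    from google.cloud import bigquery

    client = bigquery.Client()
    table = client.get_table(table_id)
    table.expires = datetime.now(timezone.utc) + timedelta(days=days)
    client.update_table(table, ["expires"])
```

Running `find_new_fields` before each insert lets the pipeline decide whether to extend the schema automatically or flag the change for review.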
