Published on 2025-06-26T04:47:53Z

What is Batch Processing? Examples in Web Analytics

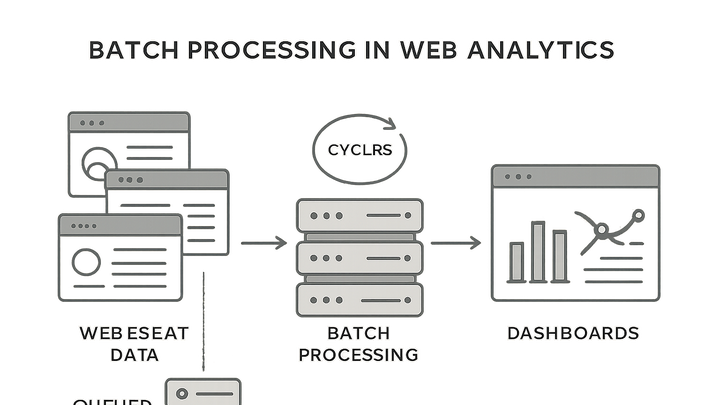

Batch processing in web analytics is the collection, aggregation, and processing of large volumes of event data at scheduled intervals rather than continuously. Batch jobs typically run every few minutes, hours, or days to transform raw tracking logs into structured reports. This approach makes efficient use of compute resources, allows complex transformations, and reduces operational overhead compared with real-time processing. The trade-off is latency between data collection and availability, which may not suit use cases that need immediate insights. Analytics platforms such as PlainSignal and Google Analytics 4 (GA4) use batch processing to handle high-throughput tracking, with automated pipelines designed to keep data accurate and consistent. Understanding batch processing is essential for designing scalable, cost-effective analytics pipelines.

Batch processing

Batch processing collects and processes web analytics data in scheduled groups for efficient, large-scale reporting.

Understanding Batch Processing

This section introduces the core concept of batch processing, contrasting it with real-time streaming to highlight its unique characteristics and use cases in web analytics.

-

Definition of batch processing

Batch processing involves collecting events or log data over a fixed period and then processing the entire collection together. This method prioritizes throughput and resource efficiency over immediate data availability.
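As a rough illustration of this definition, the sketch below groups events into fixed time windows and then processes each window as a whole. The event shape (`ts`, `path`), the one-hour window, and the function names are assumptions made for the example, not part of any particular platform.

```python
from collections import defaultdict
from datetime import datetime, timezone

WINDOW_SECONDS = 3600  # assumed batch interval: one hour


def window_start(ts: datetime, window_seconds: int = WINDOW_SECONDS) -> datetime:
    """Round a timestamp down to the start of its batch window (UTC)."""
    epoch = int(ts.timestamp())
    return datetime.fromtimestamp(epoch - epoch % window_seconds, tz=timezone.utc)


def group_into_batches(events, window_seconds: int = WINDOW_SECONDS):
    """Collect events into per-window lists so each window is processed together."""
    batches = defaultdict(list)
    for event in events:
        batches[window_start(event["ts"], window_seconds)].append(event)
    return dict(batches)


def process_batch(events):
    """Process one whole window at once: here, count page views per path."""
    counts = defaultdict(int)
    for event in events:
        counts[event["path"]] += 1
    return dict(counts)


if __name__ == "__main__":
    sample = [
        {"ts": datetime(2025, 6, 26, 4, 5, tzinfo=timezone.utc), "path": "/"},
        {"ts": datetime(2025, 6, 26, 4, 40, tzinfo=timezone.utc), "path": "/pricing"},
        {"ts": datetime(2025, 6, 26, 5, 10, tzinfo=timezone.utc), "path": "/"},
    ]
    for start, batch in sorted(group_into_batches(sample).items()):
        print(start.isoformat(), process_batch(batch))
```

Nothing is reported for a window until the whole window has been collected, which is where the throughput-for-freshness trade-off comes from.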

-

Batch vs real-time processing

Whereas batch processing runs jobs on accumulated data at scheduled intervals, real-time (stream) processing handles each event as it occurs, delivering instant insights.

-

Latency

Batch processing introduces latency between an event and its appearance in reports, roughly up to one batch interval plus the job's runtime, while streaming keeps this delay to a minimum.

-

Complexity

Batch pipelines are generally simpler to build and maintain than continuous streaming systems.

-

Resource utilization

Batch processing can reduce resource usage by handling large volumes in bulk, which spreads the fixed overhead of per-event operations across many events.
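To make the latency trade-off concrete, the following sketch estimates how stale a report can be under a simple model. It assumes that the job starts as soon as a window closes, that events arrive uniformly within the window, and that results become visible only when the job finishes. The function name is hypothetical.

```python
from datetime import timedelta


def freshness_bounds(interval: timedelta, job_runtime: timedelta):
    """Return (best, average, worst) delay between an event and its report.

    Assumed model: the job starts when the window closes and publishes
    results only once it has finished.
    """
    best = job_runtime                   # event arrived just before the window closed
    average = interval / 2 + job_runtime  # uniform arrivals within the window
    worst = interval + job_runtime       # event arrived just after the window opened
    return best, average, worst


if __name__ == "__main__":
    best, average, worst = freshness_bounds(timedelta(hours=1), timedelta(minutes=10))
    print(best, average, worst)
```

Under these assumptions, an hourly batch with a ten-minute job yields delays of 10, 40, and 70 minutes for the best, average, and worst case.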

Batch Processing in Analytics Platforms

Explore how modern analytics tools implement batch pipelines to collect, transform, and load data, ensuring scalability and reliability for high-traffic websites.

-

Data collection and aggregation

Platforms like PlainSignal and GA4 gather raw event data via tracking scripts or APIs and buffer it in storage before the scheduled processing job.

-

Data transformation and loading

During a batch run, raw events are cleaned, enriched, and aggregated into structured tables or reports, which are then loaded into dashboards or data warehouses.
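The clean, enrich, aggregate, and load steps described above can be sketched as a small pipeline. This is an illustrative example only: the event fields, the simplistic bot filter, and the `daily_pageviews` table are assumptions, and an in-memory SQLite database stands in for a real data warehouse.

```python
import sqlite3
from collections import Counter

BOT_MARKERS = ("bot", "crawler", "spider")  # assumed, deliberately simplistic filter


def clean(raw_events):
    """Drop malformed events and obvious bot traffic."""
    cleaned = []
    for event in raw_events:
        if not event.get("path") or not event.get("ts"):
            continue  # missing required fields
        user_agent = event.get("user_agent", "").lower()
        if any(marker in user_agent for marker in BOT_MARKERS):
            continue
        cleaned.append(event)
    return cleaned


def enrich(events):
    """Add a derived 'day' field (YYYY-MM-DD) taken from the ISO timestamp."""
    return [{**event, "day": event["ts"][:10]} for event in events]


def aggregate(events):
    """Count page views per (day, path)."""
    return Counter((event["day"], event["path"]) for event in events)


def load(conn, counts):
    """Write aggregates to the reporting table, replacing rows from earlier runs."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS daily_pageviews ("
        "day TEXT, path TEXT, views INTEGER, PRIMARY KEY (day, path))"
    )
    conn.executemany(
        "INSERT OR REPLACE INTO daily_pageviews VALUES (?, ?, ?)",
        [(day, path, views) for (day, path), views in counts.items()],
    )
    conn.commit()


def run_batch(conn, raw_events):
    """One batch run: clean, enrich, aggregate, then load."""
    load(conn, aggregate(enrich(clean(raw_events))))
```

Because `load` replaces rows keyed by day and path, running the same batch twice leaves the table unchanged, which makes reruns safe in this sketch.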

Benefits and Limitations

Identify the trade-offs of batch processing, understanding when its advantages outweigh its drawbacks for various analytics scenarios.

-

Benefits

Batch processing delivers predictable performance and cost efficiency for large-scale analytics workloads.

-

Cost efficiency

Bulk operations reduce compute overhead, lowering infrastructure costs.

-

Reliability

Isolated batch jobs simplify error handling and retries compared to continuous streams.

-

Complex transformations

Batch jobs allow extensive data enrichment and complex calculations that might be infeasible in real time.
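The reliability benefit can be illustrated with a retry wrapper around a single, isolated batch job. This is a minimal sketch that assumes the job is idempotent, meaning a rerun for the same batch overwrites its earlier output. The names `run_with_retries`, `job`, and `batch_id` are hypothetical.

```python
import time


def run_with_retries(job, batch_id, max_attempts=3, base_delay=1.0, sleep=time.sleep):
    """Run job(batch_id), retrying with exponential backoff on failure.

    Assumes the job is idempotent, so a rerun for the same batch_id
    does not duplicate data. The last failure is re-raised.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return job(batch_id)
        except Exception:
            if attempt == max_attempts:
                raise
            # Wait 1x, 2x, 4x ... the base delay before the next attempt.
            sleep(base_delay * 2 ** (attempt - 1))
```

The `sleep` parameter is injectable so the backoff can be tested without waiting. In a streaming system, the equivalent logic also has to deal with event ordering and partial state, which is part of why batch retries tend to be simpler.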

-

Limitations

Despite its strengths, batch processing may not suit scenarios requiring immediate data updates.

-

Data freshness

Built-in latency delays insights until the next scheduled run completes.

-

Batch failures

Errors in a batch job can hold up an entire run, affecting large data windows.
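One common way to limit the impact of a failed run is to split it into smaller partitions, for example one per hour, and track which ones succeeded so that only the failed partitions are rerun. The sketch below shows the idea under that assumption. The function and the partition keys are hypothetical.

```python
def run_partitions(partitions, process, completed=None):
    """Process each partition independently and return (completed, failed).

    'completed' is the set of partition keys finished in earlier attempts.
    Those are skipped, so a rerun only touches what previously failed.
    """
    completed = set(completed or ())
    failed = {}
    for key in partitions:
        if key in completed:
            continue
        try:
            process(key)
            completed.add(key)
        except Exception as exc:
            # Record the error and keep going with the remaining partitions.
            failed[key] = repr(exc)
    return completed, failed
```

With hourly partitions, a bad record in one hour delays that hour's data only, instead of holding up the whole day.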

Implementing Batch Processing with PlainSignal and GA4

The following examples show how to set up batch processing workflows using PlainSignal’s cookie-free analytics and GA4’s data import features.

-

PlainSignal batch setup

PlainSignal buffers events client-side and sends them in batched requests to its API. Include the tracking code in your site header:

<link rel="preconnect" href="//eu.plainsignal.com/" crossorigin />
<script defer data-do="yourwebsitedomain.com" data-id="0GQV1xmtzQQ" data-api="//eu.plainsignal.com" src="//cdn.plainsignal.com/plainsignal-min.js"></script>

Events are queued and processed in periodic batches, ensuring lightweight, cookie-free tracking with minimal server load.

-

GA4 offline data import

Google Analytics 4 supports batch uploads in two ways. The Measurement Protocol accepts multiple events in a single HTTP request (up to 25 per request), and the GA4 Data Import feature ingests CSV files. You can prepare CSV files of offline events and schedule daily imports to enrich your analytics reports.
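For the Measurement Protocol route, a batch uploader mostly needs to group events by client and respect the per-request event limit. The sketch below only builds the JSON payloads and does not send anything. The input record shape (`client_id`, `name`, `params`) and the function name are assumptions for illustration. Each payload would then be POSTed as JSON to `https://www.google-analytics.com/mp/collect` with your own `measurement_id` and `api_secret` as query parameters.

```python
import json
from collections import defaultdict

MAX_EVENTS_PER_REQUEST = 25  # Measurement Protocol limit on events per request


def build_payloads(offline_events):
    """Group events by client_id and split each group into chunks of at most 25.

    Each input record is assumed to look like:
    {"client_id": "...", "name": "purchase", "params": {...}}
    """
    by_client = defaultdict(list)
    for record in offline_events:
        by_client[record["client_id"]].append(
            {"name": record["name"], "params": record.get("params", {})}
        )

    payloads = []
    for client_id, events in by_client.items():
        for start in range(0, len(events), MAX_EVENTS_PER_REQUEST):
            chunk = events[start:start + MAX_EVENTS_PER_REQUEST]
            payloads.append({"client_id": client_id, "events": chunk})
    return payloads


if __name__ == "__main__":
    sample = [
        {"client_id": "123.456", "name": "offline_purchase", "params": {"value": 19.99}},
        {"client_id": "123.456", "name": "offline_refund"},
    ]
    for payload in build_payloads(sample):
        print(json.dumps(payload))
```

Events are grouped by client because a single Measurement Protocol request carries one `client_id` for all the events it contains.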