Published on 2025-06-22T07:38:01Z

What is Event Batching? Examples in Analytics

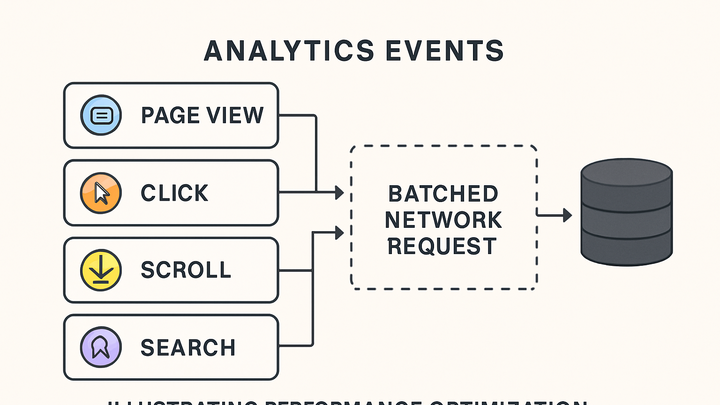

Event batching is a technique in analytics for optimizing data collection and transmission. Instead of sending each user interaction (such as a page view, click, or form submission) as an individual HTTP request, events are temporarily stored in a buffer and released as a single payload based on configurable criteria such as time interval, event count, or payload size. This practice reduces network overhead and improves page performance, especially on mobile devices or slow networks. Analytics providers like Google Analytics 4 (GA4) and privacy-focused solutions like PlainSignal support batching to keep data collection both accurate and efficient. Understanding how event batching works, its trade-offs, and implementation strategies can help analysts and engineers design more resilient and performant tracking systems.

Event batching

Aggregating multiple analytics events into single requests to optimize network performance and reduce bandwidth usage.

Overview of Event Batching

Event batching is the process of collecting multiple user interactions or tracking events and sending them together in a single network request rather than one at a time. This approach reduces the number of HTTP requests, cuts per-request overhead, and conserves bandwidth. It’s widely used in web analytics to manage high event volumes without impacting page performance. Batching also helps maintain data consistency by grouping related events. Many analytics platforms support configurable batching thresholds to balance real-time insights against performance.

-

Definition of event batching

Combining several analytics events into a single payload to minimize network overhead.

-

Key benefits

Reduces the number of HTTP calls, improves page load speed, and conserves mobile data.

-

Reduced HTTP requests

Fewer network calls mean less connection overhead on the client and less load on the server.

-

Improved page performance

Fewer requests free up browser resources, which keeps the page responsive for the user.

-

Bandwidth efficiency

Sending events together avoids repeating headers and connection setup for every event, which saves data on mobile or otherwise limited connections.

-

Trade-offs and considerations

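While batching improves performance, it can introduce slight delays in data availability and complicate debugging of individual events. Events still sitting in the buffer can also be lost if the page closes before the batch is flushed.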

Mechanisms of Event Batching

Event batching typically relies on triggers based on event count, time intervals, or payload size. Clients accumulate events until a threshold is met (e.g., a set number of events or a time delay), then dispatch the batch. On the server side, some systems re-batch or buffer events for processing. Batching strategies must balance timely data delivery against performance overhead. Additionally, web pages flush any remaining events when the page is hidden or closed, typically by listening for the visibilitychange or pagehide event (the older unload event does not fire reliably, particularly on mobile browsers) and sending the final batch with sendBeacon.
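
As a rough sketch of the close-of-page flush described above, the helper below calls a flush callback whenever the page becomes hidden. `PageLike` and `flushOnHide` are hypothetical names introduced for illustration; in a browser you would pass `document`, whose `visibilitychange` event and `visibilityState` property are standard. The callback is assumed to hand the buffered events to an unload-safe transport such as sendBeacon.

```typescript
// Minimal shape of the browser `document` that this sketch relies on.
interface PageLike {
  visibilityState: string;
  addEventListener(type: string, listener: () => void): void;
}

// Registers a listener that calls `flush` every time the page becomes hidden
// (tab switch, navigation away, or close).
function flushOnHide(page: PageLike, flush: () => void): void {
  page.addEventListener("visibilitychange", () => {
    if (page.visibilityState === "hidden") {
      flush();
    }
  });
}

// Browser usage, assuming a hypothetical `batcher` object with a flush method:
//   flushOnHide(document, () => batcher.flush());
```

Taking the page object as a parameter, rather than reading the global `document`, keeps the helper easy to exercise outside a browser.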

-

Client-side batching strategies

Threshold triggers on the client determine when to flush a batch. Common strategies include:

-

Count-based

Flush when a specified number of events is reached.

-

Time-based

Flush events at regular intervals (e.g., every 5 seconds).

-

Size-based

Flush when the payload size exceeds a limit.
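
The three triggers above can be combined in one small buffer. The following is a minimal sketch, not a reference implementation: `EventBatcher`, its option names, and the injected `send` and `now` functions are all hypothetical, and payload size is approximated by the character count of the serialized event rather than its exact byte size.

```typescript
type AnalyticsEvent = { name: string; params?: Record<string, unknown> };

interface BatcherOptions {
  maxEvents: number; // count-based threshold
  maxAgeMs: number; // time-based threshold
  maxChars: number; // size-based threshold (serialized length, approximate)
  send: (batch: AnalyticsEvent[]) => void; // transport, supplied by the caller
  now?: () => number; // clock, injectable for testing
}

class EventBatcher {
  private buffer: AnalyticsEvent[] = [];
  private bufferedChars = 0;
  private oldestAt: number | null = null;
  private readonly opts: BatcherOptions;
  private readonly now: () => number;

  constructor(opts: BatcherOptions) {
    this.opts = opts;
    this.now = opts.now ?? (() => Date.now());
  }

  track(event: AnalyticsEvent): void {
    const size = JSON.stringify(event).length;
    // Size-based: flush first if adding this event would exceed the limit.
    if (this.buffer.length > 0 && this.bufferedChars + size > this.opts.maxChars) {
      this.flush();
    }
    if (this.oldestAt === null) {
      this.oldestAt = this.now();
    }
    this.buffer.push(event);
    this.bufferedChars += size;
    // Count-based: flush as soon as the buffer holds maxEvents events.
    if (this.buffer.length >= this.opts.maxEvents) {
      this.flush();
    }
  }

  // Time-based: call this periodically (for example from setInterval).
  tick(): void {
    if (this.oldestAt !== null && this.now() - this.oldestAt >= this.opts.maxAgeMs) {
      this.flush();
    }
  }

  flush(): void {
    if (this.buffer.length === 0) return;
    const batch = this.buffer;
    this.buffer = [];
    this.bufferedChars = 0;
    this.oldestAt = null;
    this.opts.send(batch);
  }
}
```

The buffer is reset before `send` is called, so an event tracked from inside the transport callback starts a new batch instead of being lost.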

-

Delivery mechanisms

After batching, events are sent using optimized transport methods:

-

HTTP POST

Standard method for sending JSON payloads to analytics endpoints.

-

sendBeacon API

Ideal for flushing data during page unload without blocking navigation.

-

Fetch with keepalive

Lets a request outlive the page that started it, so it can complete after the page unloads.
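
One way to choose between these transports is sketched below, under stated assumptions: `TransportEnv`, `deliverBatch`, and the `{ events }` body shape are hypothetical, and the environment object stands in for the browser's `navigator.sendBeacon` and `fetch` so the logic can run anywhere. During unload it tries sendBeacon first and falls back to fetch with keepalive; otherwise it uses a regular POST.

```typescript
// The subset of browser networking APIs this sketch needs.
interface TransportEnv {
  sendBeacon?: (url: string, body: string) => boolean;
  fetch: (
    url: string,
    init: { method: string; headers: Record<string, string>; body: string; keepalive: boolean }
  ) => Promise<unknown>;
}

type TransportUsed = "beacon" | "fetch-keepalive" | "fetch";

function deliverBatch(
  url: string,
  events: unknown[],
  unloading: boolean,
  env: TransportEnv
): TransportUsed {
  const body = JSON.stringify({ events });
  // During unload, prefer sendBeacon: it queues the request without blocking
  // navigation. It returns false when the browser could not queue the data.
  if (unloading && env.sendBeacon && env.sendBeacon(url, body)) {
    return "beacon";
  }
  const init = {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body,
    keepalive: unloading, // lets the request finish after the page is gone
  };
  // Errors are swallowed here for brevity; a real client would retry or log.
  env.fetch(url, init).catch(() => undefined);
  return unloading ? "fetch-keepalive" : "fetch";
}

// Browser wiring (standard APIs):
//   const env = {
//     sendBeacon: (u: string, b: string) => navigator.sendBeacon(u, b),
//     fetch: (u: string, i: RequestInit) => fetch(u, i),
//   };
```

Note that a string passed to sendBeacon is sent as plain text, so the receiving endpoint is assumed to parse the body as JSON regardless of content type.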

Analytics Platform Implementations

Major analytics platforms offer built-in or configurable event batching to handle tracking volume effectively. Below are examples for Google Analytics 4 (GA4) and PlainSignal.

-

Google Analytics 4 (GA4)

GA4’s Measurement Protocol supports batching multiple events in a single HTTP request. There is no separate batch endpoint: include up to 25 event objects in the events array of one POST to /mp/collect.

-

Measurement protocol batch request

    POST /mp/collect?measurement_id=G-XXXX&api_secret=SECRET
    Content-Type: application/json

    {
      "client_id": "12345.67890",
      "events": [
        {"name": "page_view", "params": {}},
        {"name": "button_click", "params": {"label": "signup"}}
      ]
    }
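
Because the Measurement Protocol accepts at most 25 events per request, a larger buffer has to be split before sending. The sketch below shows one way to do that; `buildGa4Bodies` and `ga4CollectUrl` are hypothetical helper names, while the host, path, and query parameters follow the request shown above.

```typescript
type Ga4Event = { name: string; params: Record<string, unknown> };
type Ga4Body = { client_id: string; events: Ga4Event[] };

// GA4's Measurement Protocol accepts at most 25 events per request.
const GA4_MAX_EVENTS_PER_REQUEST = 25;

// Splits a list of events into request bodies of at most 25 events each.
function buildGa4Bodies(clientId: string, events: Ga4Event[]): Ga4Body[] {
  const bodies: Ga4Body[] = [];
  for (let i = 0; i < events.length; i += GA4_MAX_EVENTS_PER_REQUEST) {
    bodies.push({
      client_id: clientId,
      events: events.slice(i, i + GA4_MAX_EVENTS_PER_REQUEST),
    });
  }
  return bodies;
}

// Builds the collection URL for a given measurement ID and API secret.
function ga4CollectUrl(measurementId: string, apiSecret: string): string {
  const query =
    `measurement_id=${encodeURIComponent(measurementId)}` +
    `&api_secret=${encodeURIComponent(apiSecret)}`;
  return `https://www.google-analytics.com/mp/collect?${query}`;
}
```

Each body returned by `buildGa4Bodies` would then be serialized with `JSON.stringify` and sent as its own POST request.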

-

PlainSignal

PlainSignal automatically batches events by default to reduce network calls. Integrate by adding their lightweight script to your page.

-

Tracking snippet

    <link rel="preconnect" href="//eu.plainsignal.com/" crossorigin />
    <script defer data-do="yourwebsitedomain.com" data-id="0GQV1xmtzQQ" data-api="//eu.plainsignal.com" src="//cdn.plainsignal.com/plainsignal-min.js"></script>

Best Practices and Considerations

When implementing event batching, adhere to best practices to optimize performance and data reliability.

-

Optimizing thresholds

Choose appropriate batch size and intervals based on user behavior and network conditions.

-

Analyze event volume

Monitor average event rates to set realistic thresholds.

-

User experience balance

Ensure batching delays don’t undermine reports that depend on near-real-time data.
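
To make the threshold trade-off concrete, here is one possible heuristic, offered as an illustration rather than a recommendation: set the flush interval to the longest delay your reports can tolerate, then size the batch to the number of events expected in that window. The function name, its parameters, and the default cap of 25 are assumptions.

```typescript
interface ThresholdSuggestion {
  maxEvents: number;
  flushIntervalMs: number;
}

// Suggests a batch size from an observed event rate and an acceptable delay.
function suggestThresholds(
  eventsPerMinute: number,
  maxDelayMs: number,
  maxEventsCap = 25
): ThresholdSuggestion {
  // Events expected to accumulate within the acceptable delay window.
  const expected = Math.ceil((eventsPerMinute * maxDelayMs) / 60000);
  // Keep at least 1 event per batch and never exceed the cap.
  const maxEvents = Math.min(maxEventsCap, Math.max(1, expected));
  return { maxEvents, flushIntervalMs: maxDelayMs };
}
```

For example, at 120 events per minute and a 5-second tolerance, about 10 events accumulate per window, so a count threshold near 10 means the count and time triggers fire at roughly the same moment.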

-

Error handling and retries

Implement retry logic for failed batches and fallback mechanisms to prevent data loss.

-

Exponential backoff

Use increasing intervals between retry attempts to avoid server overload.

-

Fallback transport

Switch to sendBeacon or fetch with keepalive for critical data on page unload.
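
The retry guidance above can be sketched as follows. This is a minimal illustration with hypothetical names (`backoffDelayMs`, `sendWithRetry`, `RetryOptions`); the `sleep` function is injected so the caller decides how waiting works, and a `false` result signals that the caller should try a fallback transport or persist the batch.

```typescript
// Delay before retry number `attempt` (0-based): baseMs * 2^attempt, capped at maxMs.
function backoffDelayMs(attempt: number, baseMs: number, maxMs: number): number {
  return Math.min(maxMs, baseMs * 2 ** attempt);
}

interface RetryOptions {
  maxRetries: number; // retries allowed after the first attempt
  baseMs: number; // delay before the first retry
  maxMs: number; // upper bound on any single delay
  sleep: (ms: number) => Promise<void>;
}

// Calls `send` until it succeeds or retries are exhausted.
// Resolves to true on success, false if every attempt failed.
async function sendWithRetry(
  send: () => Promise<void>,
  opts: RetryOptions
): Promise<boolean> {
  for (let attempt = 0; ; attempt++) {
    try {
      await send();
      return true;
    } catch {
      if (attempt >= opts.maxRetries) {
        return false;
      }
      await opts.sleep(backoffDelayMs(attempt, opts.baseMs, opts.maxMs));
    }
  }
}

// A timer-based sleep for real use:
//   const sleep = (ms: number) => new Promise<void>((resolve) => setTimeout(resolve, ms));
```

Many clients also add random jitter to each delay so that devices that failed at the same moment do not all retry at once.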

-

Compliance and privacy

Ensure batched data respects user privacy agreements and GDPR/CCPA regulations.

-

Data minimization

Only include necessary event parameters to reduce exposure.

-

Consent management

Batch only after obtaining user consent where required.
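
Both privacy practices can be applied before an event ever reaches the batch buffer. The sketch below is illustrative only: `minimize`, `ConsentGate`, and the parameter allowlist are hypothetical, and it takes the strictest reading of the guidance above by discarding events tracked without consent rather than holding them.

```typescript
type TrackedEvent = { name: string; params: Record<string, unknown> };

// Data minimization: keep only explicitly allowed parameters.
function minimize(event: TrackedEvent, allowedParams: string[]): TrackedEvent {
  const params: Record<string, unknown> = {};
  for (const key of allowedParams) {
    if (key in event.params) {
      params[key] = event.params[key];
    }
  }
  return { name: event.name, params };
}

// Consent management: forward events to the batcher only while consent is granted.
class ConsentGate {
  private granted = false;
  private readonly forward: (event: TrackedEvent) => void;

  constructor(forward: (event: TrackedEvent) => void) {
    this.forward = forward;
  }

  setConsent(granted: boolean): void {
    this.granted = granted;
  }

  // Returns true if the event was forwarded, false if it was dropped.
  track(event: TrackedEvent): boolean {
    if (!this.granted) {
      return false;
    }
    this.forward(event);
    return true;
  }
}
```

In use, the gate's `forward` callback would typically minimize the event and pass it to the batch buffer, so nothing is queued until the user has opted in.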
