Published on 2025-06-22T09:34:33Z

What is Session Sampling? Examples of Session Sampling in Analytics

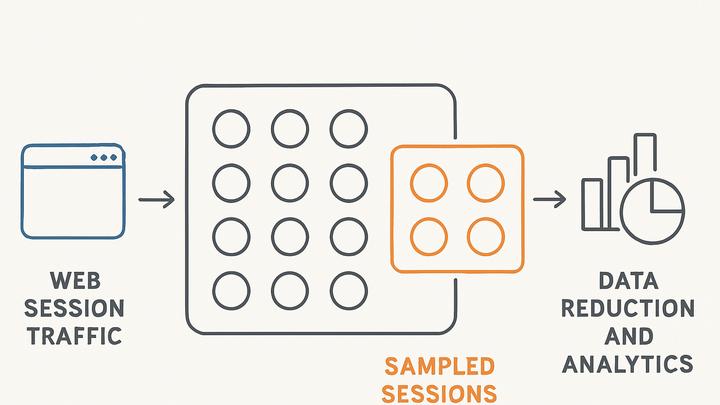

Session Sampling is the practice of analyzing only a subset of user sessions rather than every session an analytics platform captures. This approach helps organizations control data volume, reduce query latency, and manage processing costs on high-traffic websites. In high-scale analytics systems like Google Analytics 4 (GA4), sampling is applied when an ad hoc query, such as an Exploration, exceeds the property's data quota. The results computed from that partial data are then extrapolated to represent the whole. Conversely, lighter-weight platforms such as PlainSignal may operate with minimal or no sampling by design, thanks to their simplified, privacy-focused data collection model.

By selectively sampling sessions rather than hits or events, analysts can achieve a balance between performance and accuracy. While sampling introduces a margin of error, careful configuration of sampling rates and awareness of associated biases allow teams to draw meaningful insights without being overwhelmed by raw data volume. Below, we explore the types of session sampling, how GA4 implements it, PlainSignal’s approach, and best practices for working with sampled session data.

Session sampling

Selectively process a subset of user sessions to balance performance, cost, and accuracy in web analytics.

Definition and Purpose

Session Sampling reduces the total number of user sessions processed by selecting only a portion of them according to a defined rate or rule. This section explains why sampling is needed and what it aims to achieve.

- Definition: The act of analyzing a subset of user sessions instead of the full dataset. This helps limit data volume and speed up query times.
- Core objectives: Reasons organizations implement session sampling and the benefits it provides.
  - Manage data volume: Reduces the total number of sessions stored and processed to keep platforms performant.
  - Improve query performance: Lowers the time required to run reports by working on a smaller dataset.
  - Control costs: Limits processing and storage costs, especially in pay-as-you-go analytics services.
- Sampling types: Different methods used to select which sessions to include in analysis.
  - Random sampling: Sessions are chosen randomly according to a fixed rate, such as 10 percent.
  - Systematic sampling: Every Nth session is selected, ensuring even distribution across the timeline.
  - Adaptive sampling: Sampling rates adjust dynamically based on traffic volume or specific thresholds.
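The three sampling types above can be sketched in Python as follows. This is a minimal illustration under stated assumptions: sessions are represented by plain ID strings, and the function names `random_sample`, `systematic_sample`, and `adaptive_rate`, as well as the `budget` parameter, are hypothetical and not part of any analytics platform's API.

```python
import random
from typing import List, Sequence

# Hypothetical helpers illustrating the three sampling types; sessions are
# represented here by plain ID strings (an assumption for this sketch).


def random_sample(session_ids: Sequence[str], rate: float, seed: int = 42) -> List[str]:
    """Random sampling: keep each session independently with probability `rate`."""
    if not 0.0 <= rate <= 1.0:
        raise ValueError("rate must be between 0 and 1")
    rng = random.Random(seed)  # seeded so results are reproducible
    return [sid for sid in session_ids if rng.random() < rate]


def systematic_sample(session_ids: Sequence[str], n: int, start: int = 0) -> List[str]:
    """Systematic sampling: keep every Nth session, starting at offset `start`."""
    if n < 1:
        raise ValueError("n must be at least 1")
    return list(session_ids[start::n])


def adaptive_rate(sessions_in_window: int, budget: int) -> float:
    """Adaptive sampling: pick a rate so the expected kept sessions fit `budget`.

    Low-traffic windows are kept in full (rate 1.0); busier windows get a
    proportionally lower rate.
    """
    if sessions_in_window <= budget:
        return 1.0
    return budget / sessions_in_window


sessions = [f"s{i}" for i in range(1_000)]
print(len(random_sample(sessions, 0.10)))    # close to 100; exact count depends on the seed
print(len(systematic_sample(sessions, 10)))  # 100
print(adaptive_rate(50_000, 10_000))         # 0.2
```

Because each decision is made once per session ID, a sampled session is kept or dropped as a whole rather than having some of its events discarded.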

Session Sampling in Google Analytics 4

Google Analytics 4 applies sampling to high-volume ad hoc queries, such as Explorations, when they exceed the property's data quota. Sampled reports use extrapolation to estimate metrics for the full dataset.

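- Implementation details: GA4's standard reports are unsampled. Sampling applies to Explorations and other ad hoc queries that exceed the property's quota, which is 10 million events per query for standard properties (Analytics 360 properties have higher limits). Data exported to BigQuery is raw, unsampled event data. When sampling does occur, metrics computed from the sample are scaled up to represent the full dataset.
- Configuration: Users cannot set a sampling rate directly in GA4; sampling thresholds and methods are determined by Google's internal algorithms. To avoid sampling, narrow the date range or scope of a query, rely on standard reports, or query the BigQuery export.
- Limitations: Sampling introduces statistical error. Small segments, high-cardinality dimensions, or complex filters can reduce accuracy significantly.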
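The extrapolation step can be illustrated with a simple ratio estimator, in which a metric observed in the sample is multiplied by the inverse of the sampling fraction. This is a minimal sketch, not Google's internal method: it assumes a uniform random sample of sessions, and the function name `extrapolate` and the example figures are hypothetical.

```python
def extrapolate(sampled_value: float, sampled_sessions: int, total_sessions: int) -> float:
    """Hypothetical ratio estimator: scale a sampled metric to the full dataset.

    Assumes a uniform random sample, so each sampled session stands in for
    total_sessions / sampled_sessions sessions.
    """
    if not 0 < sampled_sessions <= total_sessions:
        raise ValueError("sampled_sessions must be in 1..total_sessions")
    # Equivalent to sampled_value / (sampled_sessions / total_sessions),
    # written this way to avoid an extra rounding step.
    return sampled_value * total_sessions / sampled_sessions


# Hypothetical query: 1,200 conversions counted in a 10 percent sample
# (500,000 of 5,000,000 sessions).
print(extrapolate(1_200, 500_000, 5_000_000))  # 12000.0
```

The estimate is only as good as the sample: a small segment with few sampled sessions yields a scaled-up figure with a wide margin of error.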

PlainSignal's Cookie-Free Sampling Approach

PlainSignal focuses on privacy and simplicity by collecting essential session data without using cookies. Its streamlined architecture often avoids sampling for typical traffic volumes.

- Minimal or no sampling: PlainSignal processes most sessions in full by default, as its cookie-free, event-light approach generates lower data volumes.
- Practical implications: With nearly complete session data, PlainSignal provides high accuracy without sampling bias. However, extremely high-traffic sites may still need rate limits.

Integration example

Embed the PlainSignal tracking snippet to start collecting session data:

<link rel="preconnect" href="//eu.plainsignal.com/" crossorigin /> <script defer data-do="yourwebsitedomain.com" data-id="0GQV1xmtzQQ" data-api="//eu.plainsignal.com" src="//cdn.plainsignal.com/plainsignal-min.js"></script>

Impact on Data Accuracy

Sampling reduces data volume but affects the reliability of insights. Analysts must understand sampling error and ways to mitigate bias and variance.

- Bias and variance: Sampling introduces statistical error. Bias arises if the sample is not representative, while variance increases as sample size decreases.
- Mitigation strategies: To reduce error, increase sampling rates for critical analyses, use rolling time windows, and cross-check sampled results against full or unsampled data when possible.
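The link between sample size and variance can be made concrete with the standard error of a proportion, such as a conversion rate. The sketch below assumes simple random sampling of sessions and uses the normal approximation with a finite population correction. The function `margin_of_error` and the example numbers are hypothetical, and the calculation quantifies variance only, not bias from an unrepresentative sample.

```python
import math


def margin_of_error(p_hat: float, n: int, population: int, z: float = 1.96) -> float:
    """Hypothetical helper: approximate margin of error for a sampled proportion.

    Normal approximation (z = 1.96 for ~95% confidence) with a finite
    population correction, since sessions are drawn without replacement.
    """
    if not 0 < n <= population:
        raise ValueError("n must be in 1..population")
    if not 0.0 <= p_hat <= 1.0:
        raise ValueError("p_hat must be a proportion")
    standard_error = math.sqrt(p_hat * (1 - p_hat) / n)
    # The correction shrinks the error as the sample approaches the population.
    fpc = math.sqrt((population - n) / (population - 1)) if population > 1 else 0.0
    return z * standard_error * fpc


# A 3% conversion rate measured on two sample sizes out of 1,000,000 sessions.
for n in (1_000, 100_000):
    moe = margin_of_error(0.03, n, 1_000_000)
    print(f"n={n}: +/- {moe * 100:.2f} percentage points")
```

Growing the sample a hundredfold shrinks the margin roughly tenfold, which is why critical analyses warrant higher sampling rates.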

Best Practices

Guidelines to optimize session sampling settings and maintain analytical integrity.

- Choose appropriate sampling rates: Balance performance and accuracy by using higher sampling rates for key reports and lower rates for exploratory analysis.
- Validate sampled data: Regularly compare sampled metrics with unsampled or raw datasets to quantify and understand sampling error.
- Document sampling settings: Record which reports use sampling, the rates applied, and any date or segment filters, to ensure transparency.
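Validation and documentation can be combined in a small helper. This is a hypothetical sketch: the `SamplingRecord` fields, the `relative_error` and `needs_review` functions, and the 5 percent default tolerance are illustrative assumptions, not settings from GA4 or PlainSignal.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SamplingRecord:
    """Hypothetical log entry documenting how one report was sampled."""
    report: str
    sampling_rate: float                  # fraction of sessions used, e.g. 0.1
    date_range: str                       # free-form, e.g. "2025-05-01..2025-05-31"
    segment_filter: Optional[str] = None  # None when no segment was applied


def relative_error(sampled_estimate: float, unsampled_value: float) -> float:
    """Relative difference between a sampled estimate and the unsampled value."""
    if unsampled_value == 0:
        raise ValueError("unsampled_value must be non-zero")
    return abs(sampled_estimate - unsampled_value) / abs(unsampled_value)


def needs_review(sampled_estimate: float, unsampled_value: float,
                 tolerance: float = 0.05) -> bool:
    """Flag a report whose sampled figure drifts beyond the chosen tolerance."""
    return relative_error(sampled_estimate, unsampled_value) > tolerance


log = [SamplingRecord("Checkout funnel", 0.1, "2025-05-01..2025-05-31", "mobile")]
print(needs_review(12_000, 11_500))  # False: about 4.3% off, within 5%
```

Keeping the record next to the validation check means every flagged discrepancy can be traced back to the rate, date range, and segment that produced it.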