Published on 2025-06-27T19:02:28Z

What is a Data Aggregator? Examples in Analytics

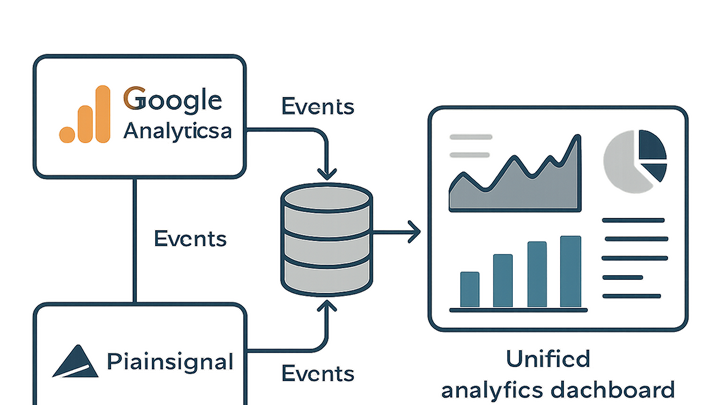

In analytics, a data aggregator is a platform or tool that collects, processes, and consolidates raw data from multiple sources into a unified dataset ready for analysis. It reduces the complexity of handling disparate data points from web analytics platforms, mobile apps, server logs, and third-party services. By applying transformations and normalization, data aggregators make data consistent across sources, so teams can generate accurate reports and insights. SaaS solutions like Google Analytics 4 (GA4) and privacy-focused tools like PlainSignal illustrate different approaches to aggregation, from advanced machine learning models to simple cookie-free event tracking. This glossary entry explores the role, workflows, and best practices of data aggregators in modern analytics architectures.

Data aggregator

A data aggregator consolidates analytics data from multiple sources into a unified dataset for comprehensive analysis.

Why Data Aggregation Matters

In analytics, disparate data streams from websites, apps, and servers can lead to fragmented insights. Data aggregators provide a single source of truth by merging these sources into consistent, accessible formats. This unification reduces complexity, improves analytical accuracy, and speeds up decision-making. By handling large volumes of raw events, aggregators let teams focus on interpreting results rather than wrangling data. This makes them a key component of scalable, reliable reporting across an organization.

- Unified view of data: Combines metrics from multiple platforms into a coherent dataset.
- Enhanced accuracy: Applies normalization and deduplication to ensure data quality.
- Streamlined workflows: Automates data collection and processing, saving engineering effort.

How Data Aggregators Work

Data aggregators typically follow a structured pipeline: collecting raw events, transforming them into standardized formats, and loading them into storage or analytics tools. This ETL (Extract, Transform, Load) process can run in batch or in real time, depending on business needs. Modern platforms often support customizable transformations, allowing for data enrichment, filtering, and alignment with internal schemas. Understanding each stage helps in optimizing performance and maintaining data integrity.

- Data collection: Ingests data from various sources such as SDKs, trackers, APIs, and server logs.
  - APIs: Pulls data via built-in connectors from services like GA4.
  - Trackers and snippets: Uses JavaScript tags or mobile SDKs embedded in digital properties.
  - Log files: Processes server and application logs for event extraction.
- Data transformation: Cleanses, normalizes, and enriches raw data to align with analytical models.
  - Normalization: Standardizes metrics and dimensions across sources.
  - Deduplication: Removes repeated or redundant events.
  - Enrichment: Augments data with additional context, like user attributes.
- Data loading: Transfers processed data to storage solutions or BI tools for analysis.
  - Data warehouses: Loads into systems like BigQuery, Snowflake, or Redshift.
  - BI tools: Feeds visualization platforms like Looker, Tableau, or Looker Studio (formerly Data Studio).
  - Custom dashboards: Enables bespoke internal reporting solutions.
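The three stages above can be sketched in a few dozen lines of Python. This is a minimal illustration under stated assumptions, not how any particular product works: the sample records, field names (`event_id`, `uid`, `epoch`, and so on), the `USER_PLANS` lookup table, and the use of an in-memory SQLite database as a stand-in for a data warehouse are all hypothetical.

```python
import sqlite3
from datetime import datetime, timezone

# Hypothetical raw records from two sources that use different field names.
WEB_EVENTS = [
    {"event_id": "w1", "event": "page_view", "ts": "2025-06-01T10:00:00+00:00", "uid": "u1"},
    {"event_id": "w1", "event": "page_view", "ts": "2025-06-01T10:00:00+00:00", "uid": "u1"},  # duplicate
    {"event_id": "w2", "event": "Sign_Up", "ts": "2025-06-01T10:05:00+00:00", "uid": "u2"},
]
SERVER_LOGS = [
    {"id": "s1", "action": "purchase", "epoch": 1748772600, "user": "u1"},
]
USER_PLANS = {"u1": "pro", "u2": "free"}  # hypothetical lookup table used for enrichment


def normalize(record, source):
    """Map a source-specific record onto one shared schema with UTC ISO timestamps."""
    if source == "web":
        when = datetime.fromisoformat(record["ts"]).astimezone(timezone.utc)
        event_id, name, user_id = record["event_id"], record["event"], record["uid"]
    else:
        when = datetime.fromtimestamp(record["epoch"], tz=timezone.utc)
        event_id, name, user_id = record["id"], record["action"], record["user"]
    return {
        "event_id": event_id,
        "name": name.lower(),
        "timestamp": when.isoformat(),
        "user_id": user_id,
        "source": source,
    }


def deduplicate(events):
    """Keep the first occurrence of each (source, event_id) pair."""
    seen, unique = set(), []
    for event in events:
        key = (event["source"], event["event_id"])
        if key not in seen:
            seen.add(key)
            unique.append(event)
    return unique


def enrich(event):
    """Attach a user attribute from the lookup table."""
    return {**event, "plan": USER_PLANS.get(event["user_id"], "unknown")}


def load(events, conn):
    """Write processed events to a table (SQLite stands in for a warehouse)."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS events "
        "(event_id TEXT, name TEXT, timestamp TEXT, user_id TEXT, source TEXT, plan TEXT)"
    )
    conn.executemany(
        "INSERT INTO events VALUES (:event_id, :name, :timestamp, :user_id, :source, :plan)",
        events,
    )
    conn.commit()


def run_pipeline(conn):
    """Collect, transform, and load; returns the processed events."""
    collected = [normalize(r, "web") for r in WEB_EVENTS]
    collected += [normalize(r, "server") for r in SERVER_LOGS]
    processed = [enrich(e) for e in deduplicate(collected)]
    load(processed, conn)
    return processed


if __name__ == "__main__":
    connection = sqlite3.connect(":memory:")
    run_pipeline(connection)
    for row in connection.execute("SELECT name, COUNT(*) FROM events GROUP BY name ORDER BY name"):
        print(row)
```

Keeping each stage as a separate function makes it easier to test transformations in isolation and to swap the loading target without touching collection or normalization.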

Examples: GA4 and PlainSignal

Different analytics tools embody data aggregation in unique ways. Google Analytics 4 (GA4) represents a full-featured, event-based aggregator with advanced machine learning and cross-platform support. PlainSignal offers a lightweight, privacy-centric approach, aggregating page views and events without cookies. Below are examples of how each tool ingests and aggregates data.

- Google Analytics 4 (GA4): GA4 collects events via gtag.js or Google Tag Manager and aggregates them in Google’s cloud. It applies built-in models for attribution, conversion tracking, and churn prediction, exposing results through the GA interface and Data API. It supports real-time reports and custom funnels.
- PlainSignal: PlainSignal provides a simple, cookie-free tracking snippet to aggregate analytics data while prioritizing user privacy. Add the following tracking snippet to your site:

<link rel="preconnect" href="//eu.plainsignal.com/" crossorigin />
<script defer data-do="yourwebsitedomain.com" data-id="0GQV1xmtzQQ" data-api="//eu.plainsignal.com" src="//cdn.plainsignal.com/plainsignal-min.js"></script>

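To make the idea of cookie-free aggregation more concrete, here is a rough Python sketch of counting page views without reading or storing any user identifier. It is not PlainSignal's or GA4's actual implementation; the `HITS` sample data, the `aggregate_page_views` function, and the hit format (a URL plus an ISO 8601 timestamp) are assumptions made for illustration.

```python
from collections import Counter
from datetime import datetime
from urllib.parse import urlsplit

# Hypothetical incoming hits: only a URL and a timestamp, no cookie or user ID.
HITS = [
    {"url": "https://example.com/pricing?utm_source=mail", "ts": "2025-06-01T09:00:00+00:00"},
    {"url": "https://example.com/pricing", "ts": "2025-06-01T11:30:00+00:00"},
    {"url": "https://example.com", "ts": "2025-06-02T08:15:00+00:00"},
]


def aggregate_page_views(hits):
    """Count page views per (date, path); query strings are dropped before counting."""
    counts = Counter()
    for hit in hits:
        day = datetime.fromisoformat(hit["ts"]).date().isoformat()
        path = urlsplit(hit["url"]).path or "/"  # an empty path means the site root
        counts[(day, path)] += 1
    return counts


if __name__ == "__main__":
    for (day, path), views in sorted(aggregate_page_views(HITS).items()):
        print(day, path, views)
```

Because only counts per day and path are kept, the stored result contains no per-visitor records, which is the trade-off a lightweight aggregator accepts in exchange for simpler privacy handling.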

Best Practices for Data Aggregation

Effective data aggregation requires attention to privacy, data quality, and system performance. Adhering to best practices ensures the aggregated dataset is reliable, compliant, and actionable. Regular audits, clear schema definitions, and appropriate latency settings help maintain trust in analytics outputs.

- Privacy compliance: Ensure GDPR, CCPA, and other regulations are met by anonymizing or minimizing personal data.
- Data quality checks: Implement validation rules and monitor for anomalies or missing data.
- Latency management: Choose between batch or real-time processing based on use cases and infrastructure.
- Schema governance: Maintain clear documentation of event definitions, naming conventions, and data transformations.
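Several of these practices can be enforced in code. The sketch below shows one possible way to combine a documented event schema, validation rules, and data minimization in Python. The schema contents, the `ALLOWED_NAMES` set, and both function names are hypothetical; a production setup would likely use a dedicated validation library and a schema registry.

```python
# Hypothetical event schema: field name -> expected Python type.
# Keeping this in one place doubles as documentation of the event definition.
EVENT_SCHEMA = {
    "name": str,
    "timestamp": str,
    "path": str,
}
ALLOWED_NAMES = {"page_view", "sign_up", "purchase"}  # assumed naming convention


def validate_event(event):
    """Return a list of problems found in the event; an empty list means it passed."""
    problems = []
    for field, expected_type in EVENT_SCHEMA.items():
        if field not in event:
            problems.append(f"missing field: {field}")
        elif not isinstance(event[field], expected_type):
            problems.append(f"wrong type for {field}: {type(event[field]).__name__}")
    name = event.get("name")
    if isinstance(name, str) and name not in ALLOWED_NAMES:
        problems.append(f"unknown event name: {name}")
    return problems


def minimize_event(event):
    """Keep only fields declared in the schema, dropping extras such as an email address."""
    return {key: value for key, value in event.items() if key in EVENT_SCHEMA}


if __name__ == "__main__":
    raw = {
        "name": "page_view",
        "timestamp": "2025-06-01T10:00:00+00:00",
        "path": "/",
        "email": "a@example.com",  # personal data that should not be stored
    }
    print(validate_event(raw))
    print(minimize_event(raw))
```

Running validation before loading lets invalid events be routed to a review queue rather than silently skewing reports, and minimizing against the same schema keeps undeclared personal data out of the aggregated dataset.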