Published on 2025-06-26T04:47:29Z

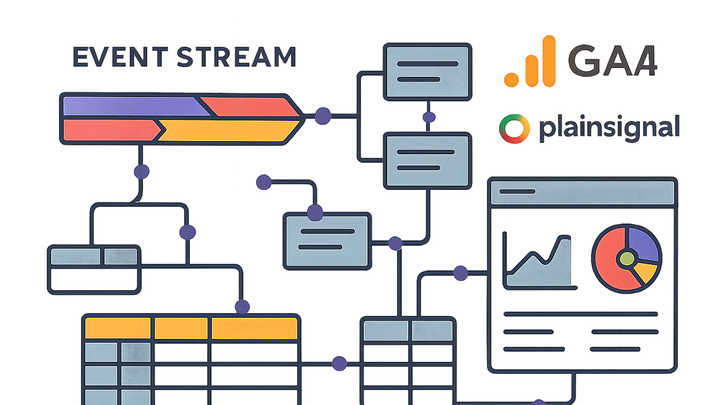

What is Data Modeling in Analytics? Examples with PlainSignal and GA4

In analytics, data modeling defines how raw data is structured, related, and stored to support reliable and scalable reporting. It involves designing schemas, entities, attributes, and relationships that translate event-level data into meaningful insights. A solid data model ensures consistency across dashboards, improves query performance, and enforces data quality and governance. Modern analytics platforms like Google Analytics 4 (GA4) use an event-parameter-based model, while cookie-free tools such as PlainSignal offer simplified schema designs. Understanding data modeling is crucial for aligning technical implementations with business metrics, optimizing performance, and maintaining trust in your analytics ecosystem.

Data modeling

Process of defining how analytics data is structured and related to ensure consistent, scalable insights across tools like GA4 and PlainSignal.

Why Data Modeling Matters

A robust data model ensures that analytics data is accurate, consistent, and easy to query. It underpins every dashboard, report, and analysis by defining how raw events and attributes relate to each other. Without proper modeling, metrics become ambiguous, queries inefficient, and insights unreliable. Data modeling addresses these challenges by formalizing definitions, establishing relationships, and optimizing performance. In event-based tools like GA4 and PlainSignal, where every interaction is recorded as an event, a clear data model is the foundation for trust and scalability.

- Analytical consistency: Ensures that metrics and dimensions are defined uniformly across reports, preventing discrepancies and misinterpretations.
- Performance & scalability: Optimizes database schemas and queries to handle large volumes of event data efficiently.
- Data governance & quality: Establishes validation rules, naming conventions, and lineage to maintain data accuracy and compliance.

Core Components of Data Modeling

Data modeling in analytics involves defining the building blocks that structure raw data into meaningful constructs. These components dictate how event-level data, dimensional attributes, and aggregated metrics are organized for analysis. Understanding these elements is crucial for designing models that support flexible querying and consistent reporting across tools.

- Entities & attributes: Fundamental elements representing real-world objects or events (entities) and their properties (attributes).
  - Entities: Objects like users, sessions, or pageviews that form the primary data records.
  - Attributes: Properties such as user_id, event_name, or page_path that describe entities.

- Relationships & keys: Define how different entities connect, typically through primary and foreign keys.
  - Primary keys: Unique identifiers for each entity record (e.g., a user or device ID; in GA4, client_id identifies a browser instance).
  - Foreign keys: References that link one entity to another, enabling joins (e.g., a session ID stored on each event links it to its session; GA4 records this as the ga_session_id event parameter).

- Schema types: Common schemas include star and snowflake, each balancing simplicity and normalization differently.
  - Star schema: A central fact table linked to denormalized dimension tables for faster queries.
  - Snowflake schema: Normalized dimensions reduce redundancy but may require more complex joins.
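The sketch below models a tiny star schema in plain JavaScript: a fact table of events whose foreign keys (`user_id`, `page_id`) point at the primary keys of two dimension tables. All table and column names are hypothetical, and in-memory arrays and Maps stand in for warehouse tables; the join-then-aggregate step mirrors what a SQL `JOIN ... GROUP BY` would do.

```javascript
// Hypothetical dimension tables: each row is an entity keyed by its primary key.
const dimUsers = new Map([
  ["u1", { user_id: "u1", country: "DE", plan: "free" }],
  ["u2", { user_id: "u2", country: "US", plan: "pro" }],
]);

const dimPages = new Map([
  ["p1", { page_id: "p1", page_path: "/pricing" }],
  ["p2", { page_id: "p2", page_path: "/blog/data-modeling" }],
]);

// Hypothetical fact table: one row per event, holding foreign keys
// (user_id, page_id) into the dimension tables.
const factEvents = [
  { event_id: 1, event_name: "page_view", user_id: "u1", page_id: "p1" },
  { event_id: 2, event_name: "page_view", user_id: "u2", page_id: "p1" },
  { event_id: 3, event_name: "page_view", user_id: "u2", page_id: "p2" },
  { event_id: 4, event_name: "sign_up", user_id: "u2", page_id: "p1" },
];

// Join each fact row to its dimensions via the foreign keys, then count
// events grouped by one user attribute and one page attribute.
function countEventsBy(eventName, userAttr, pageAttr) {
  const counts = new Map();
  for (const row of factEvents) {
    if (row.event_name !== eventName) continue;
    const user = dimUsers.get(row.user_id);
    const page = dimPages.get(row.page_id);
    if (!user || !page) {
      // A dangling foreign key signals a data-quality problem in the model.
      throw new Error(`Unresolved key in event ${row.event_id}`);
    }
    const key = `${user[userAttr]}|${page[pageAttr]}`;
    counts.set(key, (counts.get(key) ?? 0) + 1);
  }
  return counts;
}

console.log(countEventsBy("page_view", "country", "page_path"));
```

Because attributes such as `country` live only in the dimension table, correcting one of them updates every report that joins to it, without rewriting event rows.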

Approaches in Analytics Data Modeling

Different modeling methodologies address various stages of data design, from high-level concepts to physical implementation. Analytics teams choose approaches based on flexibility, performance, and tool capabilities.

- Conceptual, logical, and physical models: Progressive modeling stages. The conceptual model defines business entities, the logical model structures their relationships, and the physical model translates them into database schemas.
  - Conceptual model: Illustrates business concepts and high-level relationships without technical details.
  - Logical model: Specifies entities, attributes, and relationships in detail, independent of physical storage.
  - Physical model: Implements the logical model in a specific database or analytics tool, defining tables, columns, data types, and indexes.

- Schema-on-write vs schema-on-read: Schema-on-write enforces structure at ingestion, while schema-on-read applies structure at query time.
  - Schema-on-write: Defines the schema before data is loaded (common in data warehouses).
  - Schema-on-read: Stores raw data and applies a schema when it is read (flexible; common in data lakes).

- Event-based modeling: Focuses on capturing and structuring discrete user interactions (events) with customizable parameters and hierarchies.
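The difference between schema-on-write and schema-on-read can be sketched with event records. This is a minimal illustration, not a storage engine: the `eventSchema` fields and the helper names are hypothetical, an array stands in for a warehouse table, and another array of raw JSON lines stands in for a data lake.

```javascript
// Hypothetical event schema for this sketch: field name -> expected JS type.
const eventSchema = {
  event_name: "string",
  user_id: "string",
  timestamp: "number",
};

// Schema-on-write: validate each record at ingestion and reject misfits.
function ingestWithSchema(store, record) {
  for (const [field, type] of Object.entries(eventSchema)) {
    if (typeof record[field] !== type) {
      throw new TypeError(`Field "${field}" must be a ${type}`);
    }
  }
  store.push({ ...record });
}

// Schema-on-read: keep raw lines untouched at ingestion...
function ingestRaw(rawStore, jsonLine) {
  rawStore.push(jsonLine);
}

// ...and impose the schema only when the data is queried.
function readWithSchema(rawStore) {
  const rows = [];
  for (const line of rawStore) {
    let parsed;
    try {
      parsed = JSON.parse(line);
    } catch {
      continue; // Malformed lines are only detected at read time; this sketch skips them.
    }
    const row = {};
    for (const [field, type] of Object.entries(eventSchema)) {
      // Fields that are missing or have the wrong type are read as null.
      row[field] = typeof parsed?.[field] === type ? parsed[field] : null;
    }
    rows.push(row);
  }
  return rows;
}

const warehouse = [];
ingestWithSchema(warehouse, { event_name: "page_view", user_id: "u1", timestamp: 1750913249000 });

const lake = [];
ingestRaw(lake, '{"event_name":"page_view","user_id":"u1","timestamp":1750913249000}');
ingestRaw(lake, '{"event_name":"click","button":"signup"}');
ingestRaw(lake, "not json");
console.log(readWithSchema(lake));
```

The `click` record shows the trade-off: `ingestWithSchema` would reject it for lacking `user_id`, whereas the raw store keeps it and each query decides how to treat the missing fields.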

Implementing Data Models in GA4 and PlainSignal

Different analytics platforms offer distinct data modeling paradigms. GA4 adopts a flexible event-parameter model, while PlainSignal emphasizes a simple, privacy-first approach. Understanding each model helps tailor your tracking and analysis strategies.

- GA4 data model: GA4 uses an event-centric schema in which every interaction is an event that can carry up to 25 parameters. It also supports user properties, which describe segments of your user base for analyses such as cohorts.
- PlainSignal data model: PlainSignal tracks events with customizable attributes in a cookie-free model, emphasizing simplicity and privacy. It collects minimal user data while preserving analytical flexibility.

PlainSignal tracking code

```html
<link rel="preconnect" href="//eu.plainsignal.com/" crossorigin />
<script defer data-do="yourwebsitedomain.com" data-id="0GQV1xmtzQQ" data-api="//eu.plainsignal.com" src="//cdn.plainsignal.com/plainsignal-min.js"></script>
```

GA4 tracking code

```html
<!-- Google Analytics 4 tracking snippet -->
<script async src="https://www.googletagmanager.com/gtag/js?id=G-XXXXXXX"></script>
<script>
  window.dataLayer = window.dataLayer || [];
  function gtag(){dataLayer.push(arguments);}
  gtag('js', new Date());
  // Replace G-XXXXXXX (here and in the URL above) with your measurement ID.
  gtag('config', 'G-XXXXXXX');
</script>
```

Comparison

GA4 offers deep integrations with Google’s ecosystem and advanced machine learning features, while PlainSignal prioritizes privacy and simplicity without cookies.
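Because GA4 events carry their data in parameters, it can help to check payloads against GA4's collection limits before sending them. The sketch below assumes the limits Google published at the time of writing (25 parameters per event, 40-character event and parameter names, 100-character parameter values) and a simplified naming rule; verify both against the current documentation. `validateGa4Event` and the `plan_tier` parameter are hypothetical, while `sign_up` with a `method` parameter is one of GA4's recommended events.

```javascript
// Limits assumed from Google's published GA4 collection limits at the time
// of writing; check the current documentation before relying on them.
const GA4_LIMITS = {
  maxParams: 25,
  maxNameLength: 40,
  maxParamValueLength: 100,
};

// Simplified naming rule: start with a letter, then letters, digits, or underscores.
const NAME_PATTERN = /^[A-Za-z][A-Za-z0-9_]*$/;

// Return a list of problems; an empty list means the payload looks sendable.
function validateGa4Event(eventName, params = {}) {
  const errors = [];
  if (!NAME_PATTERN.test(eventName) || eventName.length > GA4_LIMITS.maxNameLength) {
    errors.push(`invalid event name: ${eventName}`);
  }
  const entries = Object.entries(params);
  if (entries.length > GA4_LIMITS.maxParams) {
    errors.push(`too many parameters: ${entries.length} > ${GA4_LIMITS.maxParams}`);
  }
  for (const [name, value] of entries) {
    if (!NAME_PATTERN.test(name) || name.length > GA4_LIMITS.maxNameLength) {
      errors.push(`invalid parameter name: ${name}`);
    }
    if (typeof value === "string" && value.length > GA4_LIMITS.maxParamValueLength) {
      errors.push(`parameter value too long: ${name}`);
    }
  }
  return errors;
}

// In the browser, a payload that passes would then be sent with the gtag
// snippet shown above, e.g. gtag('event', 'sign_up', params).
const params = { method: "email", plan_tier: "pro" };
const problems = validateGa4Event("sign_up", params);
console.log(problems.length === 0 ? "ok to send" : problems);
```

Running the check where events are defined, rather than discovering truncated or dropped parameters in reports later, keeps the event model and the collected data aligned.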

Best Practices for Data Modeling

Adopting best practices ensures your model remains reliable and adaptable. Regular review, documentation, and alignment with business goals help teams leverage analytics effectively.

- Define clear naming conventions: Use consistent, descriptive names for events, parameters, tables, and columns to avoid ambiguity.
- Document your schema: Maintain a living document or data dictionary explaining entity definitions, relationships, and transformation logic.
- Align models with business KPIs: Ensure that your model reflects the key metrics and dimensions relevant to strategic objectives.
- Iterate and validate: Continuously test and refine your data model against new data sources and use cases to maintain accuracy.
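The naming, documentation, and validation practices can be combined into a lightweight audit. This is a minimal sketch that assumes a lowercase snake_case convention (matching the style of GA4's recommended event names such as `page_view` and `sign_up`) and a hypothetical in-code data dictionary; in practice the dictionary would more likely live in a shared document or schema registry.

```javascript
// Hypothetical data dictionary: each documented event with its parameters' types.
const dataDictionary = {
  page_view: {
    description: "A page was displayed to the visitor.",
    params: { page_path: "string" },
  },
  sign_up: {
    description: "A visitor created an account.",
    params: { method: "string" },
  },
};

// Assumed convention for this sketch: lowercase snake_case names.
const SNAKE_CASE = /^[a-z][a-z0-9]*(_[a-z0-9]+)*$/;

// Own-property lookup, so names like "constructor" are not mistaken for entries.
const hasOwn = (obj, key) => Object.prototype.hasOwnProperty.call(obj, key);

// Audit tracked events against the naming convention and the dictionary.
function auditEvents(events) {
  const issues = [];
  for (const { name, params = {} } of events) {
    if (!SNAKE_CASE.test(name)) {
      issues.push(`"${name}" does not follow snake_case`);
    }
    if (!hasOwn(dataDictionary, name)) {
      issues.push(`"${name}" is not documented in the data dictionary`);
      continue;
    }
    const documented = dataDictionary[name].params;
    for (const [param, value] of Object.entries(params)) {
      if (!hasOwn(documented, param)) {
        issues.push(`"${name}.${param}" is not documented`);
      } else if (typeof value !== documented[param]) {
        issues.push(`"${name}.${param}" should be a ${documented[param]}`);
      }
    }
  }
  return issues;
}

console.log(
  auditEvents([
    { name: "page_view", params: { page_path: "/pricing" } },
    { name: "SignUp", params: { method: "email" } },
    { name: "sign_up", params: { method: 42 } },
  ])
);
```

Running such an audit whenever tracking changes is one way to put "iterate and validate" into practice: undocumented or misnamed events surface before they reach reports.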