Published on 2025-06-27T20:57:29Z

What is Data Processing? Examples of Data Processing in Analytics

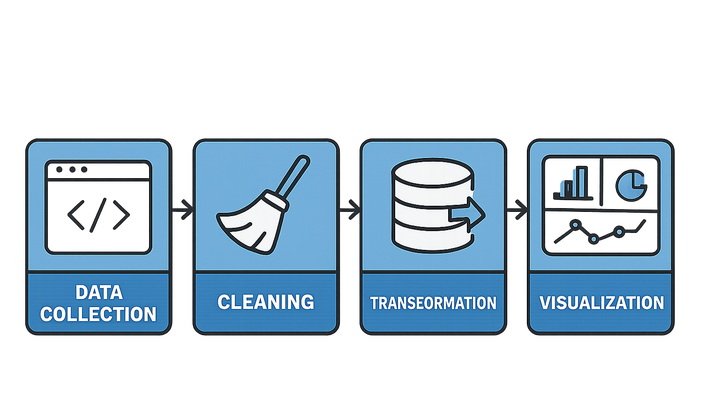

Data processing in analytics refers to the sequence of steps by which raw data is collected, cleansed, transformed, and analyzed to produce meaningful insights. It ensures that insights drawn from tracking user events—whether through Google Analytics 4’s gtag.js snippet or PlainSignal’s cookie-free script—are accurate, reliable, and actionable.

The process typically begins with data collection using snippets such as:

```html
<link rel="preconnect" href="//eu.plainsignal.com/" crossorigin />
<script defer data-do="yourwebsitedomain.com" data-id="0GQV1xmtzQQ" data-api="//eu.plainsignal.com" src="//cdn.plainsignal.com/plainsignal-min.js"></script>
```

Once captured, data undergoes cleaning to remove duplicates and correct errors. It is then transformed—aggregated, normalized, and enriched—before final analysis and visualization in tools like GA4 or PlainSignal dashboards. Effective data processing balances speed (real-time vs. batch), quality, and privacy compliance (GDPR, CCPA), enabling teams to monitor performance, detect anomalies, and drive optimizations with confidence.

Data processing

Converting raw event data into accurate, actionable insights through collection, cleaning, transformation, and analysis.

The Data Processing Lifecycle

Data processing in analytics converts raw user interactions into structured information through a series of stages. Each stage refines the data to ensure accuracy and relevance before insights are derived.

- Data collection: Capturing raw events from websites or apps via tracking code such as GA4’s gtag.js snippet or PlainSignal’s cookie-free script. Correct placement and configuration are critical for complete data capture.
- Data cleaning: Identifying and removing duplicates, filling missing values, and correcting malformed entries to maintain data integrity.
- Data transformation: Converting and enriching data (aggregating metrics, normalizing formats, and mapping event names) to prepare for analysis.
- Analysis & visualization: Generating reports, dashboards, and alerts in analytics platforms like GA4 or PlainSignal to surface key metrics and trends.
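The cleaning, transformation, and analysis stages above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how GA4 or PlainSignal process data internally: the event fields (`id`, `name`, `path`, `country`), the `EVENT_NAME_MAP` table, and the sample events are all hypothetical. For brevity, the sketch drops malformed rows rather than correcting them.

```python
from collections import Counter

# Hypothetical raw events; field names are illustrative, not a GA4 or PlainSignal schema.
RAW_EVENTS = [
    {"id": "e1", "name": "Page_View", "path": "/pricing", "country": "DE"},
    {"id": "e1", "name": "Page_View", "path": "/pricing", "country": "DE"},  # duplicate
    {"id": "e2", "name": "pageview", "path": "/", "country": None},          # missing value
    {"id": "e3", "name": "sign up", "path": "/signup", "country": "US"},
    {"id": "e4", "name": "page_view", "path": "", "country": "US"},          # malformed path
]

# Assumed mapping from name variants to canonical event names.
EVENT_NAME_MAP = {"page_view": "page_view", "pageview": "page_view", "sign_up": "sign_up"}


def clean(events):
    """Drop duplicate ids and rows with a malformed path; fill missing countries."""
    seen, cleaned = set(), []
    for event in events:
        if event["id"] in seen or not event["path"].startswith("/"):
            continue
        seen.add(event["id"])
        cleaned.append({**event, "country": event["country"] or "unknown"})
    return cleaned


def transform(events):
    """Normalize event names to a canonical form."""
    out = []
    for event in events:
        key = event["name"].strip().lower().replace(" ", "_")
        out.append({**event, "name": EVENT_NAME_MAP.get(key, key)})
    return out


def analyze(events):
    """Aggregate event counts per canonical name."""
    return Counter(event["name"] for event in events)


report = analyze(transform(clean(RAW_EVENTS)))
print(report)
```

Keeping each stage as its own function means the cleaning rules or the name mapping can change without touching the aggregation step.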

Real-Time vs. Batch Processing

Analytics teams choose between processing data in real time or in scheduled batches based on use cases, performance, and resource considerations.

- Real-time processing: Processes data immediately upon capture, enabling live dashboards and instant anomaly detection. Used for monitoring active campaigns or website health.
  - Use cases: Live traffic monitoring, A/B test validation, server health checks.
  - Limitations: Higher compute cost and potential constraints on complex transformations.
- Batch processing: Accumulates data and processes it at predefined intervals, ideal for heavy transformations, historical analysis, and generating periodic reports.
  - Use cases: Daily performance reports, trend analysis, financial reconciliation.
  - Limitations: Latency between event capture and availability can delay insights.
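The difference between the two modes can be shown with a small Python sketch. It assumes events are dictionaries with an ISO 8601 `timestamp` string and a `path`; the `RealTimeCounter` class and `batch_daily_report` function are hypothetical names used only for illustration. The real-time path updates a result on every event, while the batch path waits for accumulated data and processes it in one run.

```python
from collections import Counter, defaultdict


class RealTimeCounter:
    """Updates per-path counts as each event arrives."""

    def __init__(self):
        self.counts = Counter()

    def on_event(self, event):
        self.counts[event["path"]] += 1
        # The updated count is available immediately, e.g. for a live dashboard.
        return self.counts[event["path"]]


def batch_daily_report(events):
    """Process an accumulated batch in one pass: page views per day and path."""
    report = defaultdict(Counter)
    for event in events:
        day = event["timestamp"][:10]  # assumes ISO 8601 strings such as "2025-06-27T20:57:29Z"
        report[day][event["path"]] += 1
    return {day: dict(paths) for day, paths in report.items()}


# Hypothetical events for illustration.
EVENTS = [
    {"timestamp": "2025-06-26T09:00:00Z", "path": "/"},
    {"timestamp": "2025-06-26T09:05:00Z", "path": "/pricing"},
    {"timestamp": "2025-06-27T10:00:00Z", "path": "/"},
]

live = RealTimeCounter()
running = [live.on_event(e) for e in EVENTS]  # one update per event
daily = batch_daily_report(EVENTS)            # one run over the whole batch
print(running, daily)
```

In practice the batch function would run on a schedule over stored events, which is where the latency noted above comes from.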

Ensuring Data Quality and Privacy

Reliable analytics requires both accurate data and adherence to privacy regulations. Implementing best practices safeguards user trust and compliance.

- Data accuracy: Validate event triggers, deduplicate data, and filter out bot traffic. Tools like PlainSignal include built-in filtering to enhance accuracy.
- Privacy compliance: Adopt cookie-free or first-party tracking methods, anonymize IP addresses, and follow GDPR/CCPA guidelines. GA4 and PlainSignal both offer features to help maintain compliance.
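Two of the practices above, bot filtering and IP anonymization, can be sketched with Python's standard library. This is an illustrative sketch, not the filtering that GA4 or PlainSignal actually apply: the `BOT_MARKERS` list, the event fields, and the choice to truncate IPv4 addresses to a /24 and IPv6 addresses to a /48 are assumptions.

```python
import ipaddress

# Assumed substrings for spotting self-declared bots; production filters are more involved.
BOT_MARKERS = ("bot", "crawler", "spider")


def is_bot(user_agent):
    """Return True if the user agent contains a known bot marker."""
    ua = user_agent.lower()
    return any(marker in ua for marker in BOT_MARKERS)


def anonymize_ip(ip):
    """Zero the trailing bits: last octet for IPv4, last 80 bits for IPv6."""
    addr = ipaddress.ip_address(ip)
    prefix = 24 if addr.version == 4 else 48
    network = ipaddress.ip_network(f"{ip}/{prefix}", strict=False)
    return str(network.network_address)


def sanitize(events):
    """Drop bot traffic and replace raw IPs with truncated ones."""
    return [
        {**event, "ip": anonymize_ip(event["ip"])}
        for event in events
        if not is_bot(event["user_agent"])
    ]


# Hypothetical events using documentation-range IP addresses.
EVENTS = [
    {"ip": "203.0.113.77", "user_agent": "Mozilla/5.0 (X11; Linux x86_64)"},
    {"ip": "198.51.100.9", "user_agent": "Googlebot/2.1 (+http://www.google.com/bot.html)"},
]

print(sanitize(EVENTS))
```

Running the anonymization before events are stored means the full address never reaches reports or dashboards.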