Published on 2025-06-28T06:28:07Z

What is Experimental Design? Examples and Best Practices

Experimental design in analytics is the systematic process of planning, running, and analyzing controlled tests that compare different versions of a product, feature, or experience to determine which performs best. It involves defining clear hypotheses, identifying independent and dependent variables, establishing control and treatment groups, and applying statistical methods to judge whether observed differences are statistically significant or plausibly due to chance. A robust design minimizes bias and confounding factors, which yields reliable insights for data-driven decisions. Platforms like Google Analytics 4 and PlainSignal help with tracking and analyzing experiments. By following best practices (adequate sample size, proper randomization, and sufficient test duration), teams can optimize user experiences and business outcomes with more confidence.

Experimental design

A systematic approach to planning, running, and analyzing tests in analytics to derive statistically valid insights.

Introduction to Experimental Design

An overview of the fundamental principles and importance of experimental design in analytics.

-

Hypothesis formulation

Defining a clear, testable hypothesis that predicts how changes will affect user behavior or key metrics.

-

Null hypothesis

Assumes there is no effect or difference between variants.

-

Alternative hypothesis

Specifies the expected effect or difference to be tested.

-

-

Variable identification

Distinguishing between independent, dependent, and controlled variables to structure the experiment.

-

Independent variable

The element you manipulate, such as button color.

-

Dependent variable

The outcome measured, such as click-through rate.

-

Controlled variables

Factors kept constant to prevent confounding effects.

-

-

Randomization and control

Ensuring unbiased assignment of users to control and treatment groups.

-

Random assignment methods

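Techniques like hashing user IDs, which gives each user a stable, effectively random assignment. Avoid assigning groups by existing user attributes (such as region or signup date), because those attributes can correlate with the outcome and bias the comparison.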

-

Control group

A baseline group that experiences the original version.
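
As a rough illustration of hash-based assignment, the sketch below buckets a user ID deterministically, so the same user always sees the same variant. The function names, the choice of the FNV-1a hash, and the experiment key used as a salt are all assumptions made for this example; they are not part of any particular platform's API.

```javascript
// 32-bit FNV-1a hash over the string's UTF-16 code units.
// Hand-rolled so the sketch has no dependencies.
function fnv1a(text) {
  let hash = 0x811c9dc5;
  for (let i = 0; i < text.length; i++) {
    hash ^= text.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193) >>> 0;
  }
  return hash;
}

// Map a user to 'control' or 'treatment'.
// experimentKey (hypothetical) salts the hash so that different
// experiments split the same users independently.
function assignVariant(userId, experimentKey, treatmentShare = 0.5) {
  const bucket = fnv1a(experimentKey + ':' + userId) / 2 ** 32; // in [0, 1)
  return bucket < treatmentShare ? 'treatment' : 'control';
}

console.log(assignVariant('user-123', 'button-color-test'));
```

Deterministic hashing avoids storing an assignment table. A production system would more likely rely on a well-tested hash and verify that the resulting split matches the intended ratio.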

-

Types of Experimental Designs

Different structures of experiments suited for various testing needs and complexity levels.

-

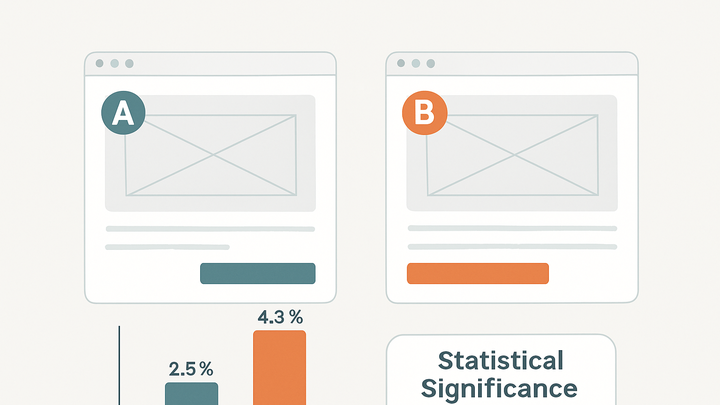

A/B testing

Compares two versions (A vs. B) to see which performs better.

-

Multivariate testing

Simultaneously tests multiple elements to identify the best combination.

-

Factorial design

Tests multiple factors and their interactions at different levels.
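
To make the idea of a full factorial design concrete, the sketch below enumerates every combination of factor levels. The helper name and the two factors are hypothetical and chosen only for illustration.

```javascript
// Build every combination of factor levels (a full factorial design).
function fullFactorial(factors) {
  return Object.entries(factors).reduce(
    (combos, [name, levels]) =>
      combos.flatMap((combo) =>
        levels.map((level) => ({ ...combo, [name]: level }))
      ),
    [{}]
  );
}

// Hypothetical factors, for illustration only.
const cells = fullFactorial({
  buttonColor: ['blue', 'red'],
  headline: ['short', 'long'],
});

console.log(cells.length); // 4 cells in a 2 x 2 design
console.log(cells);
```

The number of cells grows multiplicatively with each added factor, so each cell receives a smaller share of traffic. This is one reason factorial and multivariate tests usually need more traffic than a simple A/B test.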

Implementing Experiments with Analytics Tools

How to set up and run experiments using popular SaaS analytics platforms.

-

PlainSignal (cookie-free analytics)

Use PlainSignal to measure experiments without relying on cookies, which supports user privacy and can simplify compliance.

-

Setup tracking code

Include the following snippet in your HTML to start tracking:

<link rel="preconnect" href="//eu.plainsignal.com/" crossorigin />
<script defer data-do="yourwebsitedomain.com" data-id="0GQV1xmtzQQ" data-api="//eu.plainsignal.com" src="//cdn.plainsignal.com/plainsignal-min.js"></script>

-

Define event goals

Configure events, such as clicks or conversions, to measure experiment outcomes.

-

Segment users

Assign users randomly to control or treatment groups within the PlainSignal dashboard.

-

-

Google Analytics 4 (GA4)

Use GA4 to collect and analyze experiment data alongside a testing tool or your own assignment logic.

-

GA4 tracking setup

Add this snippet to enable GA4 tracking:

<script async src="https://www.googletagmanager.com/gtag/js?id=GA_MEASUREMENT_ID"></script>
<script>
  window.dataLayer = window.dataLayer || [];
  function gtag(){dataLayer.push(arguments);}
  gtag('js', new Date());
  gtag('config', 'GA_MEASUREMENT_ID');
</script>

-

Create experiments

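GA4 does not include a native ‘Experiments’ section for building tests, and Google Optimize was retired in September 2023. Set up the A/B or multivariate test in a testing tool that integrates with GA4, or in your own code, and pass each user's variant to GA4 with their events.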

-

Analyze results

Compare variants in GA4 reports or Explorations to gauge impact, and confirm statistical significance in your testing tool or with a separate statistical test.
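
One way to tie experiment data to GA4 reports is to send a custom event through the global gtag function from the snippet above when a user is assigned to a variant. The sketch below assumes that approach. The event name experiment_exposure and the parameters experiment_id and variant are hypothetical names chosen for this example, not reserved GA4 names, and custom parameters generally need to be registered as custom dimensions before they appear in reports.

```javascript
// Report which variant a user was exposed to.
// `send` defaults to the global gtag function defined by the GA4 snippet;
// it is injectable so the helper can be exercised without a browser.
function reportExposure(experimentId, variant, send = globalThis.gtag) {
  send('event', 'experiment_exposure', {
    experiment_id: experimentId, // hypothetical parameter name
    variant: variant,            // hypothetical parameter name
  });
}

// Usage on a page where the GA4 snippet has already loaded:
// reportExposure('button-color-test', 'treatment');
```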

-

Best Practices and Common Pitfalls

Recommendations to maximize the validity of your experiments and avoid common errors.

-

Sample size and statistical power

Calculate the minimum sample needed to detect meaningful differences and achieve reliable results.
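
As a minimal sketch of that calculation, the function below uses the common normal-approximation formula for comparing two proportions. It assumes a two-sided test at 5% significance with 80% power; the function name and the example rates are illustrative.

```javascript
// Approximate users needed per group to detect a change from
// baselineRate to expectedRate (two-sided alpha = 0.05, power = 0.80).
function sampleSizePerGroup(baselineRate, expectedRate) {
  const zAlpha = 1.96;  // critical value for two-sided alpha = 0.05
  const zBeta = 0.8416; // critical value for power = 0.80
  const variance =
    baselineRate * (1 - baselineRate) + expectedRate * (1 - expectedRate);
  const effect = expectedRate - baselineRate;
  return Math.ceil(((zAlpha + zBeta) ** 2 * variance) / effect ** 2);
}

// Hypothetical example: detecting a lift from 10% to 12%.
console.log(sampleSizePerGroup(0.10, 0.12));
```

With these example rates the result is roughly 3,800 users per group. Halving the detectable difference roughly quadruples the required sample, so the smallest effect worth detecting should be chosen deliberately.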

-

Test duration

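Run experiments long enough to reach the planned sample size and to cover regular variations in traffic and user behavior, typically at least one full week so that weekday and weekend patterns are both included.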
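
A simple way to estimate duration is to divide the total required sample by daily traffic and round up to whole weeks. The sketch below assumes every daily visitor enters the experiment; the function and parameter names are illustrative.

```javascript
// Estimate test duration in days, rounded up to full weeks so that
// each day of the week is represented equally.
function testDurationDays(perGroupSample, groups, dailyVisitors) {
  const rawDays = Math.ceil((perGroupSample * groups) / dailyVisitors);
  return Math.ceil(rawDays / 7) * 7;
}

// Hypothetical example: 3,839 users per group, 2 groups, 1,000 visitors per day.
console.log(testDurationDays(3839, 2, 1000)); // 14
```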

-

Avoiding bias

Prevent selection and measurement biases through proper randomization and consistent data collection.

-

Data privacy and compliance

Ensure experiments adhere to privacy laws and internal policies, especially when using user data.

Real-World Example: Landing Page Button Color Test

A step-by-step walkthrough of designing, implementing, and analyzing an A/B test for a landing page button.

-

Scenario setup

An e-commerce site wants to test whether a red ‘Buy Now’ button yields a higher click-through rate than a blue one.

-

Implementation in PlainSignal

Use PlainSignal’s event tracking to record clicks for each variant and ensure random user assignment.

-

Data analysis

Compare click-through rates, calculate statistical significance, and decide whether to adopt the new button color.
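
The analysis step can be sketched with a two-proportion z-test, one common choice for comparing click-through rates. The counts below are hypothetical and used only for illustration. The normal distribution is approximated with the Abramowitz-Stegun formula to keep the example dependency-free.

```javascript
// Abramowitz-Stegun approximation of the error function (formula 7.1.26).
function erf(x) {
  const sign = x < 0 ? -1 : 1;
  const ax = Math.abs(x);
  const t = 1 / (1 + 0.3275911 * ax);
  const poly =
    ((((1.061405429 * t - 1.453152027) * t + 1.421413741) * t -
      0.284496736) * t + 0.254829592) * t;
  return sign * (1 - poly * Math.exp(-ax * ax));
}

// Two-sided z-test for the difference between two click-through rates.
function twoProportionZTest(clicksA, usersA, clicksB, usersB) {
  const rateA = clicksA / usersA;
  const rateB = clicksB / usersB;
  const pooled = (clicksA + clicksB) / (usersA + usersB);
  const stdError = Math.sqrt(
    pooled * (1 - pooled) * (1 / usersA + 1 / usersB)
  );
  const z = (rateB - rateA) / stdError;
  const cdf = 0.5 * (1 + erf(Math.abs(z) / Math.SQRT2));
  return { rateA, rateB, z, pValue: 2 * (1 - cdf) };
}

// Hypothetical counts: blue button (A) vs. red button (B).
const result = twoProportionZTest(120, 1000, 150, 1000);
console.log(result.z.toFixed(2), result.pValue.toFixed(3));
```

A p-value below the chosen threshold (commonly 0.05) suggests the difference is unlikely to be due to chance alone. The decision to adopt the red button should also weigh the size of the lift, not only its significance.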