Published on 2025-06-22T04:33:43Z

What is Preprocessing in Analytics? Examples and Use Cases

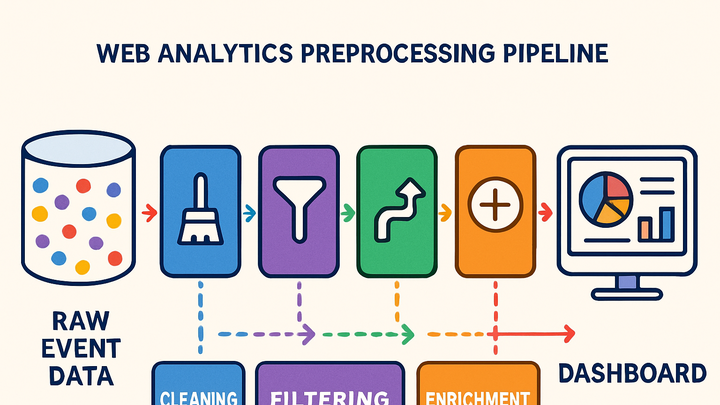

Preprocessing in analytics involves cleaning, transforming, and validating raw data collected from websites and apps before it’s used for reporting and analysis. This ensures that the data feeding into dashboards and models is accurate, consistent, and free from noise such as bot traffic or malformed events. Platforms like GA4 and PlainSignal support preprocessing in different ways: GA4 offers built-in filters and event modifications, while PlainSignal (a cookie-free analytics tool) focuses on straightforward data collection with minimal configuration. Typical preprocessing tasks include:

- Data cleaning (removing duplicates, correcting typos)

- Data transformation (normalizing formats, mapping values)

- Data enrichment (adding metadata like geolocation or user segments)

Standardizing data early helps organizations trust their metrics, speeds up queries, and supports compliance with privacy regulations like GDPR, for example by dropping or anonymizing personal identifiers before storage. Preprocessed data becomes the reliable foundation for dashboards, A/B testing, and predictive analytics.

Preprocessing

Cleaning and transforming raw analytics data for reliable, compliant reporting and analysis.

Definition and Purpose

This section explains what preprocessing is and why it’s a crucial first step in analytics workflows.

- Overview: Preprocessing in analytics is the initial phase in which raw data collected from websites, apps, and servers is prepared for downstream processing and analysis. It involves techniques to ensure data quality, consistency, and compliance before metrics are computed and visualized.

Key Steps in Data Preprocessing

Preprocessing involves several stages to clean and standardize data. Each step helps improve the reliability of analytics reports.

- Data validation: Ensures incoming events match predefined schemas and that required fields are present and correctly formatted.

  - Schema enforcement: Verifies that events conform to a specified schema, catching missing or unexpected parameters.

- Data cleaning: Removes invalid, duplicate, or bot-generated events to avoid skewed analysis.

  - Duplicate removal: Detects and discards repeated events triggered by user retries or network issues.

  - Bot filtering: Applies filters to exclude known bot or crawler traffic based on user agents and IP addresses.

- Data transformation: Converts and normalizes data formats, maps parameter names, and applies business rules.

  - Normalization: Standardizes date/time, currency, and unit formats for uniformity.

  - Parameter mapping: Renames or remaps event parameters to internal naming conventions.

- Data enrichment: Adds context such as geolocation, device type, or user segments to make data more actionable.
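The steps above can be sketched as a small Python pipeline. This is a minimal illustration under stated assumptions, not how GA4 or PlainSignal implement preprocessing: the event fields (`event_id`, `name`, `ts`, `user_agent`), the bot markers, the parameter map, and the `device_type` rule are all hypothetical, and IP-based bot filtering is left out.

```python
from datetime import datetime, timezone

# Hypothetical schema: required field name -> expected Python type.
REQUIRED_FIELDS = {"event_id": str, "name": str, "ts": str, "user_agent": str}
# Hypothetical substrings that mark a user agent as a bot or crawler.
BOT_MARKERS = ("bot", "crawler", "spider")
# Hypothetical mapping from incoming parameter names to internal names.
PARAM_MAP = {"pg": "page_path", "ref": "referrer"}


def is_valid(event):
    """Schema enforcement: every required field is present with the right type."""
    return all(isinstance(event.get(k), t) for k, t in REQUIRED_FIELDS.items())


def is_bot(event):
    """Bot filtering based on the user agent only."""
    ua = event["user_agent"].lower()
    return any(marker in ua for marker in BOT_MARKERS)


def normalize(event):
    """Rename mapped parameters and convert the timestamp to UTC ISO 8601."""
    out = {PARAM_MAP.get(k, k): v for k, v in event.items()}
    parsed = datetime.fromisoformat(event["ts"])  # raises ValueError if malformed
    if parsed.tzinfo is None:
        parsed = parsed.replace(tzinfo=timezone.utc)  # assumption: naive means UTC
    out["ts"] = parsed.astimezone(timezone.utc).isoformat()
    return out


def enrich(event):
    """Enrichment: derive a coarse device type from the user agent."""
    ua = event["user_agent"].lower()
    return {**event, "device_type": "mobile" if "mobile" in ua else "desktop"}


def preprocess(events):
    """Validate, clean (bots and duplicates), transform, and enrich events."""
    seen_ids = set()
    result = []
    for event in events:
        if not is_valid(event) or is_bot(event):
            continue
        if event["event_id"] in seen_ids:
            continue  # duplicate, e.g. from a client retry
        try:
            normalized = normalize(event)
        except ValueError:
            continue  # unparseable timestamp is treated as an invalid event
        seen_ids.add(event["event_id"])
        result.append(enrich(normalized))
    return result
```

Keeping each stage as its own function makes it easier to test rules in isolation and to document which transformation produced which field.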

Preprocessing in PlainSignal and GA4

Different analytics platforms offer unique preprocessing capabilities. Here’s how PlainSignal and Google Analytics 4 handle preprocessing.

- PlainSignal preprocessing: PlainSignal focuses on simplicity and privacy by default. It preprocesses data without relying on cookies and implements lightweight bot filtering and deduplication. To integrate PlainSignal, add the tracking snippet below:

  <link rel="preconnect" href="//eu.plainsignal.com/" crossorigin />
  <script defer data-do="yourwebsitedomain.com" data-id="0GQV1xmtzQQ" data-api="//eu.plainsignal.com" src="//cdn.plainsignal.com/plainsignal-min.js"></script>

- GA4 preprocessing: Google Analytics 4 offers preprocessing options in the admin interface, including event modification, exclusion filters (e.g., internal traffic), and key event (formerly conversion) setup. You can modify incoming events using the “Modify event” feature or use Google Tag Manager (GTM) custom tags for additional transformations.

Best Practices

Adopting best practices ensures that preprocessing scales with your data needs and maintains high data quality.

- Automate workflows: Use scripts or ETL tools to automate repetitive preprocessing tasks, reducing manual errors and freeing up analyst time.

- Monitor data quality: Implement dashboards and alerts to catch anomalies such as sudden drops in event volume or spikes in invalid data.

- Document processes: Maintain clear documentation of preprocessing rules and schema definitions so teams understand data lineage and transformations.
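The monitoring practice above could start as simply as comparing today's event count with a recent baseline. The following is a minimal sketch, assuming daily event counts are already available as a list; the function name `volume_alert` and the 50% threshold are illustrative choices, not settings from either platform.

```python
from statistics import mean


def volume_alert(daily_counts, today_count, max_drop=0.5):
    """Return True when today's volume fell more than max_drop below the baseline.

    daily_counts: event counts for recent days (the baseline window).
    max_drop: allowed fractional drop; 0.5 means a drop above 50% triggers an alert.
    """
    if not daily_counts:
        return False  # no baseline yet, so nothing to compare against
    baseline = mean(daily_counts)
    if baseline == 0:
        return False  # avoid dividing by zero on an empty baseline
    drop = (baseline - today_count) / baseline
    return drop > max_drop
```

The same pattern could be applied to the share of events rejected by validation, which would cover spikes in invalid data.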

Common Challenges

Despite best efforts, preprocessing can face hurdles that compromise data integrity if not addressed.

- Incomplete or missing data: Network failures or misconfigured tags can lead to gaps in data. Implement retry logic and tagging audits to prevent losses.

- Over-transformation: Excessive normalization or enrichment can strip data of its original context. Balance transformations to retain meaningful details.
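The retry logic mentioned for missing data can be sketched as exponential backoff around a send function. This is an illustration under stated assumptions: `send` is a hypothetical callable that raises `ConnectionError` on a network failure, and the attempt count and delays are arbitrary defaults.

```python
import time


def send_with_retry(send, event, attempts=3, base_delay=0.5, sleep=time.sleep):
    """Call send(event), retrying on ConnectionError with exponential backoff.

    Returns True if the event was delivered, False after the final failure.
    The sleep parameter is injectable so the delay can be skipped in tests.
    """
    for attempt in range(attempts):
        try:
            send(event)
            return True
        except ConnectionError:
            if attempt < attempts - 1:
                # Delay doubles each time: base_delay, 2 * base_delay, ...
                sleep(base_delay * 2 ** attempt)
    return False
```

Retries can deliver the same event more than once when a request succeeds but its response is lost, so this approach works best alongside duplicate removal keyed on an event identifier.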