Published on 2025-06-22T05:58:00Z

What is a Data Sink in Analytics? Examples & Best Practices

A data sink in analytics is the final destination where transformed and enriched event data is stored or consumed. It sits downstream of the data collection and processing stages. Examples of sinks include:

- Cloud data warehouses (e.g., Google BigQuery via GA4 BigQuery Export).

- Real-time analytics platforms (e.g., PlainSignal for cookie-free tracking).

- Data lakes or custom HTTP endpoints.

Choosing the right sink affects data accessibility, performance, cost, and compliance with data regulations. A properly configured sink keeps data flowing reliably, scales with volume, and helps meet privacy requirements.

Sink

Final destination for processed analytics data—e.g., BigQuery or PlainSignal—where events are stored or analyzed.

Overview of Data Sinks

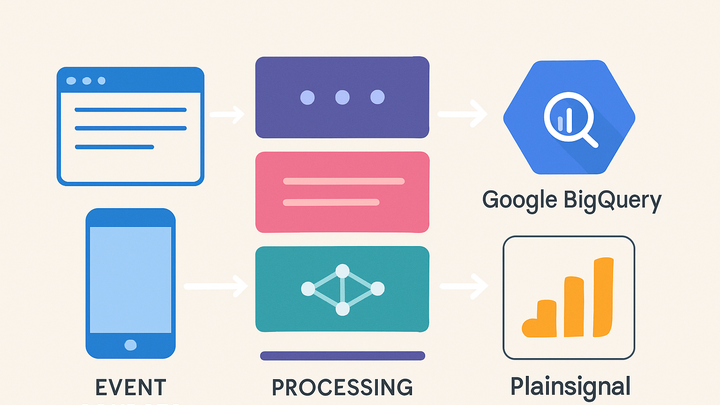

A data sink is the endpoint in an analytics pipeline where events, logs, and metrics are stored or consumed. It contrasts with a data source, which generates raw data. Understanding sinks helps ensure that data is properly captured, retained, and made available for downstream analysis.

Definition and role

A sink is the final stage of a data pipeline: the component that receives processed data and stores it or makes it available for consumption.

Data pipeline context

Sinks sit at the end of an end-to-end data flow, after event collection and processing:


Event emission

Raw events emitted by websites or apps are collected by tracking code or SDKs.


Processing layer

Data is enriched or transformed via ETL or streaming systems before reaching the sink.


Storage or analysis

The sink holds data for querying, reporting, or further processing by analytics tools.
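The three stages above can be sketched as a tiny Python pipeline. This is a minimal illustration under stated assumptions, not any product's API: `Sink`, `InMemorySink`, `enrich`, and `run_pipeline` are hypothetical names, and the in-memory list stands in for a real destination such as a warehouse table.

```python
from dataclasses import dataclass, field
from typing import Any, Iterable, Protocol

Event = dict[str, Any]


class Sink(Protocol):
    """Anything that can accept a batch of processed events."""

    def write(self, events: list[Event]) -> None: ...


@dataclass
class InMemorySink:
    """Hypothetical sink that keeps events in a list (stand-in for a real store)."""

    rows: list[Event] = field(default_factory=list)

    def write(self, events: list[Event]) -> None:
        self.rows.extend(events)


def enrich(event: Event) -> Event:
    """Processing layer: normalize the event name and mark the event as processed."""
    return {**event, "event_name": event["event_name"].strip().lower(), "processed": True}


def run_pipeline(raw_events: Iterable[Event], sink: Sink, batch_size: int = 2) -> None:
    """Emit -> process -> deliver, flushing processed events to the sink in batches."""
    batch: list[Event] = []
    for raw in raw_events:
        batch.append(enrich(raw))
        if len(batch) >= batch_size:
            sink.write(batch)
            batch = []
    if batch:  # flush whatever is left over
        sink.write(batch)


if __name__ == "__main__":
    demo_sink = InMemorySink()
    run_pipeline(
        [{"event_name": " Page_View "}, {"event_name": "click"}, {"event_name": "SignUp"}],
        demo_sink,
    )
    print([row["event_name"] for row in demo_sink.rows])
```

Keeping the sink behind a small `write` interface means the processing code does not change when the destination does, whether that is a warehouse, a data lake, or an HTTP endpoint.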


Why Sinks Matter in Analytics

The choice of sink affects data accessibility, query performance, cost, and compliance. Sinks also differ in the balance they strike between real-time insight and long-term storage and analysis.


Data accessibility

Sinks determine how quickly and flexibly analysts can query and visualize data.

Scalability and cost

Sinks differ in how they perform at scale and in how they charge for it. With on-demand pricing, for example, BigQuery bills storage separately from the bytes each query processes.


Privacy and compliance

Some sinks provide features like data masking or retention policies to help meet regulatory requirements.
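Where a sink offers no built-in masking, a comparable step can run in the processing layer before delivery. The sketch below relies on several hypothetical choices: the field names (`email`, `phone`, `user_id`, `ip`), a salted SHA-256 hash for pseudonymization, and IP truncation to a /24 (IPv4) or /48 (IPv6) prefix. Deciding which fields and rules your regulations actually require is outside its scope.

```python
import hashlib
import ipaddress
from typing import Any

DROP_FIELDS = {"email", "phone"}  # hypothetical: fields never sent to the sink
HASH_FIELDS = {"user_id"}         # hypothetical: fields replaced by a salted hash
SALT = b"rotate-me"               # hypothetical salt; load real salts from a secret store


def truncate_ip(ip: str) -> str:
    """Zero the host part: keep a /24 prefix for IPv4 or a /48 prefix for IPv6."""
    addr = ipaddress.ip_address(ip)
    prefix = 24 if addr.version == 4 else 48
    return str(ipaddress.ip_network(f"{ip}/{prefix}", strict=False).network_address)


def mask_event(event: dict[str, Any]) -> dict[str, Any]:
    """Return a copy of the event with PII dropped, hashed, or truncated."""
    masked: dict[str, Any] = {}
    for key, value in event.items():
        if key in DROP_FIELDS:
            continue  # never forward these fields
        if key in HASH_FIELDS:
            masked[key] = hashlib.sha256(SALT + str(value).encode()).hexdigest()
        elif key == "ip":
            masked[key] = truncate_ip(value)
        else:
            masked[key] = value
    return masked
```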

Common Sink Types and Integrations

Analytics sinks come in various forms, each suited to different use cases—from batch warehousing to real-time dashboards.


Cloud data warehouses

Examples include Google BigQuery (via GA4 export), Amazon Redshift, and Snowflake for large-scale analytics.


Real-time analytics platforms

Services like PlainSignal offer lightweight, cookie-free real-time analytics for immediate insights.


Custom destinations and data lakes

Data can be forwarded to S3, Azure Blob Storage, or custom HTTP APIs for bespoke processing or archival.
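For data lakes, a common layout is newline-delimited JSON files under date-partitioned folders such as `dt=2025-06-22/`, which many query engines can use to skip irrelevant days. This sketch writes to the local filesystem as a stand-in for S3 or Azure Blob Storage. The `write_partitioned` helper and the `timestamp` field (Unix seconds, UTC) are assumptions made for illustration.

```python
import json
import tempfile
import uuid
from collections import defaultdict
from datetime import datetime, timezone
from pathlib import Path
from typing import Any


def write_partitioned(events: list[dict[str, Any]], root: Path) -> list[Path]:
    """Write events as NDJSON files grouped into dt=YYYY-MM-DD partitions.

    Assumes each event carries a hypothetical `timestamp` field in Unix seconds (UTC).
    """
    by_day: dict[str, list[dict[str, Any]]] = defaultdict(list)
    for event in events:
        day = datetime.fromtimestamp(event["timestamp"], tz=timezone.utc).strftime("%Y-%m-%d")
        by_day[day].append(event)

    written: list[Path] = []
    for day, day_events in sorted(by_day.items()):
        partition = root / f"dt={day}"
        partition.mkdir(parents=True, exist_ok=True)
        # A unique file name per batch avoids overwriting earlier writes to the same day.
        path = partition / f"part-{uuid.uuid4().hex}.ndjson"
        with path.open("w", encoding="utf-8") as fh:
            for event in day_events:
                fh.write(json.dumps(event, separators=(",", ":")) + "\n")
        written.append(path)
    return written


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        for p in write_partitioned([{"timestamp": 1750571880, "event_name": "page_view"}], Path(tmp)):
            print(p.relative_to(tmp))
```

An object-store upload would replace the local file writes, and the partition layout could stay the same.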

Examples of Sinks in GA4 and PlainSignal

Illustrative configurations showing how to route analytics data to sinks in Google Analytics 4 and PlainSignal.

GA4 BigQuery export sink

Google Analytics 4 can export raw event data to Google BigQuery. In the GA4 UI, go to Admin > Product links > BigQuery links and link your Google Cloud project. The daily export writes one table per day, while the optional streaming export updates an intraday table continuously for near real-time querying.


Setup steps

- Go to GA4 Admin > Product links > BigQuery links

- Choose the Google Cloud project to link (you need sufficient permissions on it)

- Select data streams and set export frequency
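Once the export is running, GA4 writes daily tables named `events_YYYYMMDD` into a dataset named `analytics_<property_id>`, and the streaming option adds `events_intraday_YYYYMMDD` tables. The sketch below builds a GoogleSQL query that counts events and users per event name over a date range. `PROJECT_ID` and `DATASET_ID` are placeholders. Running the query assumes the `google-cloud-bigquery` package and valid credentials, so that import is deferred into `run`.

```python
from datetime import date

# Placeholders: replace with your own project and GA4 export dataset.
PROJECT_ID = "my-project"
DATASET_ID = "analytics_123456789"


def events_by_name_sql(start: date, end: date, project: str = PROJECT_ID, dataset: str = DATASET_ID) -> str:
    """Build a query over the daily GA4 export tables between start and end (inclusive)."""
    if start > end:
        raise ValueError("start must not be after end")
    # The events_* wildcard also matches events_intraday_* tables, but their
    # suffixes ("intraday_...") sort after any all-digit date string, so the
    # BETWEEN filter keeps only the daily tables.
    return f"""
SELECT event_name, COUNT(*) AS events, COUNT(DISTINCT user_pseudo_id) AS users
FROM `{project}.{dataset}.events_*`
WHERE _TABLE_SUFFIX BETWEEN '{start:%Y%m%d}' AND '{end:%Y%m%d}'
GROUP BY event_name
ORDER BY events DESC
""".strip()


def run(sql: str) -> list[tuple]:
    """Execute the query with the google-cloud-bigquery client (needs credentials)."""
    from google.cloud import bigquery  # deferred: only required when actually querying

    client = bigquery.Client(project=PROJECT_ID)
    return [tuple(row.values()) for row in client.query(sql).result()]
```

Because the dates are formatted from `date` objects, string interpolation is safe here. For user-supplied filters, BigQuery query parameters would be the safer choice.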


PlainSignal direct sink

PlainSignal provides a direct, cookie-free sink for real-time analytics. Install the tracking script on your site to send events directly to PlainSignal:


Tracking code

```html
<link rel="preconnect" href="//eu.plainsignal.com/" crossorigin />
<script defer data-do="yourwebsitedomain.com" data-id="0GQV1xmtzQQ" data-api="//eu.plainsignal.com" src="//cdn.plainsignal.com/plainsignal-min.js"></script>
```


Best Practices for Managing Sinks

Follow these guidelines to maintain reliable, performant, and secure sink configurations in your analytics stack.


Maintain schema consistency

Use schema versioning or management tools to track event definitions and prevent breaking changes.
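A lightweight form of schema versioning is a registry of event definitions keyed by name and version, checked before events reach the sink. The registry layout, the `schema_version` field, and the `purchase` definitions below are hypothetical. In production, a JSON Schema validator or a dedicated schema registry would typically take this role.

```python
from typing import Any

# Hypothetical registry: (event_name, version) -> required fields and their types.
SCHEMAS: dict[tuple[str, int], dict[str, type]] = {
    ("purchase", 1): {"order_id": str, "value": float},
    # v2 adds a currency field; v1 events stay valid under their own version.
    ("purchase", 2): {"order_id": str, "value": float, "currency": str},
}


def validate(event: dict[str, Any]) -> list[str]:
    """Return a list of problems; an empty list means the event matches its schema."""
    key = (event.get("event_name"), event.get("schema_version"))
    schema = SCHEMAS.get(key)
    if schema is None:
        return [f"unknown schema {key}"]
    problems: list[str] = []
    for field_name, expected in schema.items():
        if field_name not in event:
            problems.append(f"missing {field_name}")
        elif not isinstance(event[field_name], expected):
            problems.append(f"{field_name} should be {expected.__name__}")
    return problems
```

Keeping older versions registered lets producers move to a new version gradually instead of breaking on the day it ships.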


Monitor delivery and latency

Implement monitoring and alerts for sink failures and data freshness to detect issues early.
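A basic data-freshness check compares the newest event timestamp in a sink with the current time. In this sketch, fetching that timestamp from the sink is left out. The 30-minute threshold is an assumption: a streaming sink might warrant minutes, while a daily batch export would need more than a day.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional


@dataclass
class FreshnessResult:
    lag: timedelta  # age of the newest event in the sink
    stale: bool     # True when the lag exceeds the allowed maximum


def check_freshness(
    latest_event_at: datetime,
    now: Optional[datetime] = None,
    max_lag: timedelta = timedelta(minutes=30),  # assumed threshold; tune per sink
) -> FreshnessResult:
    """Compare the newest event timestamp in the sink with the current time.

    Both datetimes must be timezone-aware (UTC) so the subtraction is valid.
    """
    if now is None:
        now = datetime.now(timezone.utc)
    lag = now - latest_event_at
    return FreshnessResult(lag=lag, stale=lag > max_lag)


if __name__ == "__main__":
    # In practice latest_event_at would come from the sink, e.g. a MAX(timestamp) query.
    latest = datetime(2025, 6, 22, 5, 0, tzinfo=timezone.utc)
    result = check_freshness(latest, now=datetime(2025, 6, 22, 5, 58, tzinfo=timezone.utc))
    if result.stale:
        # Hypothetical alert hook: replace print with a pager, chat, or email integration.
        print(f"Sink is stale: newest event is {result.lag} old")
```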


Optimize retention and costs

Configure data lifecycle policies and choose appropriate storage tiers to manage costs without sacrificing performance.
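Lifecycle policies usually come down to rules keyed on data age. The sketch below sorts date partitions into hot, cold, and delete tiers. The tier names and the 30-day and 400-day cutoffs are placeholders. In practice the same rules are usually configured in the storage service itself, for example S3 lifecycle rules or BigQuery partition expiration, rather than in application code.

```python
from datetime import date
from enum import Enum


class Tier(Enum):
    HOT = "hot"        # frequently queried, standard storage
    COLD = "cold"      # rarely queried, cheaper archival storage
    DELETE = "delete"  # past the retention period


# Hypothetical cutoffs in days; derive real values from query patterns and legal retention rules.
HOT_DAYS = 30
RETENTION_DAYS = 400


def tier_for(partition_day: date, today: date) -> Tier:
    """Map a partition's age in days to a storage action."""
    age = (today - partition_day).days
    if age >= RETENTION_DAYS:
        return Tier.DELETE
    if age >= HOT_DAYS:
        return Tier.COLD
    return Tier.HOT


def plan(partitions: list[date], today: date) -> dict[Tier, list[date]]:
    """Group partitions by the action a lifecycle job would take."""
    result: dict[Tier, list[date]] = {tier: [] for tier in Tier}
    for day in sorted(partitions):
        result[tier_for(day, today)].append(day)
    return result
```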