Published on 2025-06-28T06:56:49Z

What Is Split Testing in Analytics?

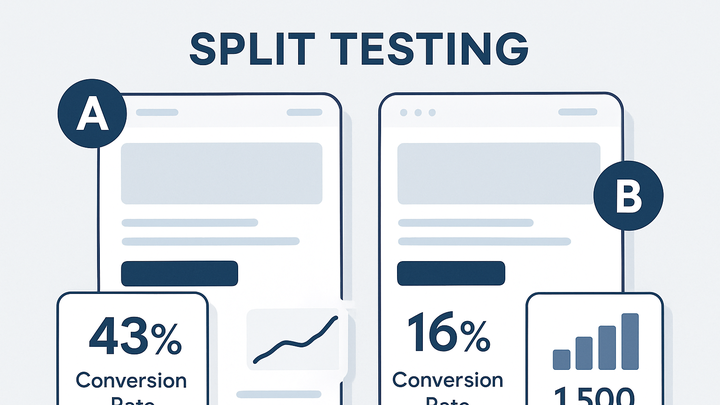

Split testing, also known as A/B testing, is a method used in analytics to compare two or more versions of a webpage, email, or app screen to determine which variant performs better. By randomly splitting your audience into segments and exposing each group to a different variant, you collect data on metrics such as click-through rates, conversions, and user engagement. This approach reduces guesswork in optimization by basing decisions on statistically significant results rather than intuition. Analytics solutions like PlainSignal provide a lightweight, cookie-free way to track experiments, while GA4 (Google Analytics 4) can support split testing through custom event tracking; Google Optimize, which previously integrated with GA4, was discontinued in September 2023. Successful split testing requires careful experiment design, clear hypotheses, sufficient traffic, and attention to test duration and statistical validity.

Split testing

Split testing compares website or app variants to optimize performance with analytics tools like PlainSignal and GA4.

Understanding Split Testing

Split testing is the practice of comparing two or more versions of digital content to see which performs better according to predefined metrics. It helps organizations make data-driven decisions by isolating individual changes and measuring their impact.

-

Definition

Split testing, or A/B testing, involves showing different variants of a webpage or element to randomly assigned user groups and measuring which variant performs best on a chosen metric.
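Keeping the groups separate requires each visitor to get a stable, random-looking assignment. Below is a minimal sketch, assuming you already have a stable user identifier (for example, a first-party ID your site generates). `fnv1a32`, `fmix32`, and `assignVariant` are hypothetical helper names, not part of any analytics tool's API. Because the experiment ID is hashed together with the user ID, the same visitor always lands in the same variant without any stored assignment.

```javascript
// FNV-1a (32-bit): a short, non-cryptographic string hash.
function fnv1a32(str) {
  let h = 0x811c9dc5; // FNV offset basis
  for (let i = 0; i < str.length; i++) {
    h ^= str.charCodeAt(i);
    h = Math.imul(h, 0x01000193); // multiply by the FNV prime, mod 2^32
  }
  return h >>> 0; // as an unsigned 32-bit integer
}

// MurmurHash3's fmix32 finalizer: spreads the input bits across all output bits.
function fmix32(h) {
  h ^= h >>> 16;
  h = Math.imul(h, 0x85ebca6b);
  h ^= h >>> 13;
  h = Math.imul(h, 0xc2b2ae35);
  h ^= h >>> 16;
  return h >>> 0;
}

// Deterministically map (experimentId, userId) to one of `variants` with equal weight.
// Salting with the experiment ID keeps assignments independent across experiments.
function assignVariant(experimentId, userId, variants = ["A", "B"]) {
  const h = fmix32(fnv1a32(`${experimentId}:${userId}`));
  return variants[Math.floor((h / 4294967296) * variants.length)];
}

console.log(assignVariant("EXP123", "user-42")); // same output on every run
```

The finalizer step matters here. Raw FNV-1a has weakly mixed low bits, so mapping its output directly to two buckets would group users by a simple parity of their ID characters.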

-

Key terminology

Understanding the core components of split testing ensures accurate implementation and analysis.

-

Variant

A version of the content being tested, which includes specific changes from the original.

-

Control

The original or baseline version used for comparison against other variants.

-

Metric

A measurable outcome tracked during a test, such as clicks, conversions, or engagement rate.

-

Benefits of Split Testing

By running split tests, teams can optimize user experiences, validate hypotheses with real data, and improve key performance indicators without relying on guesswork.

-

Data-driven decisions

Split testing provides empirical evidence on what works best, reducing reliance on intuition.

-

Improved conversion rates

By identifying high-performing variants, businesses can systematically increase sign-ups, sales, or other goals.
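The arithmetic behind a higher conversion rate is simple: divide conversions by visitors for each arm, then compute the variant's relative lift over the control. The minimal sketch below uses made-up visitor and conversion counts for illustration, not real results, and its function names are hypothetical.

```javascript
// Conversion rate for one arm of the test: conversions / visitors.
function conversionRate({ visitors, conversions }) {
  if (visitors <= 0) throw new Error("visitors must be positive");
  return conversions / visitors;
}

// Relative lift of the variant over the control (0.25 means +25%).
function relativeLift(control, variant) {
  const base = conversionRate(control);
  if (base === 0) throw new Error("control conversion rate is zero; lift is undefined");
  return (conversionRate(variant) - base) / base;
}

// Hypothetical counts for illustration only.
const control = { visitors: 5000, conversions: 200 }; // 4.0%
const variant = { visitors: 5000, conversions: 250 }; // 5.0%
console.log(relativeLift(control, variant)); // about 0.25, i.e. a 25% relative lift
```

A lift of this size still needs a significance check before you declare a winner, because random variation alone can produce it.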

-

Enhanced user experience

Testing different designs or copy helps tailor the experience to actual user preferences.

Implementing Split Testing with Analytics Tools

Different analytics platforms offer distinct ways to set up and track split tests. Below are examples using PlainSignal and GA4.

-

PlainSignal implementation

PlainSignal is a simple, cookie-free analytics tool that can be used to track split tests by sending custom events for each variant.

-

Tracking code

```html
<!-- PlainSignal base tracking snippet; replace the example data-do value with your own domain -->
<link rel="preconnect" href="//eu.plainsignal.com/" crossorigin />
<script defer data-do="yourwebsitedomain.com" data-id="0GQV1xmtzQQ" data-api="//eu.plainsignal.com" src="//cdn.plainsignal.com/plainsignal-min.js"></script>
```
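The snippet above only loads the tracker. Recording which variant a visitor saw is a separate step. The sketch below assumes your analytics tool exposes a function that records a named custom event, passed in here as `track`. That name and signature are placeholders, so check the PlainSignal documentation for the actual call. The sketch encodes the experiment and variant in the event name, which is an assumption chosen to avoid relying on event properties, and it sends the exposure only once per experiment per page load.

```javascript
// Returns a function that records one exposure event per experiment.
// `track` is a placeholder for your analytics tool's custom-event call (assumption).
function createExposureLogger(track) {
  const seen = new Set(); // experiment IDs already recorded on this page load
  return function logExposure(experimentId, variant) {
    if (seen.has(experimentId)) return false; // avoid double-counting on re-renders
    seen.add(experimentId);
    // Hypothetical naming scheme, e.g. "exp_EXP123_B"
    track(`exp_${experimentId}_${variant}`);
    return true;
  };
}

// Usage with a stand-in tracker that just logs the event name:
const logExposure = createExposureLogger((name) => console.log("event:", name));
logExposure("EXP123", "B"); // prints "event: exp_EXP123_B"
logExposure("EXP123", "B"); // ignored: already recorded
```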

-

-

GA4 implementation

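Google Analytics 4 can support split tests by recording which variant each visitor saw, typically with custom gtag.js events whose parameters identify the experiment and the variant. Google Optimize, which used to provide this integration, was discontinued in September 2023, so the variant assignment itself has to come from your own code or a third-party testing tool.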

-

Sample GA4 snippet

```html
<!-- Google Analytics GA4 Configuration -->
<script async src="https://www.googletagmanager.com/gtag/js?id=G-XXXXXXXXXX"></script>
<script>
  window.dataLayer = window.dataLayer || [];
  function gtag(){dataLayer.push(arguments);}
  gtag('js', new Date());
  gtag('config', 'G-XXXXXXXXXX');

  // Experiment tracking event: records which variant this visitor sees.
  // The variant value should come from your assignment logic, not be hard-coded.
  gtag('event', 'experiment_start', {
    'experiment_id': 'EXP123',
    'variant': 'A'
  });
</script>
```
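The snippet above hard-codes `'variant': 'A'`. The minimal sketch below chooses the variant at random once, persists it so returning visitors stay in the same group, and then sends the same `experiment_start` event. `getOrAssignVariant`, `startExperiment`, and the `exp_<id>` storage key are hypothetical names. `storage` is any object with `getItem`/`setItem` (such as `window.localStorage`), and `gtagFn` is the `gtag` function defined by the GA4 snippet. GA4 shows custom parameters like `experiment_id` and `variant` in standard reports only after you register them as custom dimensions.

```javascript
// Return the stored variant for this experiment, or pick one at random and store it.
function getOrAssignVariant(storage, experimentId, variants, rng = Math.random) {
  const key = `exp_${experimentId}`; // hypothetical storage key
  const saved = storage.getItem(key);
  if (saved !== null && variants.includes(saved)) return saved; // returning visitor
  const variant = variants[Math.floor(rng() * variants.length)]; // uniform random pick
  storage.setItem(key, variant);
  return variant;
}

// Send the experiment_start event with the visitor's actual variant.
function startExperiment(gtagFn, storage, experimentId, variants) {
  const variant = getOrAssignVariant(storage, experimentId, variants);
  gtagFn("event", "experiment_start", { experiment_id: experimentId, variant: variant });
  return variant;
}

// In the browser, after the GA4 snippet has defined gtag:
// startExperiment(gtag, window.localStorage, "EXP123", ["A", "B"]);
```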

-

Best Practices for Effective Split Testing

Adhere to these guidelines to ensure your split tests yield reliable and actionable insights.

-

Define clear hypotheses

Formulate a testable statement predicting how a change will impact metrics.

-

Ensure statistical significance

Decide the required sample size before launch and collect at least that much data. With too few visitors, both false positives and false negatives become more likely.
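For binary metrics such as conversion, one common frequentist check is the pooled two-proportion z-test. The sketch below is illustrative and does not replace a statistics library or your testing tool's own method; some tools use sequential or Bayesian approaches instead. The normal CDF uses the Abramowitz and Stegun 7.1.26 approximation to erf, which is accurate to about 1.5e-7. The counts are made-up example values.

```javascript
// Standard normal CDF, via the Abramowitz and Stegun 7.1.26 approximation of erf.
function normalCdf(z) {
  const x = Math.abs(z) / Math.SQRT2;
  const t = 1 / (1 + 0.3275911 * x);
  const poly = t * (0.254829592 + t * (-0.284496736 + t * (1.421413741 +
    t * (-1.453152027 + t * 1.061405429))));
  const erf = 1 - poly * Math.exp(-x * x);
  return z >= 0 ? 0.5 * (1 + erf) : 0.5 * (1 - erf);
}

// Pooled two-proportion z-test: does the variant's conversion rate differ from the control's?
function twoProportionZTest(control, variant) {
  const p1 = control.conversions / control.visitors;
  const p2 = variant.conversions / variant.visitors;
  const pooled = (control.conversions + variant.conversions) /
    (control.visitors + variant.visitors);
  const se = Math.sqrt(pooled * (1 - pooled) * (1 / control.visitors + 1 / variant.visitors));
  if (se === 0) throw new Error("no variation in the data; test is undefined");
  const z = (p2 - p1) / se;
  const pValue = 2 * (1 - normalCdf(Math.abs(z))); // two-sided
  return { z, pValue };
}

// Illustrative counts only: 200/5000 (4.0%) vs 250/5000 (5.0%).
const result = twoProportionZTest(
  { visitors: 5000, conversions: 200 },
  { visitors: 5000, conversions: 250 },
);
console.log(result.z.toFixed(2), result.pValue.toFixed(3));
```

With these example numbers, z is about 2.41 and the two-sided p-value is about 0.016, which is below the conventional 0.05 threshold.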

-

Test one variable at a time

Isolate single elements like headlines or button colors to attribute changes accurately.

-

Monitor test duration

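Avoid premature conclusions: commit to a duration or sample size before launch and let the test run its full course, ideally in whole weeks so that both weekday and weekend behavior are represented. Stopping as soon as one variant looks like a winner ("peeking") inflates the false-positive rate.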
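One way to fix the duration in advance is to estimate the sample size needed per variant and divide by your traffic. The minimal sketch below uses the standard normal-approximation formula for comparing two proportions. It hard-codes the z-values for a two-sided α = 0.05 (1.959964) and 80% power (0.841621). The baseline rate, target rate, and daily traffic are hypothetical inputs, and rounding up to whole weeks is a design choice that covers weekly traffic cycles.

```javascript
const Z_ALPHA = 1.959964; // two-sided alpha = 0.05
const Z_BETA = 0.841621;  // power = 0.80

// Visitors needed per variant to detect a change from rate p1 to rate p2:
// n = (z_alpha + z_beta)^2 * (p1(1 - p1) + p2(1 - p2)) / (p2 - p1)^2
function sampleSizePerVariant(p1, p2) {
  if (p1 === p2) throw new Error("p1 and p2 must differ");
  const variance = p1 * (1 - p1) + p2 * (1 - p2);
  return Math.ceil(((Z_ALPHA + Z_BETA) ** 2 * variance) / (p2 - p1) ** 2);
}

// Days needed when daily traffic is split evenly across `variants` groups,
// rounded up to whole weeks so every weekday is represented equally.
function testDurationDays(p1, p2, dailyVisitors, variants = 2) {
  const total = sampleSizePerVariant(p1, p2) * variants;
  const days = Math.ceil(total / dailyVisitors);
  return Math.ceil(days / 7) * 7;
}

// Hypothetical inputs: 4% baseline, hoping to detect 5%, 1,000 visitors per day.
console.log(sampleSizePerVariant(0.04, 0.05), testDurationDays(0.04, 0.05, 1000));
```

With these inputs, the estimate comes to about 6,740 visitors per variant, or 14 days of traffic.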