Published on 2025-06-22T06:11:35Z

What is Statistical Significance? Examples for Statistical Significance

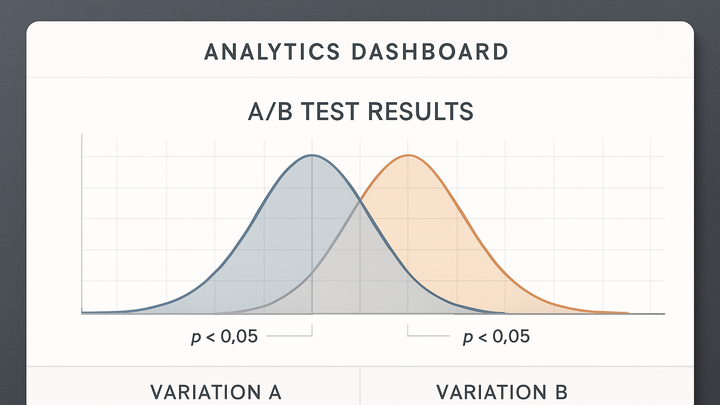

In analytics, statistical significance helps determine whether observed differences or patterns in your data reflect real effects or could plausibly be explained by chance. When you run experiments such as A/B tests, a statistically significant result gives you grounds to believe a change had a real impact. The p-value behind that judgment measures how likely an effect at least as large as the one you observed would be if there were actually no difference between variants. The concept relies on p-values, confidence levels, and sample sizes to guide decisions and to avoid overinterpreting random fluctuations. With data collected through tools like GA4 or PlainSignal, analysts can run statistical tests to support data-driven strategies. Understanding statistical significance reduces the risk of shipping features based on noise and makes outcomes more robust and repeatable.

Statistical significance

Statistical significance indicates whether observed analytics results would be unlikely to arise by chance alone if there were no real effect, which helps guide data-driven decisions.

Why Statistical Significance Matters

Statistical significance is crucial in analytics because it helps distinguish meaningful results from random noise. Without it, teams might act on patterns that occur purely by chance, leading to wasted resources and misguided strategies.

- Decision confidence

Declaring a result statistically significant means it cleared a threshold set in advance (e.g., a 95% confidence level, or alpha = 0.05): data this extreme would be unlikely if there were no real effect. That supports stronger, data-backed decisions.

- Resource optimization

By focusing on statistically significant changes, organizations avoid investing in variations that do not reliably improve user experience or key metrics.

How It’s Calculated

Calculating statistical significance involves comparing observed data against a null hypothesis. Commonly, you compute a p-value and compare it to a significance level (alpha) to decide whether to reject the null hypothesis.
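To make the p-value and alpha comparison concrete, here is a minimal sketch for a conversion-rate A/B test. It assumes a pooled two-proportion z-test, one common choice for comparing two conversion rates (the text does not prescribe a specific test). The function name and the visitor and conversion counts are hypothetical.

```python
from math import erfc, sqrt


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for H0: both variants share one conversion rate."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Under H0 the rates are equal, so pool both variants to estimate that common rate.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / se
    # Probability of a |z| at least this large if H0 were true: 2 * (1 - Phi(|z|)).
    p_value = erfc(abs(z) / sqrt(2))
    return z, p_value


ALPHA = 0.05  # significance level, fixed before the test starts

# Hypothetical counts: 10,000 visitors per variant.
z, p = two_proportion_z_test(conv_a=500, n_a=10_000, conv_b=570, n_b=10_000)
print(f"z = {z:.2f}, p = {p:.4f}")
print("reject H0" if p < ALPHA else "fail to reject H0")
```

With these made-up counts (5.0% vs. 5.7% conversion), z is about 2.20 and the two-sided p-value about 0.028, so the null hypothesis is rejected at alpha = 0.05. The normal approximation behind this test works best when each variant has a reasonable number of both conversions and non-conversions.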

- P-value

The p-value indicates the probability of observing your data (or something more extreme) under the assumption that the null hypothesis is true.

- Alpha (significance level)

Alpha is the threshold (commonly 0.05) below which the p-value must fall to declare significance. It is also the false-positive rate you accept: lowering alpha reduces false positives but, for a fixed sample size, increases false negatives.

- Sample size

Larger sample sizes increase test power, making it easier to detect smaller but real effects; insufficient samples can lead to inconclusive results.
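Because sample size drives power, it is usually fixed before a test starts. This is a minimal sketch that assumes a two-sided test on conversion rates and the standard normal-approximation formula for two proportions. The function name, baseline rate, target rate, and 80% power are hypothetical planning inputs, not values from this article.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variant(baseline, target, alpha=0.05, power=0.8):
    """Approximate visitors needed per variant to detect a change from baseline to target.

    Normal-approximation formula for a two-sided, two-proportion test:
    n = (z_{1-alpha/2} + z_{power})^2 * (p1(1-p1) + p2(1-p2)) / (p2 - p1)^2
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value, about 1.96 for alpha = 0.05
    z_power = NormalDist().inv_cdf(power)          # about 0.84 for 80% power
    variance = baseline * (1 - baseline) + target * (1 - target)
    effect = target - baseline
    return ceil((z_alpha + z_power) ** 2 * variance / effect ** 2)


# Hypothetical goal: detect a lift from 5% to 6% conversion.
print(sample_size_per_variant(0.05, 0.06))
# Halving the detectable lift roughly quadruples the required sample.
print(sample_size_per_variant(0.05, 0.055))
```

For a 5% baseline and a 6% target, this works out to about 8,155 visitors per variant. Detecting smaller effects, or demanding more power, quickly raises that number.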

Examples in GA4 and PlainSignal

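GA4 Example: GA4 does not calculate p-values for A/B tests on its own. Google Optimize, which provided experiment reporting alongside Google Analytics, was discontinued in 2023. Use GA4 to collect per-variant data, then run the significance test in your A/B testing platform or a statistical tool.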

PlainSignal Example: PlainSignal offers simple, cookie-free analytics. To start collecting the data you will later test for significance, embed the following code:

```html
<!-- Place in your site's <head>; replace yourwebsitedomain.com with your own domain. -->
<link rel="preconnect" href="//eu.plainsignal.com/" crossorigin />
<script defer data-do="yourwebsitedomain.com" data-id="0GQV1xmtzQQ" data-api="//eu.plainsignal.com" src="//cdn.plainsignal.com/plainsignal-min.js"></script>
```

PlainSignal then collects real-time metrics which you can export for significance testing in your favorite statistical tool.

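- GA4 experiment reports

Have your A/B testing tool send the variant each user saw to GA4 (for example, as an event parameter registered as a custom dimension). Compare variants in standard reports or Explorations, then export per-variant users and conversions to compute p-values and confidence intervals.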

- PlainSignal setup

Insert the JavaScript snippet above into your site’s head to start tracking. PlainSignal’s dashboard exports CSVs for offline significance analysis.
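Once you have per-variant counts from an export such as PlainSignal's CSV, a confidence interval for the difference in conversion rates shows both whether a change is significant and how large it plausibly is. This sketch assumes a hypothetical CSV with `variant`, `visitors`, and `conversions` columns. The real export's layout may differ, so adjust the column names to match it. The function names are illustrative, and the sketch uses a Wald interval, which is one simple choice among several.

```python
import csv
import io
from math import sqrt
from statistics import NormalDist

# Hypothetical export contents; the column names are assumptions, not
# PlainSignal's documented CSV format.
EXPORT = """variant,visitors,conversions
control,10000,500
treatment,10000,570
"""


def load_counts(text):
    """Map each variant name to a (visitors, conversions) tuple."""
    reader = csv.DictReader(io.StringIO(text))
    return {row["variant"]: (int(row["visitors"]), int(row["conversions"])) for row in reader}


def diff_confidence_interval(n_a, conv_a, n_b, conv_b, level=0.95):
    """Wald confidence interval for the difference in conversion rates (B minus A)."""
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    # Unpooled standard error: the interval does not assume the rates are equal.
    se = sqrt(rate_a * (1 - rate_a) / n_a + rate_b * (1 - rate_b) / n_b)
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for a 95% interval
    diff = rate_b - rate_a
    return diff - z * se, diff + z * se


counts = load_counts(EXPORT)
low, high = diff_confidence_interval(*counts["control"], *counts["treatment"])
print(f"95% CI for the lift: {low:+.4f} to {high:+.4f}")
```

With these made-up numbers, the interval runs from roughly +0.08 to +1.32 percentage points. Because it excludes zero, it agrees with a significant result at alpha = 0.05. Its width also shows how uncertain the size of the lift still is, which matters when you judge practical significance.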

Best Practices and Pitfalls

Applying statistical significance correctly helps you avoid common mistakes such as p-hacking and misinterpreting results. Follow these practices to keep your analytics conclusions trustworthy.

- Avoid p-hacking

Do not repeatedly check results and stop a test as soon as it looks significant, because every extra look raises the false-positive rate. Predefine the test duration and sample size.

- Understand practical significance

Even significant results may have negligible effect sizes. Always assess whether the magnitude of change justifies implementation.

- Monitor data quality

Ensure your tracking setup (in GA4 or PlainSignal) is accurate and free of biases like bot traffic before running significance tests.
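You can check the warning against stopping tests early with a small simulation. This sketch runs hypothetical A/A tests, in which both variants share the same true conversion rate, so every significant result is a false positive. It compares a single check at the planned end with checking after every batch and stopping at the first p below alpha. The sketch uses a pooled two-proportion z-test, and the function names, batch size, number of looks, and conversion rate are arbitrary assumptions chosen for illustration.

```python
import random
from math import erfc, sqrt


def p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value of a pooled two-proportion z-test."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:  # no conversions (or all conversions) so far: nothing to test
        return 1.0
    z = (conv_b / n_b - conv_a / n_a) / se
    return erfc(abs(z) / sqrt(2))


def false_positive_rates(n_tests=1000, looks=10, batch=100, rate=0.1, alpha=0.05, seed=1):
    """Simulate A/A tests; return (rate when peeking, rate with one final check)."""
    rng = random.Random(seed)
    peeking_hits = fixed_hits = 0
    for _ in range(n_tests):
        conv_a = conv_b = n = 0
        flagged = False
        for _ in range(looks):
            # Add one batch of visitors to each variant; both convert at the same rate.
            conv_a += sum(rng.random() < rate for _ in range(batch))
            conv_b += sum(rng.random() < rate for _ in range(batch))
            n += batch
            p = p_value(conv_a, n, conv_b, n)
            # A peeker would stop and declare a winner the first time this is true.
            flagged = flagged or p < alpha
        peeking_hits += flagged
        fixed_hits += p < alpha  # p from the final, pre-planned look only
    return peeking_hits / n_tests, fixed_hits / n_tests


peeking, fixed = false_positive_rates()
print(f"false positives with peeking: {peeking:.1%}, fixed horizon: {fixed:.1%}")
```

The fixed-horizon rate should land near the nominal 5%, while checking after every batch pushes the false-positive rate well above it. The exact figures depend on the seed and the chosen settings.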