Published on 2025-06-27T21:40:33Z

What is the Data Lifecycle? Examples and Stages

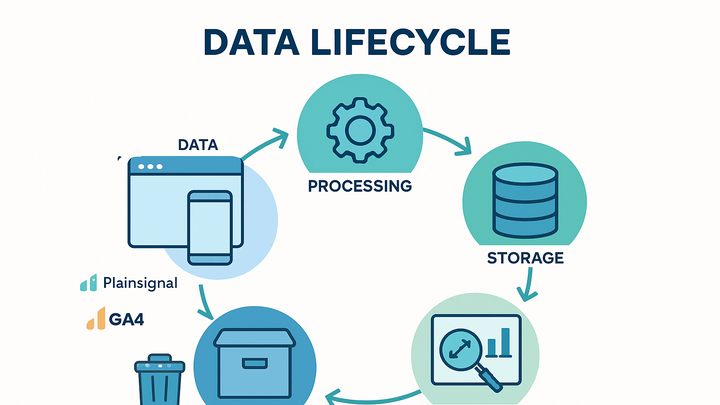

Data Lifecycle refers to the series of stages data passes through, from initial capture to final disposal. In analytics, it is commonly divided into six phases: Collection, Processing, Storage, Analysis, Visualization, and Archiving & Deletion. Managing the lifecycle deliberately improves data quality, helps organizations comply with regulations such as GDPR and CCPA, controls storage costs, and builds trust in the resulting insights. Tools such as PlainSignal, a lightweight, cookie-free analytics platform, and Google Analytics 4 (GA4) show how modern products can streamline several of these stages. This article walks through each phase, gives SaaS implementation examples with code snippets, and covers best practices, common challenges, and future trends, including how privacy-first analytics can help protect user data at every step.

Key Stages of the Data Lifecycle

The Data Lifecycle in analytics progresses through a series of structured stages that transform raw user interactions into actionable insights and, eventually, into archived or deleted records. Each stage serves a specific purpose and requires tailored tools and practices to ensure data reliability, privacy, and efficiency.

Data collection

This stage captures raw digital interactions, events, and user behaviors from websites, mobile apps, and other touchpoints. It lays the foundation for all subsequent analysis by ensuring comprehensive and accurate data capture.

PlainSignal (cookie-free analytics)

PlainSignal provides simple, privacy-first tracking without cookies. Implement it by adding this snippet:

<link rel='preconnect' href='//eu.plainsignal.com/' crossorigin />
<script defer data-do='yourwebsitedomain.com' data-id='0GQV1xmtzQQ' data-api='//eu.plainsignal.com' src='//cdn.plainsignal.com/plainsignal-min.js'></script>

Google Analytics 4 (GA4)

GA4 is Google’s current analytics platform; it unifies web and app measurement in a single event-based model. Initialize it by adding the Google tag (gtag.js):

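<!-- Replace GA_MEASUREMENT_ID with your GA4 measurement ID (it starts with "G-") -->
<script async src='https://www.googletagmanager.com/gtag/js?id=GA_MEASUREMENT_ID'></script>
<script>
  // Queue commands in dataLayer until gtag.js has loaded
  window.dataLayer = window.dataLayer || [];
  function gtag(){dataLayer.push(arguments);}
  gtag('js', new Date());
  gtag('config', 'GA_MEASUREMENT_ID');
</script>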

Data processing

Raw data is cleansed, transformed, and enriched to prepare it for storage and analysis. Common tasks include deduplication, normalization, and applying business logic.
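The three tasks named above can be sketched in a few lines of JavaScript. This is a minimal, hypothetical example: the event shape (`id`, `name`, `url`, `ts`) and the rule that excludes internal hosts are illustrative assumptions, not part of any particular tool.

```javascript
// Sketch of a batch cleansing step. The event shape { id, name, url, ts } and
// the "internal hosts" rule are hypothetical examples.

// Normalize one raw event: trim and lowercase the name, keep only the URL's
// host and path (dropping query strings and trailing slashes), and coerce the
// timestamp to a number.
function normalizeEvent(raw) {
  const url = new URL(raw.url);
  return {
    id: String(raw.id),
    name: String(raw.name).trim().toLowerCase(),
    host: url.hostname.toLowerCase(),
    path: url.pathname.replace(/\/+$/, "") || "/",
    ts: Number(raw.ts),
  };
}

// Deduplicate: keep the first occurrence of each event id.
function dedupeById(events) {
  const seen = new Set();
  return events.filter((e) => {
    if (seen.has(e.id)) return false;
    seen.add(e.id);
    return true;
  });
}

// Business logic (hypothetical rule): exclude traffic from internal hosts.
function applyBusinessRules(events, internalHosts) {
  return events.filter((e) => !internalHosts.has(e.host));
}

function processBatch(rawEvents, internalHosts = new Set()) {
  return applyBusinessRules(dedupeById(rawEvents.map(normalizeEvent)), internalHosts);
}

const raw = [
  { id: 1, name: " PageView ", url: "https://Example.com/pricing/?utm_source=x", ts: "1719500000000" },
  { id: 1, name: "pageview", url: "https://example.com/pricing", ts: 1719500000000 }, // duplicate id
  { id: 2, name: "SignUp", url: "https://staging.example.com/", ts: 1719500005000 },
];

const clean = processBatch(raw, new Set(["staging.example.com"]));
console.log(clean);
```

Normalizing before deduplicating matters here: the two copies of event `1` only collapse cleanly because their ids are coerced to the same string first.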

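ETL/ELT pipelines

Automate transformations with extract-transform-load (ETL) or extract-load-transform (ELT) tooling. ETL transforms data before loading it into storage, while ELT loads raw data first and transforms it inside the warehouse. Services such as Fivetran or AWS Glue handle extraction and loading (Glue also runs transformations), and orchestrators such as Apache Airflow schedule and coordinate the pipeline steps.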

Real-time stream processing

Stream events through platforms such as Apache Kafka, Amazon Kinesis, or Google Cloud Pub/Sub, and process them with a stream processor (for example, Kafka Streams or Apache Flink) to clean and enrich data in near real time.
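To make near-real-time processing concrete, here is a minimal sketch of a tumbling-window count, a common stream-processing pattern. The broker is replaced by an in-memory array, the `TumblingWindowCounter` class is hypothetical, and it assumes events arrive in timestamp order; production stream processors also handle late and out-of-order events.

```javascript
// Sketch of tumbling-window counting, the kind of aggregation a consumer of
// Kafka, Kinesis, or Pub/Sub might run. Event shape { name, ts } is hypothetical.

class TumblingWindowCounter {
  constructor(windowMs, onWindowClosed) {
    this.windowMs = windowMs;
    this.onWindowClosed = onWindowClosed; // callback(windowStart, countsByName)
    this.currentStart = null;
    this.counts = new Map();
  }

  // Assumes events arrive in timestamp order (no late-data handling).
  ingest(event) {
    const start = Math.floor(event.ts / this.windowMs) * this.windowMs;
    if (this.currentStart !== null && start !== this.currentStart) {
      this.flush(); // the event belongs to a new window, so close the old one
    }
    this.currentStart = start;
    this.counts.set(event.name, (this.counts.get(event.name) || 0) + 1);
  }

  // Emit the open window, e.g. when the stream ends.
  flush() {
    if (this.currentStart === null) return;
    this.onWindowClosed(this.currentStart, Object.fromEntries(this.counts));
    this.currentStart = null;
    this.counts = new Map();
  }
}

const results = [];
const counter = new TumblingWindowCounter(60_000, (start, counts) => results.push({ start, counts }));

const stream = [
  { name: "page_view", ts: 0 },
  { name: "page_view", ts: 15_000 },
  { name: "sign_up", ts: 59_999 },
  { name: "page_view", ts: 60_000 },
];
for (const event of stream) counter.ingest(event);
counter.flush();
console.log(results);
```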

Data storage

Processed data is persisted in structured or unstructured repositories based on access patterns and query requirements. Common storage solutions range from relational databases to data warehouses and lakes.

Data warehouses

Centralized repositories optimized for analytical queries, such as Amazon Redshift, Google BigQuery, or Snowflake.

Data lakes

Schema-on-read storage for vast volumes of raw, semi-structured, or unstructured data on platforms such as Amazon S3 or Azure Data Lake Storage; structure is applied when the data is queried rather than when it is written.
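The difference between schema-on-write (warehouses) and schema-on-read (lakes) is easiest to see in code. In this sketch, raw JSON Lines text stands in for an object in a data lake, and the field-converter schema format is a hypothetical assumption; each reader decides which fields to extract and how to type them at query time.

```javascript
// Sketch of schema-on-read. The raw text is stored untouched; the schema format
// (field name -> converter function) is a hypothetical example.

const rawObject = [
  '{"event":"page_view","ts":1719500000000,"path":"/pricing"}',
  '{"event":"sign_up","ts":"1719500005000","plan":"pro"}',
  "not valid json",
].join("\n");

// One reader's view of the data; another reader could apply a different schema
// to the same raw bytes.
const pageViewSchema = {
  event: (v) => String(v),
  ts: (v) => Number(v),
  path: (v) => (v === undefined ? null : String(v)),
};

function readWithSchema(text, schema) {
  const rows = [];
  for (const line of text.split("\n")) {
    let record;
    try {
      record = JSON.parse(line);
    } catch {
      continue; // skip malformed lines instead of failing the whole read
    }
    const row = {};
    for (const [field, convert] of Object.entries(schema)) {
      row[field] = convert(record[field]);
    }
    rows.push(row);
  }
  return rows;
}

console.log(readWithSchema(rawObject, pageViewSchema));
```

Note that the stray `plan` field and the malformed line never caused a write to fail; the trade-off is that every reader has to handle such irregularities itself.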

Data analysis

At this stage, analysts and data scientists run queries, statistical models, and machine-learning algorithms to identify patterns, correlations, and predictions.

SQL querying

Perform exploratory analysis and aggregations using SQL engines within your data warehouse or lakehouse.

Machine learning

Build, train, and deploy predictive models and advanced analytics using platforms like TensorFlow, PyTorch, or integrated ML services.

Data visualization

Insights are communicated through dashboards, reports, and interactive visualizations to drive decision-making across teams and stakeholders.

Dashboards

Use BI tools like Looker, Tableau, or Power BI to create real-time, interactive dashboards.

Reporting

Generate scheduled reports and share key metrics via email or embedded portals.

Data archiving & deletion

Organizations implement policies to archive infrequently accessed data and securely delete obsolete records in compliance with data retention regulations.

Archiving

Transition cold data to low-cost, long-term storage such as Amazon S3 Glacier or the Archive storage class in Google Cloud Storage.
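The decision behind archiving can be sketched as an age-based rule. The tier names, the 30- and 180-day thresholds, and the `lastAccessedMs` field are hypothetical. In practice, built-in features such as Amazon S3 Lifecycle configurations or Google Cloud Storage Object Lifecycle Management apply transitions declaratively; this code only illustrates the logic.

```javascript
// Sketch of age-based tiering. Tier names and thresholds are hypothetical,
// not defaults of any storage provider.

const DAY_MS = 24 * 60 * 60 * 1000;

// Ordered from youngest to oldest; the first rule whose limit is not exceeded wins.
const TIER_RULES = [
  { tier: "hot", maxAgeDays: 30 },
  { tier: "cold", maxAgeDays: 180 },
  { tier: "archive", maxAgeDays: Infinity },
];

function tierFor(lastAccessedMs, nowMs, rules = TIER_RULES) {
  const ageDays = (nowMs - lastAccessedMs) / DAY_MS;
  return rules.find((r) => ageDays <= r.maxAgeDays).tier;
}

// Return only the objects whose target tier differs from their current tier.
function planTransitions(objects, nowMs) {
  return objects
    .map((o) => ({ key: o.key, from: o.tier, to: tierFor(o.lastAccessedMs, nowMs) }))
    .filter((t) => t.from !== t.to);
}

const now = Date.UTC(2025, 5, 27);
const objects = [
  { key: "events/2025-06-20.jsonl", tier: "hot", lastAccessedMs: now - 7 * DAY_MS },
  { key: "events/2025-03-01.jsonl", tier: "hot", lastAccessedMs: now - 90 * DAY_MS },
  { key: "events/2024-01-01.jsonl", tier: "cold", lastAccessedMs: now - 400 * DAY_MS },
];
console.log(planTransitions(objects, now));
```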

Deletion policies

Define and enforce retention schedules to automatically purge data in line with GDPR, CCPA, and internal governance requirements.
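A retention schedule boils down to a maximum age per data category. The categories and periods below are hypothetical placeholders: GDPR and CCPA do not prescribe a single retention period, so actual values come from your own legal and governance review.

```javascript
// Sketch of retention enforcement. Categories and periods are hypothetical.

const DAY_MS = 24 * 60 * 60 * 1000;

const RETENTION_DAYS = {
  raw_events: 30,
  aggregated_metrics: 730,
};

// Split records into those to keep and those past their retention period.
function applyRetention(records, nowMs, schedule = RETENTION_DAYS) {
  const keep = [];
  const purge = [];
  for (const record of records) {
    const days = schedule[record.category];
    if (days === undefined) {
      // No period configured: keep it here; a real system should flag it for review.
      keep.push(record);
    } else if (nowMs - record.createdMs > days * DAY_MS) {
      purge.push(record);
    } else {
      keep.push(record);
    }
  }
  return { keep, purge };
}

const now = Date.UTC(2025, 5, 27);
const { keep, purge } = applyRetention(
  [
    { id: "a", category: "raw_events", createdMs: now - 45 * DAY_MS },
    { id: "b", category: "raw_events", createdMs: now - 5 * DAY_MS },
    { id: "c", category: "aggregated_metrics", createdMs: now - 45 * DAY_MS },
  ],
  now,
);
console.log(purge.map((r) => r.id));
```

Run on a schedule, the `purge` list would then drive deletions in every system holding a copy of those records.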

Why Data Lifecycle Matters in Analytics

Managing the Data Lifecycle ensures that organizations maintain high data quality, comply with regulations, control costs, and derive reliable insights. By understanding each phase, teams can implement targeted optimizations and governance.

Data quality and consistency

A structured lifecycle establishes validation checks, transformations, and governance rules to minimize errors and discrepancies in datasets.
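Validation checks are often expressed as a list of rules applied to every record, with failing records quarantined instead of silently loaded. The fields and rules in this sketch are hypothetical examples.

```javascript
// Sketch of record-level validation. Fields and rules are hypothetical.

const RULES = [
  { field: "event", check: (v) => typeof v === "string" && v.length > 0, message: "must be a non-empty string" },
  { field: "ts", check: (v) => Number.isFinite(v) && v > 0, message: "must be a positive number" },
  { field: "currency", check: (v) => v === undefined || /^[A-Z]{3}$/.test(v), message: "must be three uppercase letters (ISO 4217 style)" },
];

// Return human-readable errors; an empty list means the record passed.
function validate(record, rules = RULES) {
  return rules
    .filter((r) => !r.check(record[r.field]))
    .map((r) => `${r.field} ${r.message}`);
}

// Split a batch into records to load and records to quarantine with reasons.
function partition(records) {
  const valid = [];
  const quarantined = [];
  for (const record of records) {
    const errors = validate(record);
    if (errors.length === 0) valid.push(record);
    else quarantined.push({ record, errors });
  }
  return { valid, quarantined };
}

const { valid, quarantined } = partition([
  { event: "purchase", ts: 1719500000000, currency: "EUR" },
  { event: "", ts: -1, currency: "euro" },
]);
console.log(valid.length, quarantined[0].errors);
```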

Regulatory compliance

Lifecycle policies for data retention, archiving, and deletion help organizations meet GDPR, CCPA, and other legal requirements.

Cost efficiency

Tiered storage and archiving reduce infrastructure costs by keeping frequently used data on fast storage and moving rarely accessed data to cheaper tiers.

Implementing Data Lifecycle Management with SaaS Tools

SaaS analytics platforms can automate and streamline various stages of the Data Lifecycle. Below are the stages PlainSignal and GA4 support and the features to consider for each.

PlainSignal for privacy-first data collection

PlainSignal specializes in the initial data collection stage, offering a lightweight, cookie-free script that prioritizes user privacy and requires minimal configuration.

Supported stage

Data Collection

Key features

Cookie-free tracking, real-time insights, GDPR compliance

Google Analytics 4 for end-to-end analytics

GA4 provides comprehensive lifecycle support from data ingestion to analysis and visualization. Its native integration with BigQuery enables advanced querying and machine learning workflows.

Supported stages

Data Collection, Processing, Storage, Analysis, Visualization

Integration highlights

BigQuery export (daily or streaming, once the property is linked), cross-platform measurement, event-based data model

Best Practices and Common Challenges

Adopting best practices and anticipating challenges helps ensure a robust Data Lifecycle. Key considerations revolve around governance, automation, and privacy.

Implement robust data governance

Define clear policies, roles, and workflows for data ownership, quality standards, and access controls across the lifecycle.

Data policies

Document data definitions, retention rules, and access permissions.

Stewardship roles

Assign data owners and custodians to maintain accountability.

Automate data workflows

Leverage ETL/ELT orchestration, scheduling, and monitoring to reduce manual errors and improve efficiency.

Workflow orchestration

Use platforms like Apache Airflow or Prefect to manage data pipelines.
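At their core, orchestrators such as Airflow and Prefect run tasks in an order that respects declared dependencies, which is a topological sort of a directed acyclic graph (DAG). The sketch below shows only that idea; it is not the API of either tool, the task names are hypothetical, and real orchestrators add scheduling, retries, and parallel execution on top.

```javascript
// Sketch of dependency-ordered task execution (Kahn's topological sort).
// Not the Airflow or Prefect API; task names are hypothetical.

// tasks: { name: { deps: [names], run: () => void } }
function topologicalOrder(tasks) {
  const indegree = new Map();
  const dependents = new Map();
  for (const name of Object.keys(tasks)) {
    indegree.set(name, 0);
    dependents.set(name, []);
  }
  for (const [name, { deps }] of Object.entries(tasks)) {
    for (const dep of deps) {
      if (!tasks[dep]) throw new Error(`${name} depends on unknown task ${dep}`);
      indegree.set(name, indegree.get(name) + 1);
      dependents.get(dep).push(name);
    }
  }
  // Start with tasks that have no unmet dependencies.
  const ready = [...indegree].filter(([, d]) => d === 0).map(([n]) => n);
  const order = [];
  while (ready.length > 0) {
    const name = ready.shift();
    order.push(name);
    for (const next of dependents.get(name)) {
      indegree.set(next, indegree.get(next) - 1);
      if (indegree.get(next) === 0) ready.push(next);
    }
  }
  // Any task never reaching indegree 0 sits on a cycle.
  if (order.length !== indegree.size) throw new Error("cycle detected: not a DAG");
  return order;
}

function runPipeline(tasks) {
  for (const name of topologicalOrder(tasks)) tasks[name].run();
}

const log = [];
const pipeline = {
  load_warehouse: { deps: ["transform"], run: () => log.push("load_warehouse") },
  extract_events: { deps: [], run: () => log.push("extract_events") },
  transform: { deps: ["extract_events", "extract_crm"], run: () => log.push("transform") },
  extract_crm: { deps: [], run: () => log.push("extract_crm") },
};
runPipeline(pipeline);
console.log(log);
```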

Monitoring and alerting

Set up alerts for pipeline failures, latency issues, and data quality thresholds.
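Here is a minimal sketch of threshold-based data quality alerts run after a load. The metrics (row count, null rate of `user_id`, freshness), the threshold values, and the `notify` function are hypothetical; a real setup would route alerts to an on-call or chat system rather than stderr.

```javascript
// Sketch of data quality threshold checks. Metric names, thresholds, and
// notify() are hypothetical.

const THRESHOLDS = {
  minRowCount: 1000,       // fewer rows suggests a broken upstream source
  maxNullRate: 0.05,       // at most 5% of rows may be missing user_id
  maxFreshnessMinutes: 60, // newest row must be at most an hour old
};

function computeMetrics(rows, nowMs) {
  const nulls = rows.filter((r) => r.user_id == null).length;
  const newest = rows.reduce((max, r) => Math.max(max, r.ts), -Infinity);
  return {
    rowCount: rows.length,
    nullRate: rows.length === 0 ? 1 : nulls / rows.length,
    freshnessMinutes: (nowMs - newest) / 60000, // Infinity when there are no rows
  };
}

function checkThresholds(metrics, t = THRESHOLDS) {
  const alerts = [];
  if (metrics.rowCount < t.minRowCount) alerts.push(`row count ${metrics.rowCount} < ${t.minRowCount}`);
  if (metrics.nullRate > t.maxNullRate) alerts.push(`user_id null rate ${metrics.nullRate.toFixed(3)} > ${t.maxNullRate}`);
  if (metrics.freshnessMinutes > t.maxFreshnessMinutes) alerts.push(`data is ${Math.round(metrics.freshnessMinutes)} min old`);
  return alerts;
}

function notify(alerts) {
  for (const a of alerts) console.error(`[data-quality] ${a}`);
}

const now = Date.UTC(2025, 5, 27, 12, 0);
const rows = Array.from({ length: 1200 }, (_, i) => ({
  user_id: i % 10 === 0 ? null : `u${i}`, // every tenth row lacks a user_id
  ts: now - 90 * 60000,                    // all rows are 90 minutes old
}));
const alerts = checkThresholds(computeMetrics(rows, now));
notify(alerts);
```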

Ensure privacy and compliance

Incorporate anonymization, consent management, and policy-driven retention to protect user data and meet regulatory standards.

Anonymization techniques

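Apply aggregation to remove personally identifiable information (PII), or hashing and tokenization to obscure it. Note that hashed or tokenized data is generally still pseudonymous personal data under GDPR, not anonymous data.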
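The three techniques can be sketched with Node's built-in `crypto` module. Assumptions: the `PII_HASH_KEY` environment variable and its fallback value are hypothetical, the in-memory maps stand in for a separate, access-controlled token vault, and `minGroupSize` is an illustrative policy value. A keyed hash (HMAC) is used instead of a plain hash because plain hashes of emails or phone numbers can be reversed by hashing guessed values.

```javascript
const crypto = require("crypto");

// Hypothetical environment variable; never hard-code a real key.
const HASH_KEY = process.env.PII_HASH_KEY || "dev-only-secret";

// 1. Keyed hashing (HMAC-SHA-256). Normalizing first maps "Ana@Example.com" and
//    "ana@example.com " to the same value, so joins still work; without the key,
//    an attacker cannot recompute hashes for guessed emails.
function hashPII(value) {
  return crypto.createHmac("sha256", HASH_KEY).update(value.trim().toLowerCase()).digest("hex");
}

// 2. Tokenization: swap the value for a random token and keep the mapping in a
//    separate store (two in-memory Maps stand in for it here).
const tokenByValue = new Map();
const valueByToken = new Map();
function tokenize(value) {
  if (!tokenByValue.has(value)) {
    const token = `tok_${crypto.randomUUID()}`;
    tokenByValue.set(value, token);
    valueByToken.set(token, value);
  }
  return tokenByValue.get(value);
}
function detokenize(token) {
  return valueByToken.get(token);
}

// 3. Aggregation: keep only counts per group and suppress small groups, since
//    very small counts can still point to individuals.
function countByCountry(events, minGroupSize = 2) {
  const counts = {};
  for (const e of events) counts[e.country] = (counts[e.country] || 0) + 1;
  return Object.fromEntries(Object.entries(counts).filter(([, n]) => n >= minGroupSize));
}

const events = [
  { email: "Ana@Example.com", country: "PT" },
  { email: "ana@example.com ", country: "PT" },
  { email: "bo@example.com", country: "SE" },
];
console.log(hashPII(events[0].email) === hashPII(events[1].email)); // true
console.log(countByCountry(events));
```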

Consent management

Track and enforce user consent preferences throughout data collection and processing.
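Consent enforcement can be sketched as a check every event must pass before it is stored or processed. The purposes (`analytics`, `marketing`), the record shape, and the in-memory store are hypothetical. The default-deny behavior fits opt-in consent regimes such as GDPR; an opt-out regime such as CCPA may call for a different default.

```javascript
// Sketch of consent enforcement. Purposes, record shape, and the in-memory
// store are hypothetical; a real system would persist consent with an audit trail.

const consentStore = new Map(); // userId -> { analytics, marketing, updatedMs }

// Record or update a user's choices; later calls overwrite earlier ones,
// which is how a withdrawal of consent takes effect.
function recordConsent(userId, choices, nowMs = Date.now()) {
  consentStore.set(userId, { ...choices, updatedMs: nowMs });
}

// Default-deny: a purpose is allowed only if consent for it is explicitly true.
function isAllowed(userId, purpose) {
  const consent = consentStore.get(userId);
  return Boolean(consent) && consent[purpose] === true;
}

function filterByConsent(events) {
  return events.filter((e) => isAllowed(e.userId, e.purpose));
}

recordConsent("u1", { analytics: true, marketing: false });
const kept = filterByConsent([
  { userId: "u1", purpose: "analytics", name: "page_view" },
  { userId: "u1", purpose: "marketing", name: "ad_click" },
  { userId: "u2", purpose: "analytics", name: "page_view" }, // no consent on record
]);
console.log(kept.map((e) => e.name));
```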

Future Trends in Data Lifecycle Management

Emerging technologies and evolving regulations are shaping the next generation of Data Lifecycle solutions. Staying informed on these trends can give organizations a competitive edge.

Real-time and streaming analytics

Demand for instant insights is driving adoption of stream-processing architectures and event-driven pipelines.

Streaming platforms

Platforms like Apache Kafka, Amazon Kinesis, and Google Cloud Pub/Sub enable continuous data flows.

AI-driven lifecycle automation

Machine learning models automate tasks such as anomaly detection, data classification, and lifecycle optimizations.

Intelligent classification

Use AI to automatically tag and categorize incoming data for targeted workflows.

Privacy-first data architectures

Design systems that minimize data collection, enforce anonymization by default, and give users granular control over their information.
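Minimization by default can be as simple as an allowlist applied at the point of collection, plus coarsening of fields that could identify a person. The field names, truncating IPv4 addresses to the last octet, and hour-level timestamps are illustrative assumptions, not a standard.

```javascript
// Sketch of data minimization at collection time. Field names and coarsening
// choices are hypothetical.

const ALLOWED_FIELDS = ["event", "path", "country", "ts", "ip"];
const HOUR_MS = 60 * 60 * 1000;

// Zero the last octet of an IPv4 address; anything else is dropped rather than guessed.
function truncateIPv4(ip) {
  const parts = ip.split(".");
  if (parts.length !== 4) return null;
  parts[3] = "0";
  return parts.join(".");
}

function minimize(rawEvent) {
  const out = {};
  // Copy only allowlisted fields; everything else is never stored.
  for (const field of ALLOWED_FIELDS) {
    if (rawEvent[field] !== undefined) out[field] = rawEvent[field];
  }
  if (out.ip !== undefined) out.ip = truncateIPv4(out.ip);
  if (out.ts !== undefined) out.ts = Math.floor(out.ts / HOUR_MS) * HOUR_MS; // hour precision
  return out;
}

const minimized = minimize({
  event: "page_view",
  path: "/pricing",
  country: "DE",
  ts: Date.UTC(2025, 5, 27, 21, 40, 33),
  ip: "203.0.113.57",
  email: "someone@example.com",
  userAgent: "Mozilla/5.0",
});
console.log(minimized);
```

An allowlist is chosen over a blocklist so that new fields added upstream are excluded until someone deliberately decides to collect them.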