Published on 2025-06-26T04:22:32Z

What is Data Preprocessing? Examples with PlainSignal and GA4

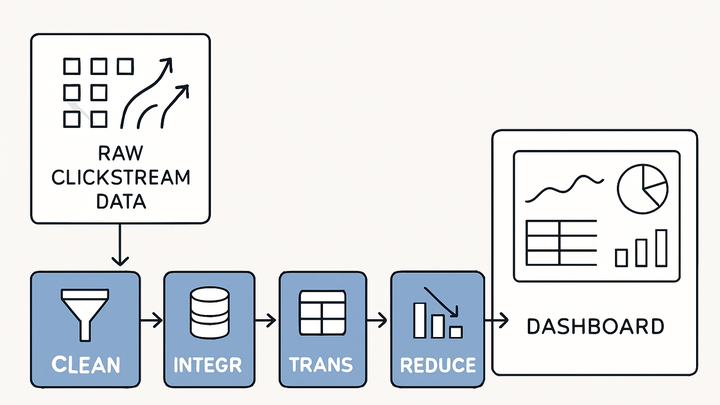

Data preprocessing in analytics is the systematic process of transforming raw tracking data into a clean, structured format suitable for analysis. It involves tasks such as correcting or removing erroneous entries, handling missing values, standardizing data formats, integrating multiple data sources, and reducing dimensionality. Effective preprocessing helps analytics platforms produce accurate insights, reduces bias, and improves performance. In tools like PlainSignal and GA4, preprocessing can happen both at collection (e.g., real-time validation of incoming events) and at the reporting stage (e.g., filters and segments). A solid preprocessing pipeline is the foundation for reliable decisions based on analytical outputs.

Data preprocessing

Transforming raw analytics data into clean, structured information for accurate analysis and insights.

Why Data Preprocessing Matters

Raw data collected from websites often contains noise, duplicates, missing values, and inconsistent formats. Without preprocessing, your analysis may yield misleading or incomplete insights. Preprocessing improves data quality, consistency, and reliability, which enables accurate trend detection and better decision-making. It also reduces storage needs and speeds up queries by eliminating unnecessary or redundant data.

Improve data quality

Preprocessing removes or corrects problems such as duplicate entries, extreme outliers, and invalid values so that the dataset reflects real user behavior.

Identify and remove duplicates

Detect repeated pageviews or events and eliminate them to prevent skewed metrics.

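A minimal sketch of duplicate removal, assuming each event is a plain object with hypothetical `userId`, `name`, `path`, and `timestamp` (milliseconds) fields. Here two events count as duplicates when they share user, event name, and path and arrive within a short window; the 2-second default is an arbitrary choice meant to catch double-fired tags, not a rule of any platform.

```javascript
// Drop events that repeat the same (userId, name, path) within windowMs
// of the last kept occurrence. Field names and the 2-second default
// window are illustrative assumptions.
function dedupeEvents(events, windowMs = 2000) {
  // Sort by time so "the previous occurrence" is well defined.
  const sorted = [...events].sort((a, b) => a.timestamp - b.timestamp);
  const lastKept = new Map(); // key -> timestamp of the last kept event
  const kept = [];
  for (const event of sorted) {
    const key = `${event.userId}|${event.name}|${event.path}`;
    const previous = lastKept.get(key);
    if (previous !== undefined && event.timestamp - previous < windowMs) {
      continue; // too close to the last kept copy: treat as a duplicate
    }
    lastKept.set(key, event.timestamp);
    kept.push(event);
  }
  return kept;
}

const rawPageviews = [
  { userId: "u1", name: "page_view", path: "/pricing", timestamp: 0 },
  { userId: "u1", name: "page_view", path: "/pricing", timestamp: 500 }, // double-fired tag
  { userId: "u1", name: "page_view", path: "/pricing", timestamp: 60000 }, // genuine revisit
];
console.log(dedupeEvents(rawPageviews).length); // 2
```

Choosing the key is the main design decision: a key that is too coarse merges distinct actions, while one that is too fine lets duplicates through.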

Handle outliers

Detect extreme values that distort analyses and decide whether to cap, transform, or exclude them.

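One common rule for spotting extreme values is Tukey's fences: anything more than 1.5 interquartile ranges (IQR) below the first quartile or above the third quartile is flagged. The sketch below caps such values at the fences (winsorizing) instead of dropping them; the multiplier `k`, the linear-interpolation quartile method, and the sample session durations are illustrative choices.

```javascript
// Linear-interpolated quantile of an ascending-sorted array (0 <= q <= 1).
function quantile(sorted, q) {
  const pos = (sorted.length - 1) * q;
  const lo = Math.floor(pos);
  const hi = Math.ceil(pos);
  return sorted[lo] + (sorted[hi] - sorted[lo]) * (pos - lo);
}

// Cap values outside Tukey's fences [Q1 - k*IQR, Q3 + k*IQR].
function capOutliers(values, k = 1.5) {
  if (values.length === 0) return [];
  const sorted = [...values].sort((a, b) => a - b);
  const q1 = quantile(sorted, 0.25);
  const q3 = quantile(sorted, 0.75);
  const iqr = q3 - q1;
  const lower = q1 - k * iqr;
  const upper = q3 + k * iqr;
  return values.map((v) => Math.min(Math.max(v, lower), upper));
}

// Hypothetical session durations in seconds; 400 is far above the rest.
console.log(capOutliers([10, 12, 11, 13, 12, 11, 400]).join(", "));
// 10, 12, 11, 13, 12, 11, 14.75
```

To exclude outliers instead, filter on the same bounds. Which option fits depends on whether the extreme values are errors (for example, a tab left open overnight) or real behavior.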

Enhance analytical accuracy

Standardizing formats and definitions ensures meaningful comparisons across time periods and user segments.

Standardize formats

Convert dates, currency, and numeric values into consistent formats.

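As a sketch of format standardization, the helpers below convert two hypothetical input formats into one canonical form each: dates arrive either as epoch milliseconds or as `DD/MM/YYYY` strings and leave as ISO 8601 `YYYY-MM-DD` (UTC), and prices arrive as strings such as `"1,234.50"` and leave as integer cents. Converting between currencies would additionally need exchange rates, which this sketch does not cover.

```javascript
// Normalize a date given as epoch milliseconds or as a "DD/MM/YYYY" string
// (an assumed legacy export format) to "YYYY-MM-DD" in UTC.
// Note: the string branch does not check that the day exists in the month.
function toIsoDate(value) {
  if (typeof value === "number") {
    return new Date(value).toISOString().slice(0, 10);
  }
  const match = /^(\d{2})\/(\d{2})\/(\d{4})$/.exec(String(value).trim());
  if (!match) throw new Error(`Unrecognized date: ${value}`);
  const [, day, month, year] = match;
  return `${year}-${month}-${day}`;
}

// Normalize a price string with optional thousands separators to integer
// cents, so that sums do not accumulate floating-point error.
function toCents(value) {
  const cleaned = String(value).replace(/,/g, "").trim();
  if (!/^-?\d+(\.\d{1,2})?$/.test(cleaned)) {
    throw new Error(`Unrecognized amount: ${value}`);
  }
  return Math.round(Number(cleaned) * 100);
}

console.log(toIsoDate("26/06/2025")); // 2025-06-26
console.log(toIsoDate(0)); // 1970-01-01
console.log(toCents("1,234.50")); // 123450
```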

Define metrics consistently

Ensure that metrics like “sessions” or “events” are measured the same way across all data sources.

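One way to keep a metric like "sessions" consistent is to recompute it from raw events with a single shared rule rather than relying on each source's own count. The sketch below assumes a 30-minute inactivity timeout (a common default) and hypothetical `userId`/`timestamp` fields; it does not reproduce the exact session logic of PlainSignal, GA4, or any other platform.

```javascript
// Count sessions under one shared rule: a user's first event opens a
// session, and any gap longer than timeoutMs between consecutive events
// opens a new one. The 30-minute default is an assumption.
function countSessions(events, timeoutMs = 30 * 60 * 1000) {
  const timesByUser = new Map();
  for (const { userId, timestamp } of events) {
    if (!timesByUser.has(userId)) timesByUser.set(userId, []);
    timesByUser.get(userId).push(timestamp);
  }
  let sessions = 0;
  for (const times of timesByUser.values()) {
    times.sort((a, b) => a - b);
    sessions += 1; // the first event always starts a session
    for (let i = 1; i < times.length; i++) {
      if (times[i] - times[i - 1] > timeoutMs) sessions += 1;
    }
  }
  return sessions;
}

const minute = 60 * 1000;
console.log(countSessions([
  { userId: "u1", timestamp: 0 },
  { userId: "u1", timestamp: 10 * minute },
  { userId: "u1", timestamp: 50 * minute }, // 40-minute gap: new session
  { userId: "u2", timestamp: 5 * minute },
])); // 3
```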

Key Steps in the Data Preprocessing Pipeline

Data preprocessing typically follows a structured sequence of steps to transform raw inputs into analysis-ready datasets. Depending on your objectives and the complexity of your sources, you may adapt or reorder these steps.

Data cleaning

Remove or correct corrupt, inaccurate, or irrelevant parts of the data.

Missing value treatment

Impute missing fields (e.g., default values) or remove incomplete records for consistency.

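A minimal sketch of both options described above, assuming records are plain objects: records missing a required field are dropped, and optional fields are filled with defaults. Which fields are required, what counts as "missing", and the `"(direct)"` placeholder for an empty referrer are all hypothetical policy choices.

```javascript
// Treat undefined, null, and empty strings as missing (an assumed policy).
function isMissing(value) {
  return value === undefined || value === null || value === "";
}

// Drop records lacking any required field; fill the rest with defaults.
// Input records are copied, not mutated.
function treatMissing(records, requiredFields, defaults) {
  return records
    .filter((record) => requiredFields.every((field) => !isMissing(record[field])))
    .map((record) => {
      const filled = { ...record };
      for (const [field, fallback] of Object.entries(defaults)) {
        if (isMissing(filled[field])) filled[field] = fallback;
      }
      return filled;
    });
}

const cleaned = treatMissing(
  [
    { userId: "u1", page: "/", referrer: null },
    { userId: "", page: "/about" }, // no user ID: dropped
    { userId: "u3", page: "/blog", referrer: "news.example.com" },
  ],
  ["userId", "page"],
  { referrer: "(direct)" },
);
console.log(cleaned.map((r) => r.referrer).join(", ")); // (direct), news.example.com
```

Dropping records can bias results when values are not missing at random, so it helps to log how many records each rule removes.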

Data validation

Verify that data conforms to expected ranges, types, and patterns.

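Validation rules can be expressed as a small schema that maps each field to a check. The schema below (lowercase snake_case event names up to 40 characters, a positive integer millisecond timestamp, an optional non-negative numeric value) is a hypothetical example, not the rule set of any particular platform.

```javascript
// Hypothetical schema: each field maps to a predicate it must satisfy.
const eventSchema = {
  name: (v) => typeof v === "string" && /^[a-z_]{1,40}$/.test(v),
  timestamp: (v) => Number.isInteger(v) && v > 0,
  value: (v) => v === undefined || (Number.isFinite(v) && v >= 0),
};

// Return the names of fields that fail their check (empty array = valid).
function validateEvent(event, schema) {
  return Object.entries(schema)
    .filter(([field, check]) => !check(event[field]))
    .map(([field]) => field);
}

console.log(validateEvent({ name: "purchase", timestamp: 1750911752000, value: 49.9 }, eventSchema).length); // 0
console.log(validateEvent({ name: "Purchase!", timestamp: -1 }, eventSchema).join(", ")); // name, timestamp
```

Invalid events can then be dropped, repaired, or routed to a separate store for inspection.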

Data integration

Combine data from multiple sources (e.g., website logs, CRM, ad platforms) into a unified dataset.

Source mapping

Align fields from different systems to a common schema.

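Source mapping is often just a lookup table from each system's field names to the common schema. The sketch assumes two hypothetical sources, `web` and `crm`, with made-up field names; unmapped fields are dropped, and value conversion (for example, parsing CRM date strings) is left to the standardization step.

```javascript
// Hypothetical per-source mappings: source field name -> common field name.
const fieldMaps = {
  web: { uid: "userId", ts: "timestamp", evt: "eventName" },
  crm: { contact_id: "userId", created_at: "timestamp", activity: "eventName" },
};

// Rename a record's fields into the common schema and tag its origin.
function toCommonSchema(record, source) {
  const mapping = fieldMaps[source];
  if (!mapping) throw new Error(`No field mapping for source: ${source}`);
  const result = { source };
  for (const [from, to] of Object.entries(mapping)) {
    if (from in record) result[to] = record[from];
  }
  return result;
}

const crmRow = toCommonSchema(
  { contact_id: "c-42", created_at: "2025-06-26", activity: "demo_request", owner: "sales" },
  "crm",
);
console.log(JSON.stringify(crmRow));
// {"source":"crm","userId":"c-42","timestamp":"2025-06-26","eventName":"demo_request"}
```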

Cross-source de-duplication

Detect and merge overlapping records across platforms.

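When several platforms report the same conversion, records can be merged on a shared key. The sketch assumes a hypothetical `orderId` present in every source and a priority list that decides whose field values win; real sources often lack a common key, in which case matching needs fuzzier rules (for example, a time window plus amount) that are not shown here.

```javascript
// Merge records describing the same order across platforms.
// `priority` lists sources from most to least trusted (hypothetical names).
function mergeAcrossSources(records, priority) {
  const rank = (source) => {
    const index = priority.indexOf(source);
    return index === -1 ? priority.length : index; // unknown sources rank last
  };
  const merged = new Map();
  for (const record of records) {
    // Normalize the key so "A-1" and " a-1" match.
    const key = String(record.orderId).trim().toLowerCase();
    const existing = merged.get(key);
    if (!existing) {
      merged.set(key, { ...record, sources: [record.source] });
      continue;
    }
    // In an object spread the later object wins, so the trusted record goes last.
    const combined = rank(record.source) < rank(existing.source)
      ? { ...existing, ...record }
      : { ...record, ...existing };
    combined.sources = [...existing.sources, record.source];
    merged.set(key, combined);
  }
  return [...merged.values()];
}

const conversions = mergeAcrossSources(
  [
    { orderId: "A-1", source: "ads", revenue: 50 },
    { orderId: " a-1", source: "web", revenue: 49.5 },
    { orderId: "B-2", source: "web", revenue: 20 },
  ],
  ["web", "ads"],
);
console.log(conversions.length, conversions[0].revenue); // 2 49.5
```

Keeping the `sources` list makes it possible to audit later how often platforms disagree.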

Data transformation

Convert data into appropriate formats, derive new features, and normalize values.

Normalization and scaling

Adjust numeric values to a common scale for comparability.

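Two common scaling methods, sketched for a plain array of numbers: min-max scaling maps values onto [0, 1], and z-score standardization centers them on the mean in units of standard deviation (the population standard deviation here). Mapping a constant column to zeros is an arbitrary choice to avoid dividing by zero.

```javascript
// Min-max scaling: (v - min) / (max - min), giving values in [0, 1].
// Spreading very large arrays into Math.min/max can exceed engine
// argument limits; a plain loop is safer for millions of rows.
function minMaxScale(values) {
  const min = Math.min(...values);
  const max = Math.max(...values);
  const range = max - min;
  return values.map((v) => (range === 0 ? 0 : (v - min) / range));
}

// Z-score standardization: (v - mean) / population standard deviation.
function zScore(values) {
  const n = values.length;
  const mean = values.reduce((sum, v) => sum + v, 0) / n;
  const variance = values.reduce((sum, v) => sum + (v - mean) ** 2, 0) / n;
  const sd = Math.sqrt(variance);
  return values.map((v) => (sd === 0 ? 0 : (v - mean) / sd));
}

console.log(minMaxScale([10, 20, 30]).join(", ")); // 0, 0.5, 1
console.log(zScore([2, 4, 4, 4, 5, 5, 7, 9]).join(", ")); // -1.5, -0.5, -0.5, -0.5, 0, 0, 1, 2
```

Min-max scaling is sensitive to outliers (one extreme value compresses everything else), which is one reason to handle outliers before scaling.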

Feature engineering

Create new variables (e.g., session duration buckets) to enhance analysis.

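Bucketing a continuous value is one of the simplest engineered features. The sketch derives a session-duration bucket from hypothetical `startMs`/`endMs` fields; the bucket boundaries are arbitrary and would normally be chosen from your own duration distribution.

```javascript
// Hypothetical buckets, sorted by min; each covers [min, next bucket's min) in seconds.
const DURATION_BUCKETS = [
  { label: "0-10s", min: 0 },
  { label: "10-60s", min: 10 },
  { label: "1-5min", min: 60 },
  { label: "5min+", min: 300 },
];

function durationBucket(seconds) {
  if (!(seconds >= 0)) throw new Error(`Invalid duration: ${seconds}`);
  let label = DURATION_BUCKETS[0].label;
  for (const bucket of DURATION_BUCKETS) {
    if (seconds >= bucket.min) label = bucket.label; // last bucket reached wins
  }
  return label;
}

// Add the derived feature to each session without mutating the input.
function addDurationBucket(sessions) {
  return sessions.map((s) => ({ ...s, durationBucket: durationBucket((s.endMs - s.startMs) / 1000) }));
}

console.log(addDurationBucket([{ startMs: 0, endMs: 95000 }])[0].durationBucket); // 1-5min
```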

Data reduction

Reduce data volume while preserving meaningful information.

Dimensionality reduction

Use techniques such as principal component analysis (PCA) to reduce the number of features while retaining most of the variance in the data.

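For intuition, the sketch below finds the first principal component by power iteration on the covariance matrix and projects each row onto it, reducing every row to a single feature. It is a hand-rolled illustration rather than production code (a numerical library would normally be used), and it assumes at least two rows and a start vector that is not orthogonal to the dominant eigenvector.

```javascript
// Project rows (equal-length arrays of numbers, at least two rows) onto
// their first principal component, found by power iteration.
function firstPrincipalComponent(rows, iterations = 100) {
  const n = rows.length;
  const d = rows[0].length;
  // Center each column on its mean.
  const means = Array.from({ length: d }, (_, j) => rows.reduce((s, r) => s + r[j], 0) / n);
  const centered = rows.map((r) => r.map((v, j) => v - means[j]));
  // Sample covariance matrix (d x d).
  const cov = Array.from({ length: d }, (_, a) =>
    Array.from({ length: d }, (_, b) => centered.reduce((s, r) => s + r[a] * r[b], 0) / (n - 1)),
  );
  const multiply = (v) => cov.map((row) => row.reduce((s, c, j) => s + c * v[j], 0));
  // Deterministic start vector [1, 2, ..., d], normalized (an arbitrary choice).
  let vec = Array.from({ length: d }, (_, j) => j + 1);
  const startNorm = Math.hypot(...vec);
  vec = vec.map((v) => v / startNorm);
  for (let it = 0; it < iterations; it++) {
    const next = multiply(vec);
    const norm = Math.hypot(...next);
    if (norm === 0) break; // no variance along this direction
    vec = next.map((v) => v / norm);
  }
  // Variance along vec (its eigenvalue) as a share of total variance (trace).
  const captured = multiply(vec).reduce((s, v, j) => s + v * vec[j], 0);
  const total = cov.reduce((s, row, j) => s + row[j], 0);
  const scores = centered.map((r) => r.reduce((s, v, j) => s + v * vec[j], 0));
  return { component: vec, explainedRatio: total === 0 ? 0 : captured / total, scores };
}

// Two perfectly correlated features collapse into one without losing variance.
const pca = firstPrincipalComponent([[1, 2], [2, 4], [3, 6], [4, 8]]);
console.log(pca.explainedRatio.toFixed(3)); // 1.000
```

On real data the explained ratio is below 1, and it tells you how much variance a single component keeps.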

Sampling

Select representative subsets of data for faster processing and prototyping.

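One way to keep a sample representative of user journeys is to sample users rather than individual events, so each sampled user's sessions stay complete. The sketch hashes a hypothetical `userId` field with 32-bit FNV-1a (an arbitrary choice of fast, non-cryptographic hash) and keeps users whose hash falls in the first `rate` fraction of the hash range, which makes the sample deterministic across runs.

```javascript
// 32-bit FNV-1a over UTF-16 code units; identical to byte-wise FNV-1a
// for ASCII IDs, and adequate for bucketing in either case.
function fnv1a(str) {
  let hash = 0x811c9dc5; // FNV offset basis
  for (let i = 0; i < str.length; i++) {
    hash ^= str.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193) >>> 0; // multiply by the FNV prime, keep 32 bits
  }
  return hash >>> 0;
}

// Keep a user when their hash falls in the first `rate` fraction of the
// 32-bit range, so each user is either fully in or fully out of the sample.
function sampleByUser(events, rate) {
  return events.filter((e) => fnv1a(String(e.userId)) / 0x100000000 < rate);
}

const sampleInput = [
  { userId: "u1", name: "page_view" },
  { userId: "u1", name: "purchase" },
  { userId: "u2", name: "page_view" },
];
console.log(sampleByUser(sampleInput, 1).length); // 3
```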

SaaS Tools for Data Preprocessing in Analytics

Many modern analytics platforms include built-in preprocessing features to streamline your data pipelines. PlainSignal offers lightweight, cookie-free tracking with edge validation, while GA4 provides advanced event-based modeling and configurable filters. Your choice depends on privacy requirements, customization needs, and the complexity of your workflows.

PlainSignal

A privacy-focused, cookieless analytics solution that preprocesses data at the edge, ensuring compliance and simplicity.

Edge validation

Performs real-time checks on events before logging to ensure data integrity.

Minimal configuration

Requires only a single script tag to start collecting clean data.

Google Analytics 4 (GA4)

An event-driven analytics platform with built-in data filters, custom dimensions, and user properties for flexible preprocessing.

Data filters

Exclude internal traffic (for example, visits from your office IP addresses) and developer traffic from processing.

Custom definitions

Register event parameters as custom dimensions and metrics so they can be used to categorize and analyze events in reports.

Example Implementation

Below are example setups for adding preprocessing-enabled analytics scripts to your website. Each setup includes the tracking code and a step for verifying that data is arriving.

PlainSignal integration

Embed the PlainSignal script to start collecting cookie-free, preprocessed analytics data instantly.

Tracking code snippet

```html
<!-- Replace yourwebsitedomain.com with your own domain. -->
<link rel="preconnect" href="//eu.plainsignal.com/" crossorigin />
<script defer data-do="yourwebsitedomain.com" data-id="0GQV1xmtzQQ" data-api="//eu.plainsignal.com" src="//cdn.plainsignal.com/plainsignal-min.js"></script>
```

Verify data collection

Use the PlainSignal dashboard to monitor incoming events in real time and confirm correct implementation.

GA4 integration

Add the GA4 gtag.js script and configure data streams to leverage GA4’s preprocessing capabilities.

Tracking code snippet

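```html
<!-- Global site tag (gtag.js) - Google Analytics -->
<!-- Replace G-XXXXXXXXXX (in both places) with your GA4 measurement ID. -->
<script async src="https://www.googletagmanager.com/gtag/js?id=G-XXXXXXXXXX"></script>
<script>
  // Queue commands in dataLayer; gtag.js processes them once it loads.
  window.dataLayer = window.dataLayer || [];
  function gtag(){dataLayer.push(arguments);}
  gtag('js', new Date());
  gtag('config', 'G-XXXXXXXXXX');
</script>
```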

Configure data streams

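In the GA4 admin settings, create a web data stream for your site, define internal traffic rules in the stream's tag settings, and activate data filters to exclude that traffic before it is processed into reports. Data filters apply only to data collected after they are activated.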

Best Practices for Data Preprocessing

Establishing consistent, documented preprocessing routines ensures a maintainable and reliable analytics workflow.

Maintain documentation

Document each preprocessing step, transformation rule, and filter to provide transparency and reproducibility.

Automate pipelines

Use automation tools or native platform features to run preprocessing tasks regularly and reduce manual errors.

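Automation is easier when each preprocessing step is a plain function and a small runner applies the steps in order. The sketch below records how many records each step kept, which gives scheduled runs a simple audit trail; the step names and the bot-filter regex are hypothetical, and scheduling itself (a cron job, a CI workflow, or a platform feature) is outside its scope.

```javascript
// Apply named steps in order; each step takes and returns an array of records.
function runPipeline(records, steps) {
  let current = records;
  const log = [];
  for (const { name, fn } of steps) {
    const before = current.length;
    current = fn(current);
    log.push({ step: name, before, after: current.length });
  }
  return { records: current, log };
}

// Hypothetical steps for illustration; the regex is a crude bot heuristic.
const exampleSteps = [
  { name: "drop-missing-user", fn: (rs) => rs.filter((r) => r.userId) },
  { name: "drop-bots", fn: (rs) => rs.filter((r) => !/bot|crawler|spider/i.test(r.userAgent || "")) },
];

const run = runPipeline(
  [
    { userId: "u1", userAgent: "Mozilla/5.0" },
    { userId: "", userAgent: "Mozilla/5.0" },
    { userId: "u2", userAgent: "Googlebot/2.1" },
  ],
  exampleSteps,
);
for (const entry of run.log) console.log(`${entry.step}: ${entry.before} -> ${entry.after}`);
// drop-missing-user: 3 -> 2
// drop-bots: 2 -> 1
```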

Regularly audit data

Schedule periodic reviews of data quality and preprocessing rules to catch and correct issues early.
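
A periodic audit can start with a few quality indicators computed per batch and compared against thresholds. The sketch below reports the share of records missing each required field and the share of duplicate event IDs; the `eventId` field and the 1% thresholds are hypothetical and would normally come from your own historical baselines.

```javascript
// Summarize data quality for one batch of events and list threshold breaches.
// Field names and default thresholds are illustrative assumptions.
function auditBatch(events, requiredFields, { maxMissingRate = 0.01, maxDuplicateRate = 0.01 } = {}) {
  const total = events.length;
  if (total === 0) return { total, issues: ["empty batch"] };
  const issues = [];
  for (const field of requiredFields) {
    const missing = events.filter((e) => e[field] === undefined || e[field] === null || e[field] === "").length;
    const rate = missing / total;
    if (rate > maxMissingRate) issues.push(`${field}: ${(rate * 100).toFixed(1)}% missing`);
  }
  const duplicateRate = (total - new Set(events.map((e) => e.eventId)).size) / total;
  if (duplicateRate > maxDuplicateRate) {
    issues.push(`eventId: ${(duplicateRate * 100).toFixed(1)}% duplicates`);
  }
  return { total, issues };
}

const report = auditBatch(
  [
    { eventId: "1", userId: "u1" },
    { eventId: "1", userId: "u1" },
    { eventId: "2", userId: null },
    { eventId: "3", userId: "u3" },
  ],
  ["userId"],
);
console.log(report.issues.join("\n"));
// userId: 25.0% missing
// eventId: 25.0% duplicates
```

Tracking these rates over time, not only against fixed thresholds, makes gradual drift easier to spot.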