Published on 2025-06-26T04:59:50Z

What is Data Integration? Examples and Best Practices in Analytics

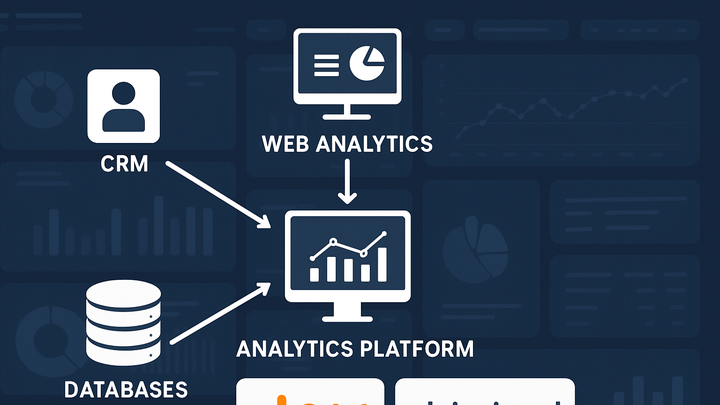

Data integration in analytics is the process of combining data from multiple disparate sources into a unified, consistent view for analysis and reporting. It involves extracting data from web analytics platforms, CRM systems, databases, and other endpoints, transforming it into a common format, and loading it into a centralized repository or dashboard. Effective integration lets organizations break down silos, improve accuracy and completeness, and gain a holistic understanding of user behavior and operational performance. Common techniques include ETL (extract, transform, load), ELT (extract, load, transform), and API-based integration, each with different trade-offs in latency, complexity, and scalability. The data being integrated often comes from SaaS tools such as PlainSignal for cookie-free web analytics or Google Analytics 4 for event-based tracking. Robust data integration pipelines are crucial for real-time insights, accurate attribution modeling, and informed decision-making across marketing, product, and business intelligence teams.

Data integration

Process of combining data from various sources into a unified view for analytics, enabling holistic insights and decision-making.

Definition and Foundations

Explore the basic concept of data integration and its role as the backbone of any analytics strategy.

-

Core definition

Data integration is the process of combining data from multiple sources to provide a unified view for analysis.

-

Analytics context

In analytics, data integration enables consolidated reporting across web, mobile, and offline channels, improving decision-making and attribution accuracy.

Key Methods and Techniques

An overview of the primary approaches to integrating data, highlighting workflows, strengths, and trade-offs.

-

ETL (extract, transform, load)

Traditional method where data is extracted from sources, transformed into a consistent format, and loaded into a data warehouse.

-

Pros

Mature tooling, good for structured data, supports complex transformations.

-

Cons

Often batch-oriented, so data reaches reports with some latency; may require heavy infrastructure.
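The ETL flow described above can be sketched in a few lines of JavaScript. This is a minimal illustration under stated assumptions, not a production pipeline: the two in-memory sources, their field names (`visitor`, `ts`, `signup_date`), the common schema, and the `warehouse` array are all hypothetical stand-ins for real systems.

```javascript
// Hypothetical source data: a web analytics export and a CRM export.
const webEvents = [
  { visitor: "U1", page: "/pricing", ts: "2025-06-01T10:00:00Z" },
  { visitor: "U2", page: "/docs", ts: "2025-06-01T11:30:00Z" },
];
const crmContacts = [
  { id: "u1", email: "ada@example.com", signup_date: "2025-05-20" },
];

// Extract: in a real pipeline these would be API calls or database queries.
function extract() {
  return { webEvents, crmContacts };
}

// Transform: map both sources onto one common schema before loading.
function transform({ webEvents, crmContacts }) {
  const fromWeb = webEvents.map((e) => ({
    userId: e.visitor.toLowerCase(),
    source: "web",
    type: "page_view",
    detail: e.page,
    occurredAt: new Date(e.ts).toISOString(),
  }));
  const fromCrm = crmContacts.map((c) => ({
    userId: c.id.toLowerCase(),
    source: "crm",
    type: "signup",
    detail: c.email,
    occurredAt: new Date(c.signup_date).toISOString(),
  }));
  return [...fromWeb, ...fromCrm];
}

// Load: append the unified rows to the target (an array stands in for a warehouse table).
function load(rows, warehouse) {
  warehouse.push(...rows);
  return warehouse.length;
}

const warehouse = [];
const loaded = load(transform(extract()), warehouse);
console.log(`Loaded ${loaded} unified rows`);
```

Because the transform runs before the load, only rows that already fit the common schema reach the target, which is the defining trait of ETL.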

-

-

ELT (extract, load, transform)

Data is extracted and loaded into the target system first, then transformed in place. This approach suits modern cloud warehouses.

-

Pros

Leverages cloud scalability and speeds up initial loading.

-

Cons

Transformation complexity shifts to the warehouse, with potential performance costs.
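An ELT flow, by contrast, stores records untouched and derives the clean shape later inside the target. The sketch below imitates that with an in-memory `rawTable`; in a real cloud warehouse the transform step would normally be SQL executed by the warehouse itself. The table, the `shop` and `web` source names, and the payload fields are assumptions made for illustration.

```javascript
// An array stands in for a raw staging table in the warehouse (hypothetical).
const rawTable = [];

// Load: store each payload exactly as received, plus minimal load metadata.
function loadRaw(source, payload) {
  rawTable.push({ source, loadedAt: new Date().toISOString(), payload });
}

// Transform (later, inside the target): derive a typed view from the raw rows.
// Rows that cannot be parsed are left out of the view but remain in rawTable.
function buildOrdersView() {
  return rawTable
    .filter((row) => row.source === "shop")
    .map((row) => ({
      orderId: String(row.payload.order_id),
      amount: Number.parseFloat(row.payload.amount),
    }))
    .filter((order) => Number.isFinite(order.amount));
}

loadRaw("shop", { order_id: 1001, amount: "19.90" });
loadRaw("shop", { order_id: 1002, amount: "n/a" }); // bad value is still loaded
loadRaw("web", { path: "/checkout" });

const ordersView = buildOrdersView();
console.log(rawTable.length, ordersView);
```

Since the raw rows are kept, the transform can be changed and re-run without extracting from the source again, at the price of doing that work on the target system.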

-

-

API-based integration

Uses APIs to pull or push data between systems in near real time. This approach is common for SaaS analytics tools.

-

Example

Integrating GA4 via its Measurement Protocol API.

-

Considerations

Requires rate-limit management, proper authentication, and error handling.
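The considerations above can be illustrated with a small sketch. `buildGa4Request` assembles a GA4 Measurement Protocol request (the `mp/collect` endpoint with `measurement_id` and `api_secret` query parameters and a JSON body carrying `client_id` and `events`) without sending it. `sendWithRetry` is a hypothetical helper, not part of any Google library, that retries on HTTP 429 and 5xx responses with exponential backoff. Those status codes are a generic convention assumed here, and the measurement ID, API secret, and client ID are placeholders.

```javascript
// Build a GA4 Measurement Protocol request without sending it.
// measurementId and apiSecret are placeholders for values from the GA4 admin UI.
function buildGa4Request(measurementId, apiSecret, clientId, events) {
  const url = new URL("https://www.google-analytics.com/mp/collect");
  url.searchParams.set("measurement_id", measurementId);
  url.searchParams.set("api_secret", apiSecret);
  return {
    url: url.toString(),
    options: {
      method: "POST",
      headers: { "Content-Type": "application/json" },
      body: JSON.stringify({ client_id: clientId, events }),
    },
  };
}

// Retry with exponential backoff on rate limiting (429) and server errors (5xx).
// `send` is any function returning a promise of { status }, e.g. a wrapper around fetch.
// Other responses, including non-retryable 4xx, are returned to the caller unchanged.
async function sendWithRetry(send, request, { maxAttempts = 4, baseDelayMs = 500, sleep } = {}) {
  const wait = sleep ?? ((ms) => new Promise((resolve) => setTimeout(resolve, ms)));
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    const response = await send(request);
    const retryable = response.status === 429 || response.status >= 500;
    if (!retryable) return response;
    if (attempt === maxAttempts) break;
    await wait(baseDelayMs * 2 ** (attempt - 1)); // 500 ms, 1 s, 2 s, ...
  }
  throw new Error(`Request failed after ${maxAttempts} attempts`);
}

const request = buildGa4Request("G-XXXXXXXXXX", "YOUR_API_SECRET", "555.123", [
  { name: "sign_up", params: { method: "email" } },
]);
console.log(request.url);
```

In a runtime that provides `fetch`, a call such as `sendWithRetry((r) => fetch(r.url, r.options), request)` would perform the actual send. Keeping the sender injectable also makes the retry logic easy to test without network access.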

-

Practical Examples with SaaS Analytics

Demonstrates real-world integration scenarios using PlainSignal and Google Analytics 4.

-

Integrating PlainSignal cookie-free analytics

Add the PlainSignal script tag to your site to send data to PlainSignal servers without cookies.

-

Tracking code example

    <link rel="preconnect" href="//eu.plainsignal.com/" crossorigin />
    <script defer data-do="yourwebsitedomain.com" data-id="0GQV1xmtzQQ" data-api="//eu.plainsignal.com" src="//cdn.plainsignal.com/plainsignal-min.js"></script>

-

Benefits

Simple setup, privacy compliant, lightweight footprint.

-

-

Sending data to GA4

Use GA4's Google tag (gtag.js, formerly called the global site tag) or the Measurement Protocol to send event data to your analytics property.

-

Global site tag example

    <script async src="https://www.googletagmanager.com/gtag/js?id=G-XXXXXXXXXX"></script>
    <script>
      window.dataLayer = window.dataLayer || [];
      function gtag(){dataLayer.push(arguments);}
      gtag('js', new Date());
      gtag('config', 'G-XXXXXXXXXX');
    </script>

-

Advantages

Rich event model, cross-device tracking, native integration with the Google ecosystem.

-

Best Practices and Considerations

Guidelines to ensure your data integration pipelines remain reliable, scalable, and secure.

-

Data quality management

Implement validation, cleansing, and error handling to maintain accurate analytics.
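As a rough sketch of what validation, cleansing, and error handling can look like in practice, the code below splits a batch into accepted and rejected rows. The record shape (`userId`, `type`, `occurredAt`) and the two validation rules are assumptions chosen for illustration; real pipelines would define rules per source.

```javascript
// Validate one record against simple rules; returns a list of problems (empty means valid).
function validate(record) {
  const problems = [];
  if (typeof record.userId !== "string" || record.userId.trim() === "") {
    problems.push("missing userId");
  }
  if (Number.isNaN(Date.parse(record.occurredAt))) {
    problems.push("invalid occurredAt");
  }
  return problems;
}

// Cleanse: normalize values so that equivalent records compare equal.
function cleanse(record) {
  return {
    ...record,
    userId: record.userId.trim().toLowerCase(),
    occurredAt: new Date(record.occurredAt).toISOString(),
  };
}

// Split a batch into accepted rows (cleansed, de-duplicated) and rejected rows with reasons.
function processBatch(records) {
  const accepted = [];
  const rejected = [];
  const seen = new Set();
  for (const record of records) {
    const problems = validate(record);
    if (problems.length > 0) {
      rejected.push({ record, problems });
      continue;
    }
    const clean = cleanse(record);
    const key = `${clean.userId}|${clean.type}|${clean.occurredAt}`;
    if (seen.has(key)) continue; // drop exact duplicates after normalization
    seen.add(key);
    accepted.push(clean);
  }
  return { accepted, rejected };
}

const result = processBatch([
  { userId: " U1 ", type: "page_view", occurredAt: "2025-06-01T10:00:00Z" },
  { userId: "u1", type: "page_view", occurredAt: "2025-06-01T10:00:00.000Z" },
  { userId: "", type: "page_view", occurredAt: "2025-06-01T10:05:00Z" },
  { userId: "u2", type: "signup", occurredAt: "not a date" },
]);
console.log(result.accepted.length, result.rejected.length);
```

Keeping rejected rows together with their reasons, rather than silently dropping them, makes it possible to monitor data quality over time and to repair bad records at the source.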

-

Scalability and performance

Choose methods and tools that scale with data volume; consider real-time vs batch requirements.
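One way to think about the real-time versus batch trade-off is micro-batching: events are buffered and written in groups. The `MicroBatcher` class below is a hypothetical sketch, not taken from any library. With `maxSize` set to 1 it behaves like per-event streaming, while larger values trade latency for fewer writes.

```javascript
// Buffers events and flushes them in groups, trading a little latency for fewer writes.
class MicroBatcher {
  constructor(flush, maxSize = 3) {
    this.flush = flush;     // function that receives an array of events
    this.maxSize = maxSize; // flush as soon as this many events are buffered
    this.buffer = [];
  }

  add(event) {
    this.buffer.push(event);
    if (this.buffer.length >= this.maxSize) this.drain();
  }

  // Send whatever is buffered; call this on a timer or at shutdown as well.
  drain() {
    if (this.buffer.length === 0) return;
    const batch = this.buffer;
    this.buffer = [];
    this.flush(batch);
  }
}

const batches = [];
const batcher = new MicroBatcher((batch) => batches.push(batch), 3);
for (let i = 1; i <= 7; i++) batcher.add({ id: i });
batcher.drain(); // flush the remainder
console.log(batches.map((b) => b.length));
```

A production version would also flush on a time interval so that a slow trickle of events does not wait indefinitely in the buffer.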

-

Security and compliance

Ensure secure data transfer (e.g., TLS) and comply with GDPR, CCPA, and other privacy regulations.
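Two of these points lend themselves to simple technical guards, sketched below: refusing endpoints that are not served over HTTPS, and removing personal fields before a record leaves the system. The endpoint URL and the `PII_FIELDS` list are hypothetical. The sketch illustrates technical controls only and is not a statement of what GDPR or CCPA require.

```javascript
// Refuse to send data to endpoints that are not served over HTTPS (and therefore TLS).
function assertSecureEndpoint(endpoint) {
  const url = new URL(endpoint);
  if (url.protocol !== "https:") {
    throw new Error(`Refusing non-TLS endpoint: ${url.origin}`);
  }
  return url;
}

// Remove fields treated as personal data before the record leaves the system.
// Which fields count as personal data is a legal question; this list is only an example.
const PII_FIELDS = ["email", "phone", "ip"];

function redact(record, fields = PII_FIELDS) {
  const copy = { ...record }; // shallow copy so the original record is not mutated
  for (const field of fields) delete copy[field];
  return copy;
}

const outbound = redact({ userId: "u1", email: "ada@example.com", plan: "pro" });
assertSecureEndpoint("https://warehouse.example.com/ingest");
console.log(outbound);
```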