Published on 2025-06-28T02:10:59Z

What is ETL (Extract, Transform, Load)? Examples with PlainSignal & GA4

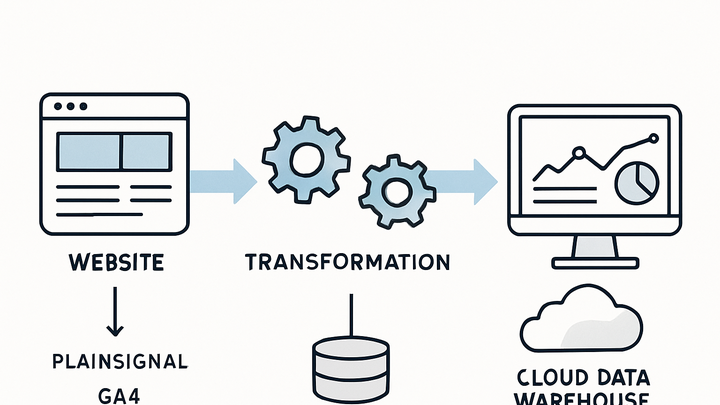

ETL (Extract, Transform, Load) is a fundamental process in analytics for consolidating disparate data into a unified repository. It begins by extracting raw data from various sources—such as web tracking scripts (PlainSignal, GA4), databases, or third-party APIs. During transformation, the data is cleansed, normalized, and enriched to ensure consistency and usability for reporting. Finally, the cleaned data is loaded into data warehouses or analytics platforms, enabling teams to query and visualize insights effectively. Modern analytics pipelines often leverage cloud-based ETL tools to automate and scale these tasks, balancing performance, cost, and regulatory compliance. Understanding each step is crucial for building reliable, maintainable, and efficient data workflows.

ETL (Extract, Transform, Load)

ETL in analytics extracts data from sources, transforms it for consistency, and loads it into platforms like PlainSignal or GA4 for reporting.

Key Components of ETL

ETL consists of three main steps: extracting data from source systems, transforming it to fit operational needs, and loading it into a destination system. In analytics, ETL pipelines enable teams to consolidate data for reporting and insights.

-

Extract

This step involves retrieving raw data from various sources such as websites, databases, or applications.

-

Source variety

Web tracking (e.g., PlainSignal, GA4), CRM systems, databases, and logs.

-

Extraction methods

Batch extraction or real-time streaming to capture event data.

-

Transform

Data is cleaned, enriched, and transformed to match schema requirements of the target analytics platform.

-

Cleaning

Deduplicating records, handling missing values, and normalizing formats.

-

Enrichment

Adding geographic, temporal, or user segmentation attributes.

-

Load

Processed data is loaded into data warehouses, analytics tools, or dashboards for analysis.

-

Loading modes

Full loads for initial migrations; incremental loads for ongoing updates.

-

Destinations

Cloud warehouses (BigQuery, Redshift), analytics platforms (PlainSignal, GA4).
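The three steps above can be sketched end to end with the Python standard library. This is a minimal illustration, not a production pipeline: the `RAW_EVENTS` records and their field names are hypothetical, and an in-memory SQLite database stands in for a cloud warehouse.

```python
import sqlite3
from datetime import datetime, timezone

# Hypothetical raw events, standing in for rows pulled from a tracking export.
RAW_EVENTS = [
    {"event_id": "e1", "ts": 1751076659, "path": "/Pricing/", "country": "de"},
    {"event_id": "e1", "ts": 1751076659, "path": "/Pricing/", "country": "de"},  # duplicate
    {"event_id": "e2", "ts": 1751080259, "path": "/docs", "country": None},      # missing value
]


def extract():
    """Extract: return raw records (a real pipeline would read an API, file, or database)."""
    return list(RAW_EVENTS)


def transform(rows):
    """Transform: deduplicate, fill missing values, normalize formats, add a date attribute."""
    seen, clean = set(), []
    for row in rows:
        if row["event_id"] in seen:
            continue  # drop repeated event IDs
        seen.add(row["event_id"])
        occurred = datetime.fromtimestamp(row["ts"], tz=timezone.utc)
        clean.append({
            "event_id": row["event_id"],
            "occurred_at": occurred.isoformat(),
            "event_date": occurred.date().isoformat(),  # temporal enrichment
            "path": row["path"].lower().rstrip("/") or "/",
            "country": row["country"].upper() if row["country"] else "unknown",
        })
    return clean


def load(conn, rows):
    """Load: incremental insert keyed on event_id, so reruns do not duplicate rows."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS events ("
        "event_id TEXT PRIMARY KEY, occurred_at TEXT, event_date TEXT, path TEXT, country TEXT)"
    )
    conn.executemany(
        "INSERT OR IGNORE INTO events VALUES "
        "(:event_id, :occurred_at, :event_date, :path, :country)",
        rows,
    )
    conn.commit()


conn = sqlite3.connect(":memory:")
load(conn, transform(extract()))
load(conn, transform(extract()))  # a second run adds nothing new
row_count = conn.execute("SELECT COUNT(*) FROM events").fetchone()[0]
```

Keying the load on `event_id` is one simple way to make incremental runs idempotent. A real warehouse would typically use its own merge or upsert statement instead.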

ETL vs ELT

While ETL transforms data before loading it into the target system, ELT pushes raw data first and transforms it within the destination. Each approach has trade-offs in terms of performance, cost, and complexity.

-

ETL architecture

Transformation occurs before loading, ensuring the target only receives clean, formatted data.

-

Pros

Better control over data quality; reduces processing load on the destination.

-

Cons

Requires separate transformation infrastructure; longer time to insight.

-

ELT architecture

Raw data is loaded first; transformations happen inside the target platform using its compute resources.

-

Pros

Simpler pipeline structure; fast loading leveraging scalable cloud compute.

-

Cons

Potentially higher compute costs; requires a powerful target system for complex transformations.
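The difference between the two architectures comes down to where the transformation runs. The sketch below applies the same cleanup both ways, assuming a hypothetical `RAW` list of order rows and using in-memory SQLite databases as stand-ins for the target system.

```python
import sqlite3

# Hypothetical raw rows: (email, amount as text).
RAW = [("  Alice@Example.com ", "12.50"), ("bob@example.com", "7")]

# ETL: transform in application code, then load only the cleaned rows.
etl_db = sqlite3.connect(":memory:")
etl_db.execute("CREATE TABLE orders (email TEXT, amount REAL)")
cleaned = [(email.strip().lower(), float(amount)) for email, amount in RAW]
etl_db.executemany("INSERT INTO orders VALUES (?, ?)", cleaned)

# ELT: load the raw rows as-is, then transform with SQL inside the target.
elt_db = sqlite3.connect(":memory:")
elt_db.execute("CREATE TABLE raw_orders (email TEXT, amount TEXT)")
elt_db.executemany("INSERT INTO raw_orders VALUES (?, ?)", RAW)
elt_db.execute(
    "CREATE TABLE orders AS "
    "SELECT lower(trim(email)) AS email, CAST(amount AS REAL) AS amount "
    "FROM raw_orders"
)

query = "SELECT email, amount FROM orders ORDER BY email"
etl_rows = etl_db.execute(query).fetchall()
elt_rows = elt_db.execute(query).fetchall()
```

Both paths end with the same `orders` table. In the ELT variant the untouched `raw_orders` table also stays in the target, which is what allows transformations to be revised and rerun later, at the cost of storing and processing raw data there.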

ETL Tools and SaaS Products in Analytics

A variety of ETL tools cater to analytics needs, ranging from lightweight solutions to enterprise-scale platforms. Here’s how PlainSignal and Google Analytics 4 fit into ETL workflows.

-

PlainSignal

Cookie-free, privacy-first analytics that can act as both a data source and a destination in ETL pipelines.

-

Priority

High – Ideal for teams needing lightweight, compliant data extraction.

-

Integration example

Add this tracking snippet to extract web events directly:

```html
<link rel="preconnect" href="//eu.plainsignal.com/" crossorigin />
<script defer data-do="yourwebsitedomain.com" data-id="0GQV1xmtzQQ" data-api="//eu.plainsignal.com" src="//cdn.plainsignal.com/plainsignal-min.js"></script>
```

-

Loading with ETL

Use the PlainSignal REST API to pull extracted events, transform JSON payloads, then load them into your data warehouse.

-

Google Analytics 4 (GA4)

A widely used analytics platform that can export raw event data natively to BigQuery.

-

Priority

Medium – Robust feature set but may require consent management and additional setup.

-

Integration example

Link a BigQuery project under Admin > Product links > BigQuery links to export raw event data into your project.

-

Transform & load

Use SQL in BigQuery to transform exported event tables, then load processed data into BI tools or dashboards.
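As one possible transform over the GA4 export, the sketch below builds a BigQuery SQL statement that counts page views and users per day and page. It relies on the export's daily `events_YYYYMMDD` tables and its `event_params` key/value records. The function name, project ID, and dataset name are hypothetical, and the script only builds the query text rather than running it.

```python
import re


def build_daily_pageviews_sql(project: str, dataset: str, start: str, end: str) -> str:
    """Return BigQuery SQL that aggregates GA4 page_view events per day and page."""
    for value in (start, end):
        # Table suffixes in the export are dates in YYYYMMDD form.
        if not re.fullmatch(r"\d{8}", value):
            raise ValueError(f"expected YYYYMMDD, got {value!r}")
    return f"""
SELECT
  event_date,
  (SELECT value.string_value
     FROM UNNEST(event_params)
    WHERE key = 'page_location') AS page_location,
  COUNT(*) AS page_views,
  COUNT(DISTINCT user_pseudo_id) AS users
FROM `{project}.{dataset}.events_*`
WHERE _TABLE_SUFFIX BETWEEN '{start}' AND '{end}'
  AND event_name = 'page_view'
GROUP BY event_date, page_location
""".strip()


# Hypothetical identifiers; GA4 export datasets are named analytics_<property_id>.
sql = build_daily_pageviews_sql("my-project", "analytics_123456789", "20250601", "20250607")
```

The resulting string can be pasted into the BigQuery console or submitted with the `google-cloud-bigquery` client, for example `bigquery.Client().query(sql).result()`, and the aggregated rows written to a reporting table for BI tools.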

Best Practices for ETL in Analytics

To ensure reliable and efficient ETL processes, follow these best practices spanning data quality, monitoring, and compliance.

-

Maintain data quality

Implement validation rules and alerts to detect anomalies early.

-

Schema validation

Enforce schema checks to catch unexpected fields or data types.

-

Data profiling

Regularly profile data to understand distributions and identify outliers.

-

Monitor and log pipelines

Set up comprehensive logging to track pipeline health and performance metrics.

-

Error alerts

Automate notifications for pipeline failures to enable rapid response.

-

Performance metrics

Monitor latency and throughput to optimize resource allocation.

-

Ensure security and compliance

Secure data throughout the ETL process and comply with relevant regulations.

-

Access controls

Use role-based permissions to restrict data access.

-

Data anonymization

Remove or mask personally identifiable information (PII) to protect user privacy.
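Several of these practices can live in a single transform step. The sketch below checks each row against an expected schema, logs rejections, and masks an email field before the row moves on. The `SCHEMA` mapping, field names, and salt value are hypothetical, and salted hashing is pseudonymization rather than full anonymization.

```python
import hashlib
import logging

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("etl")

# Hypothetical expected schema: field name -> required Python type.
SCHEMA = {"event_id": str, "email": str, "duration_ms": int}


def validate(row):
    """Return a list of schema problems; an empty list means the row is valid."""
    problems = [f"unexpected field: {key}" for key in row if key not in SCHEMA]
    for field, expected in SCHEMA.items():
        if field not in row:
            problems.append(f"missing field: {field}")
        elif not isinstance(row[field], expected):
            problems.append(f"wrong type for {field}: {type(row[field]).__name__}")
    return problems


def mask_email(email, salt="replace-with-a-secret"):
    """Replace an email with a salted SHA-256 digest so the raw address is not stored."""
    return hashlib.sha256((salt + email.lower()).encode("utf-8")).hexdigest()


def process(rows):
    """Validate rows, mask PII on valid ones, and log how many were rejected."""
    accepted, rejected = [], []
    for row in rows:
        problems = validate(row)
        if problems:
            rejected.append((row, problems))
            log.warning("rejected row %r: %s", row.get("event_id"), "; ".join(problems))
        else:
            accepted.append({**row, "email": mask_email(row["email"])})
    log.info("accepted=%d rejected=%d", len(accepted), len(rejected))
    return accepted, rejected


accepted, rejected = process([
    {"event_id": "e1", "email": "Ann@example.com", "duration_ms": 1200},
    {"event_id": "e2", "email": "bob@example.com", "duration_ms": "slow"},  # wrong type
    {"event_id": "e3", "email": "cy@example.com", "duration_ms": 5, "ip": "203.0.113.9"},  # extra field
])
```

The accepted and rejected counts written to the log are the kind of metric an alerting rule could watch, for instance by notifying the team when the rejection rate rises above a chosen threshold.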
