Published on 2025-06-22T03:33:21Z

What is Incrementality? Examples in Analytics

Incrementality is the additional impact a marketing activity has on a desired outcome, measured by comparing a test group exposed to the activity with a control group that was not. Unlike traditional attribution models, which allocate credit across touchpoints, incrementality testing isolates the causal effect by estimating what would have happened without the campaign. It helps marketers avoid overestimating performance, optimize budget allocation, and focus on channels that deliver true lift. Common measurement methods include A/B tests, holdout groups, and geo experiments. Cookie-free analytics platforms like PlainSignal collect data in a privacy-safe way that can feed lift calculations, while GA4’s audiences and Explorations (formerly the Analysis hub) can be used to compare test and control groups. Although powerful, incrementality testing demands rigorous experiment design, sufficient sample sizes, and controls for external factors such as seasonality and overlapping campaigns.

Incrementality

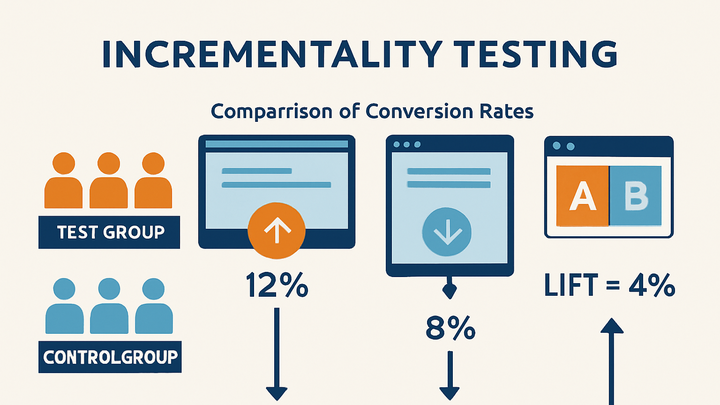

Incrementality measures the additional impact of marketing campaigns by isolating true lift through test vs. control group comparisons.

Understanding Incrementality

This section explains what incrementality is, how it differs from attribution, and why it’s crucial in analytics.

-

Definition

Incrementality quantifies the additional impact a marketing activity has on outcomes by comparing a test group with a control group that wasn’t exposed.

-

Incrementality vs attribution

Attribution assigns credit to multiple touchpoints based on rules or models, while incrementality isolates the causal effect by focusing on lift over a baseline.

-

Why it matters

It prevents overestimation of campaign performance, helps optimize budget allocation, and ensures focus on channels that drive true incremental value.
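
The definition above reduces to a small calculation. The sketch below is illustrative only: `computeLift` and its input shape are hypothetical names, not part of any analytics product, and it assumes each group is summarized as a user count and a conversion count.

```javascript
// Hypothetical helper: compares conversion rates of a test and a control group.
function computeLift(test, control) {
  if (test.users <= 0 || control.users <= 0) {
    throw new Error("Both groups need at least one user");
  }
  const testRate = test.conversions / test.users;
  const controlRate = control.conversions / control.users;
  // Absolute lift: difference in conversion rate attributable to the activity.
  const absoluteLift = testRate - controlRate;
  // Relative lift is undefined when the control group has no conversions.
  const relativeLift = controlRate > 0 ? absoluteLift / controlRate : null;
  return { testRate, controlRate, absoluteLift, relativeLift };
}

// Made-up numbers for illustration.
const liftExample = computeLift(
  { users: 10000, conversions: 550 },
  { users: 10000, conversions: 500 }
);
console.log(liftExample);
```

With these made-up numbers the test group converts at 5.5% against a 5% baseline, so the relative lift is about 10%. An attribution model could credit the campaign with all 550 conversions, while the lift view credits it with roughly 50.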

Measurement Methods

Different experimental and statistical approaches to measure incrementality, each with its strengths and limitations.

-

A/B testing

Randomly assigns a test group to receive the marketing intervention and a control group to not receive it, then compares performance metrics.

-

Sampling

Use samples that are representative of your audience and large enough to detect the expected lift with statistical significance.

-

Randomization

Randomly assign participants to groups to avoid bias.

-

Duration

Run the test long enough to capture typical user behavior patterns.
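
One way to get random yet stable assignment is to hash a user identifier together with an experiment name. This is a minimal sketch under assumptions: `assignGroup`, the identifiers, and the use of an FNV-1a style hash are illustrative choices, not something the tools discussed here prescribe.

```javascript
// FNV-1a style 32-bit hash over UTF-16 code units (not byte-compatible with
// reference FNV-1a for non-ASCII input, which is fine for bucketing).
function fnv1a(str) {
  let hash = 0x811c9dc5;
  for (let i = 0; i < str.length; i++) {
    hash ^= str.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193);
  }
  return hash >>> 0; // force unsigned 32-bit
}

// Hypothetical helper: the same user always lands in the same group for a
// given experiment, and different experiments bucket independently.
function assignGroup(userId, experimentId, testShare = 0.5) {
  const bucket = fnv1a(`${experimentId}:${userId}`) / 0x100000000; // in [0, 1)
  return bucket < testShare ? "test" : "control";
}

console.log(assignGroup("user-42", "spring_promo"));
```

Hashing instead of calling `Math.random()` on each visit keeps a returning user in one group for the whole test, which matters when the test runs long enough to capture repeat behavior.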


-

Geo experiments

Uses geographic regions as test and control groups, ideal for offline channels where individual randomization isn’t feasible.

-

Region selection

Choose demographically similar regions to ensure comparability.

-

Spillover effects

Minimize cross-region contamination to maintain validity.
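
A simple way to read a geo experiment is to scale the test region's pre-campaign baseline by the change seen in the control region, then compare that with what actually happened. The source does not prescribe an analysis method, so treat this as one possible sketch: `estimateGeoLift` is a hypothetical name, and the approach assumes both regions would have moved in parallel without the campaign.

```javascript
// Hypothetical helper: each region is summarized by total conversions (or
// sales) before and during the campaign period.
function estimateGeoLift(testRegion, controlRegion) {
  if (testRegion.before <= 0 || controlRegion.before <= 0) {
    throw new Error("Pre-period totals must be positive");
  }
  // How much the market moved on its own, as seen in the control region.
  const controlGrowth = controlRegion.during / controlRegion.before;
  // Counterfactual: what the test region would likely have done without the campaign.
  const expected = testRegion.before * controlGrowth;
  const incremental = testRegion.during - expected;
  return { controlGrowth, expected, incremental, relativeLift: incremental / expected };
}

// Made-up numbers for illustration.
console.log(
  estimateGeoLift({ before: 1000, during: 1300 }, { before: 900, during: 990 })
);
```

In this made-up case the control region grew by about 10%, so roughly 200 of the test region's 300 extra conversions look incremental. Spillover between regions would inflate the control growth and understate that figure.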


-

Holdout groups

Withholds the marketing activity from a randomly selected group while exposing the rest, providing a baseline for lift calculation.

-

Group size

Make the holdout group large enough to detect lift, but small enough to limit the reach you give up.

-

Isolation

Ensure the holdout group isn’t influenced by other marketing channels.
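
Because a holdout group is usually much smaller than the exposed group, raw conversion counts cannot be compared directly. The sketch below scales the holdout's conversion rate up to the exposed group's size. `incrementalConversions` and the field names are hypothetical.

```javascript
// Hypothetical helper: estimates how many conversions in the exposed group
// would not have happened without the campaign.
function incrementalConversions(exposed, holdout) {
  if (exposed.users <= 0 || holdout.users <= 0) {
    throw new Error("Both groups need at least one user");
  }
  const holdoutRate = holdout.conversions / holdout.users;
  // Baseline: conversions the exposed group would have produced anyway.
  const baseline = holdoutRate * exposed.users;
  const incremental = exposed.conversions - baseline;
  return { holdoutRate, baseline, incremental };
}

// Made-up numbers: a 10% holdout.
console.log(
  incrementalConversions(
    { users: 90000, conversions: 4950 },
    { users: 10000, conversions: 500 }
  )
);
```

With a 10% holdout converting at 5%, the exposed group's baseline is about 4,500 conversions, which leaves roughly 450 incremental ones.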


Implementing Incrementality with SaaS Tools

Practical guidance on setting up and analyzing incrementality tests using platforms like PlainSignal and Google Analytics 4.

-

PlainSignal (cookie-free analytics)

PlainSignal offers privacy-safe, cookie-free analytics and supports A/B tests and lift measurement by comparing group performance on your website.

-

Setup

Embed the PlainSignal tracking snippet on your site to start collecting data. Use custom dimensions to flag test and control users.

-

Analysis

Use PlainSignal’s dashboards to visualize key metrics and calculate lift between groups.


-

Google Analytics 4 (GA4)

GA4 supports custom audiences and custom dimensions for separating test and control users, and it links to Google Ads for lift studies.

-

Experiment configuration

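GA4 has no native Experiments feature (Google Optimize was retired in 2023), so define variants in an A/B testing tool or in your own code and send each user’s variant to GA4 as an event parameter or user property.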

-

Comparisons

Use Explorations (formerly the Analysis hub) to build custom reports that compare test and control performance.
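
To compare groups in GA4 reports, each user's group has to reach GA4 first. A minimal sketch using gtag's `set` command with `user_properties` follows. The property names `experiment_id` and `experiment_group` are hypothetical, and they would need to be registered as user-scoped custom dimensions in GA4 before they appear in reports.

```javascript
// Stand-in for the standard gtag bootstrap so the sketch runs outside a
// browser; on a real page the GA4 snippet already defines both.
const dataLayer = [];
function gtag() { dataLayer.push(arguments); }

// Hypothetical helper: flags the current user as test or control.
function tagExperimentGroup(experimentId, group) {
  gtag("set", "user_properties", {
    experiment_id: experimentId,   // hypothetical property name
    experiment_group: group,       // hypothetical property name
  });
}

tagExperimentGroup("spring_promo_holdout", "control");
console.log(dataLayer.length);
```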


Example Tracking Code

Sample implementation of PlainSignal and GA4 tracking code for setting up incrementality measurement.

-

PlainSignal snippet

Add the following code to your site’s head element to integrate PlainSignal for cookie-free data collection.

-

Code example

<link rel="preconnect" href="//eu.plainsignal.com/" crossorigin />
<script defer
  data-do="yourwebsitedomain.com"
  data-id="0GQV1xmtzQQ"
  data-api="//eu.plainsignal.com"
  src="//cdn.plainsignal.com/plainsignal-min.js"></script>


-

GA4 snippet

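Include the GA4 gtag.js snippet on every page. The example sets send_page_view to false, which disables automatic page views so that you can send them yourself along with experiment details.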

-

Code example

<!-- Global site tag (gtag.js) - Google Analytics -->
<script async src="https://www.googletagmanager.com/gtag/js?id=G-XXXXXXX"></script>
<script>
  window.dataLayer = window.dataLayer || [];
  function gtag(){dataLayer.push(arguments);}
  gtag('js', new Date());
  gtag('config', 'G-XXXXXXX', { 'send_page_view': false });
</script>
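
Because the snippet above sets `send_page_view` to false, GA4 records no page views until one is sent explicitly. The sketch below sends a `page_view` event by hand and attaches the user's group. `sendPageView` and the `experiment_group` parameter are hypothetical, while `page_title` and `page_location` are standard GA4 page view parameters.

```javascript
// Stand-in for the gtag bootstrap so the sketch runs outside a browser;
// on a real page the GA4 snippet already defines both.
const dataLayer = [];
function gtag() { dataLayer.push(arguments); }

// Hypothetical helper: sends one page view tagged with the experiment group.
function sendPageView(page, group) {
  gtag("event", "page_view", {
    page_title: page.title,
    page_location: page.url,
    experiment_group: group, // hypothetical custom parameter
  });
}

sendPageView({ title: "Pricing", url: "https://example.com/pricing" }, "test");
console.log(dataLayer.length);
```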


Challenges and Best Practices

Common pitfalls when measuring incrementality and recommendations to ensure accurate and reliable results.

-

Data quality

Incomplete or inaccurate data can bias lift calculations; ensure consistent data collection.

-

Tagging consistency

Verify that all pages and channels are tagged uniformly.

-

Data validation

Regularly audit collected data for anomalies.
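
One audit that suits incrementality tests is a sample ratio check: if the observed group sizes drift far from the planned split, tagging or assignment is probably broken. This sketch uses a chi-square goodness-of-fit statistic with one degree of freedom. `sampleRatioCheck` is a hypothetical name and the 0.05 significance level is an assumption.

```javascript
// Hypothetical helper: flags a mismatch between observed and planned group sizes.
function sampleRatioCheck(testUsers, controlUsers, expectedTestShare = 0.5) {
  const total = testUsers + controlUsers;
  if (total <= 0 || expectedTestShare <= 0 || expectedTestShare >= 1) {
    throw new Error("Need users in the test and a share strictly between 0 and 1");
  }
  const expectedTest = total * expectedTestShare;
  const expectedControl = total - expectedTest;
  const chiSquare =
    (testUsers - expectedTest) ** 2 / expectedTest +
    (controlUsers - expectedControl) ** 2 / expectedControl;
  // 3.841 is the chi-square critical value for 1 degree of freedom at alpha = 0.05.
  return { chiSquare, mismatch: chiSquare > 3.841 };
}

console.log(sampleRatioCheck(5300, 4700)); // made-up counts for a planned 50/50 split
```

A flagged mismatch does not say which group is wrong, only that the lift figures should not be trusted until the cause is found.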


-

Statistical significance

Insufficient sample sizes or short test durations can lead to inconclusive results.

-

Power analysis

Calculate required sample size before running experiments.

-

Confidence intervals

Report confidence intervals to indicate result reliability.
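
Both steps can be sketched with the normal approximation for two proportions. The function names are hypothetical, and the defaults assume a two-sided 5% significance level (z = 1.96) and 80% power (z = 0.8416).

```javascript
// Hypothetical helper: users needed per group to detect a change from
// baselineRate to expectedRate.
function sampleSizePerGroup(baselineRate, expectedRate, zAlpha = 1.96, zBeta = 0.8416) {
  const delta = expectedRate - baselineRate;
  if (delta === 0) throw new Error("Rates must differ");
  const variance =
    baselineRate * (1 - baselineRate) + expectedRate * (1 - expectedRate);
  return Math.ceil(((zAlpha + zBeta) ** 2 * variance) / delta ** 2);
}

// Hypothetical helper: Wald confidence interval for the absolute lift
// (difference in conversion rates between test and control).
function liftConfidenceInterval(test, control, z = 1.96) {
  const p1 = test.conversions / test.users;
  const p2 = control.conversions / control.users;
  const standardError = Math.sqrt(
    (p1 * (1 - p1)) / test.users + (p2 * (1 - p2)) / control.users
  );
  const diff = p1 - p2;
  return { diff, lower: diff - z * standardError, upper: diff + z * standardError };
}

// Made-up numbers: 5% baseline, hoping to detect 5.5%.
console.log(sampleSizePerGroup(0.05, 0.055));
console.log(
  liftConfidenceInterval(
    { users: 10000, conversions: 550 },
    { users: 10000, conversions: 500 }
  )
);
```

With these made-up numbers, detecting a move from 5% to 5.5% needs on the order of 31,000 users per group, and the interval for two groups of 10,000 spans zero. The observed lift in that smaller test is therefore not distinguishable from noise.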


-

External factors

Seasonality, promotions, and market trends can confound incrementality tests.

-

Control seasonality

Time tests to avoid holidays or atypical periods.

-

Monitor campaign overlaps

Isolate tests from other marketing activities.
