Published on 2025-06-22T04:49:40Z

What is Recall? Examples for Recall in Analytics

Recall in analytics is the proportion of actual positive instances that a predictive model correctly identifies. The term originates from information retrieval, and the metric is also known as sensitivity or true positive rate. A high recall means the model captures most of the relevant cases, which makes it crucial in scenarios where missing a positive is costly. Recall does not account for false positives, however, so it is usually balanced against precision. In web analytics, you can use tools like GA4 and PlainSignal to collect event data, export it for machine learning, and measure recall to evaluate models such as churn prediction or fraud detection. Understanding recall helps analysts tune models so that the mix of missed positives and false alarms matches business priorities.

Recall

Recall measures the proportion of actual positives correctly identified by a model, essential for evaluating classification performance.

Why Recall Matters

Recall, also known as sensitivity or true positive rate, measures a model’s ability to identify all relevant cases in a dataset. It is crucial when missing a positive instance carries significant consequences, such as in fraud detection or medical diagnosis. High recall means most positive cases are captured, but it says nothing about false positives, which is why it’s often balanced with precision. In marketing analytics, for example, a targeting model with high recall reaches most of the intended audience for a campaign. In churn prediction, a model with high recall flags most of the customers who are actually at risk, so fewer of them are missed by retention efforts.
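To illustrate why recall alone can mislead, here is a minimal sketch that assumes scikit-learn is installed. The labels are invented for illustration, and the "model" simply flags every case as positive:

```python
from sklearn.metrics import precision_score, recall_score

# Hypothetical labels: 1 = positive (e.g. churned), 0 = negative.
y_true = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]

# A degenerate "model" that flags every case as positive.
y_pred_all_positive = [1] * len(y_true)

recall = recall_score(y_true, y_pred_all_positive)
precision = precision_score(y_true, y_pred_all_positive)

print(f"Recall: {recall:.2f}")        # both actual positives are caught
print(f"Precision: {precision:.2f}")  # but only 2 of the 10 flags are correct
```

Recall is perfect here even though most of the flags are wrong, which is why the two metrics are usually read together.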

-

Use cases

Recall is vital in domains where false negatives are costly:

-

Churn prediction

Identifying and flagging customers likely to leave to enable proactive retention strategies.

-

Fraud detection

Catching as many fraudulent transactions as possible to minimize financial losses.

-

Spam filtering

Catching as many spam emails as possible so that unwanted messages are not delivered to the user’s inbox.

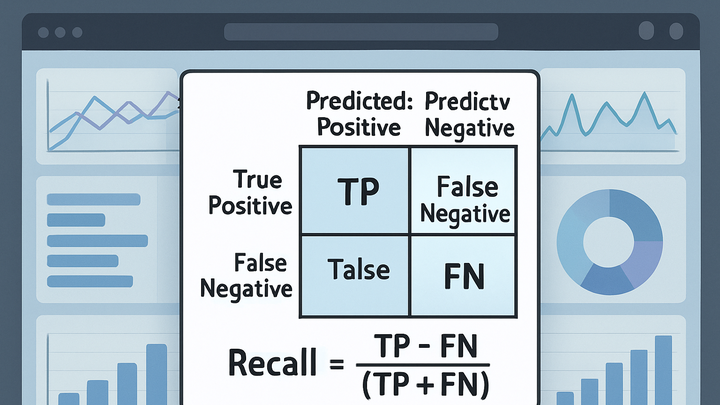

Calculating Recall

Recall is derived from the confusion matrix and expresses the ratio of true positive predictions to all actual positives. It’s a key metric in classification tasks, and its complementary counterpart is precision. Optimizing recall often involves trade-offs with precision, so understanding this metric helps analysts make informed decisions.

-

True positives (TP)

Instances where the model correctly predicts the positive class.

-

False negatives (FN)

Instances where the model incorrectly predicts the negative class for actual positives.

-

Recall formula

Recall = TP / (TP + FN)

For example, if there are 100 actual positives and the model detects 80 of them (TP = 80, FN = 20), Recall = 80 / (80 + 20) = 0.8 (80%).
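The same arithmetic can be sketched in plain Python. The helper name `recall_from_labels` and the label lists are hypothetical, built only to mirror the 80-out-of-100 example:

```python
def recall_from_labels(y_true, y_pred, positive=1):
    """Return (tp, fn, recall) for the given positive label."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    if tp + fn == 0:
        raise ValueError("recall is undefined when there are no actual positives")
    return tp, fn, tp / (tp + fn)


# Hypothetical data: 100 actual positives (80 detected, 20 missed)
# followed by 50 actual negatives (5 of them wrongly flagged).
y_true = [1] * 100 + [0] * 50
y_pred = [1] * 80 + [0] * 20 + [0] * 45 + [1] * 5

tp, fn, recall = recall_from_labels(y_true, y_pred)
print(f"TP={tp}, FN={fn}, Recall={recall:.2f}")
```

The five false positives among the negatives do not change the result, since only TP and FN enter the formula.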

Example with GA4 and PlainSignal

You can leverage GA4 and PlainSignal to collect user event data, train a classification model, and evaluate recall for tasks like churn prediction. Here’s a high-level workflow:

-

Exporting data from GA4

Link your GA4 property to BigQuery to export raw event data. Use SQL to transform events into features such as session counts, user engagement metrics, and custom dimensions.

-

Tracking with PlainSignal

Insert PlainSignal’s simple, cookie-free analytics script to collect event data:

<link rel="preconnect" href="//eu.plainsignal.com/" crossorigin />
<script defer data-do="yourwebsitedomain.com" data-id="0GQV1xmtzQQ" data-api="//eu.plainsignal.com" src="//cdn.plainsignal.com/plainsignal-min.js"></script>

-

Model training & evaluation

Use a library like scikit-learn to train a classification model on your labeled data and compute recall:

-

Python code snippet

from sklearn.metrics import recall_score

# y_true: actual labels, y_pred: predicted labels (placeholder values for illustration)
y_true = [1, 0, 1, 1, 0]
y_pred = [1, 0, 0, 1, 0]

recall = recall_score(y_true, y_pred)
print(f"Recall: {recall:.2f}")
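The workflow above can be sketched end to end as follows, assuming pandas, NumPy, and scikit-learn are available. Everything here is synthetic. The `events` table stands in for exported event rows, the `churned` label and the two features are hypothetical, and the link between event volume and churn is an assumption made only so the demo has a signal to learn:

```python
import numpy as np
import pandas as pd
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import recall_score
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(0)

# Synthetic users with a hypothetical churn label (1 = churned).
n_users = 200
users = pd.DataFrame({
    "user_id": np.arange(n_users),
    "churned": rng.integers(0, 2, size=n_users),
})
# Demo-only assumption: churned users generate fewer events.
n_events = rng.poisson(np.where(users["churned"] == 1, 3, 12))

# One row per event, standing in for exported raw event data.
events = pd.DataFrame({"user_id": np.repeat(users["user_id"].to_numpy(), n_events)})
events["event_name"] = rng.choice(["page_view", "purchase"], size=len(events), p=[0.9, 0.1])
events["is_purchase"] = events["event_name"] == "purchase"

# Aggregate events into per-user features; users with no events get zeros.
features = (
    events.groupby("user_id")
    .agg(event_count=("event_name", "count"), purchases=("is_purchase", "sum"))
    .reindex(users["user_id"], fill_value=0)
)

X = features.to_numpy()
y = users["churned"].to_numpy()
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=0
)

model = LogisticRegression(max_iter=1000).fit(X_train, y_train)
recall = recall_score(y_test, model.predict(X_test))
print(f"Recall on held-out users: {recall:.2f}")
```

In a real pipeline the feature step would typically run as SQL over the exported tables, with the labels coming from your own definition of churn. Recall is measured on held-out users so that it reflects performance on customers the model has not seen.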

Improving Recall

Enhancing recall may reduce precision, so choose strategies aligned with your business’s tolerance for false positives. Common approaches include:

-

Threshold tuning

Lower the classification threshold to capture more positives, knowing this may increase false positives.

-

Data augmentation

Increase the number of positive examples through oversampling or synthetic generation to help the model learn rare cases.

-

Cost-sensitive learning

Incorporate higher penalties for false negatives by adjusting class weights or using custom loss functions.
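Two of these approaches, threshold tuning and cost-sensitive learning, can be sketched with scikit-learn as below. The data is synthetic (from `make_classification`), and the thresholds and the `class_weight="balanced"` setting are illustrative choices, not recommendations:

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import precision_score, recall_score
from sklearn.model_selection import train_test_split

# Synthetic, imbalanced data (about 10% positives); purely illustrative.
X, y = make_classification(
    n_samples=2000, n_features=10, weights=[0.9, 0.1], random_state=0
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, stratify=y, random_state=0
)

# Threshold tuning: reuse one fitted model, vary the cut-off on its scores.
model = LogisticRegression(max_iter=1000).fit(X_train, y_train)
proba = model.predict_proba(X_test)[:, 1]

recall_by_threshold = {}
for threshold in (0.5, 0.3, 0.1):
    y_pred = (proba >= threshold).astype(int)
    recall_by_threshold[threshold] = recall_score(y_test, y_pred)
    precision = precision_score(y_test, y_pred, zero_division=0)
    print(
        f"threshold={threshold}: "
        f"recall={recall_by_threshold[threshold]:.2f}, precision={precision:.2f}"
    )

# Cost-sensitive learning: reweight classes so missed positives cost more.
weighted = LogisticRegression(max_iter=1000, class_weight="balanced")
weighted.fit(X_train, y_train)
weighted_recall = recall_score(y_test, weighted.predict(X_test))
print(f"class_weight='balanced': recall={weighted_recall:.2f}")
```

Lowering the threshold can only add predicted positives, so recall never decreases as the threshold drops. Precision is printed alongside it to show the cost in false positives.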