Published on 2025-06-28T06:24:24Z

What is Schema-on-write? Examples in Analytics

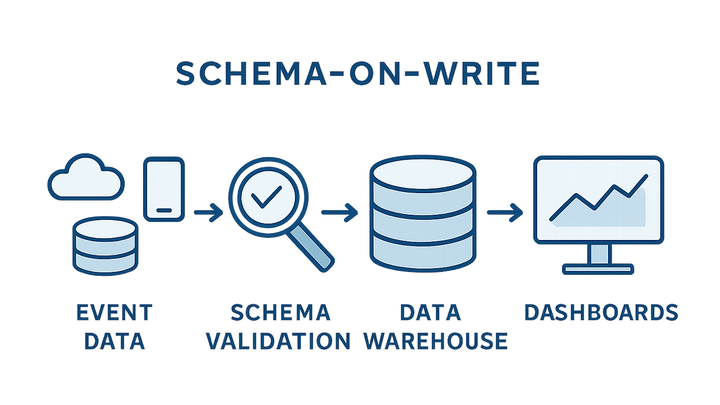

In analytics, schema-on-write is a data ingestion strategy where the schema is defined and enforced at the time of writing data to a storage system. This means that all incoming events or records must conform to a predefined structure, including field names, data types, and constraints, before they are accepted. By validating and structuring data upfront, organizations ensure high data quality, reliable reporting, and fast query performance in their analytics systems. However, this rigidity can slow down the onboarding of new data sources or schema changes, requiring careful planning and version control. Schema-on-write is commonly used in data warehouses, ETL pipelines, and modern analytics platforms such as Google Analytics 4 and PlainSignal. It contrasts with schema-on-read, where structure is applied later during data retrieval. Understanding when to use schema-on-write helps analytics teams balance performance, consistency, and flexibility.

Schema-on-write

Schema-on-write enforces a predefined data structure at ingestion, ensuring quality and performance in analytics platforms like GA4 and PlainSignal.

Understanding Schema-on-write

Schema-on-write is a paradigm where the data structure (schema) is defined before data enters the system. This upfront modeling ensures that every record matches the expected format, preventing inconsistent or malformed data from being stored. It is a foundational concept for building reliable analytics pipelines that depend on clean, well-organized datasets.

-

Definition

Schema-on-write means data structures are defined and enforced at ingestion time, so only records that match the schema are accepted.

-

Key characteristics

This approach enforces consistency and reliability by validating each record against a predefined model before storage.

-

Predefined schema

The data model is defined in advance, specifying field names, data types, and constraints.

-

Validation at ingestion

Incoming data is checked against the schema, and invalid records are rejected or flagged for review.

-

-

Typical workflow

In an analytics pipeline, data flows through an ETL process where it’s validated, transformed, and then loaded into a structured store.

-

Etl processing

Extract, transform, and load operations adapt raw events to match the defined schema.

-

Data warehouse storage

Validated data is stored in tables or partitions aligned with the schema for efficient querying.

-

Why Schema-on-write Matters

Implementing schema-on-write brings several advantages for analytics teams, from ensuring data quality to speeding up query performance. By enforcing structure early, organizations can build reliable dashboards and reports without worrying about downstream data issues.

-

Data consistency

An enforced schema guarantees that all ingested data follows the same format, reducing anomalies and errors.

-

Consistent types

Fields adhere to specified types like strings, numbers, or timestamps.

-

Error detection

Invalid or malformed records are flagged or rejected before storage.

-

-

Improved query performance

Predefined schemas allow databases to optimize storage layouts and indexing, resulting in faster queries.

-

Indexing

Known column types enable efficient index creation for frequent queries.

-

Partition pruning

Structured tables can be partitioned by keys like date, speeding up range scans.

-

-

Simplified reporting

Report designers can rely on stable table structures, making dashboard creation more straightforward.

Schema-on-write vs Schema-on-read

Choosing between schema-on-write and schema-on-read depends on your priorities: performance and consistency versus flexibility and agility. Understanding both models helps you pick the right approach for your analytics needs.

-

Schema-on-write

Data is structured at load time, enforcing consistency and enabling optimized querying, but requires upfront modeling.

-

Schema-on-read

Data is stored raw, and structure is applied at query time, offering flexibility but possibly slower performance.

-

Choosing the right approach

Consider data volume, query performance requirements, and agility needs when selecting between the two.

-

Use cases for schema-on-write

Large-scale reporting where data consistency and speed are critical.

-

Use cases for schema-on-read

Exploratory analysis, data science, and scenarios where schemas evolve rapidly.

-

Implementing Schema-on-write with SaaS Analytics

Modern analytics platforms like Google Analytics 4 and PlainSignal provide schema-on-write capabilities to help you enforce data models and maintain high-quality datasets.

-

Google analytics 4 (GA4)

GA4 enforces schemas for events and parameters, especially when exporting to BigQuery for downstream analysis.

-

Event parameters schema

Custom event parameters must be defined in advance, ensuring they match expected types and formats.

-

Bigquery export schema

GA4’s export to BigQuery creates structured tables with columns based on your event definitions.

-

-

PlainSignal

PlainSignal applies a lightweight schema-on-write model to capture web metrics without cookies, ensuring privacy and simplicity.

-

Cookie-free data collection

Collect pageviews and events with minimal configuration, complying with privacy regulations.

-

Example tracking code

<link rel="preconnect" href="//eu.plainsignal.com/" crossorigin /> <script defer data-do="yourwebsitedomain.com" data-id="0GQV1xmtzQQ" data-api="//eu.plainsignal.com" src="//cdn.plainsignal.com/plainsignal-min.js"></script>

-

Best Practices and Considerations

To maximize the benefits of schema-on-write, follow these best practices and plan for schema evolution as your analytics needs grow.

-

Define and version your schemas

Maintain versioned schema definitions to track changes and support backward compatibility.

-

Monitor for schema drift

Regularly audit incoming data to detect deviations that could break your analytics pipelines.

-

Plan for schema evolutions

Design your data model to accommodate future changes without disrupting existing reports or dashboards.

-

Backward compatibility

Add new fields as optional before renaming or removing existing ones.

-

Migration strategies

Use versioned tables or views to roll out schema changes without downtime.

-