Published on 2025-06-27T21:36:11Z

What is Data Ingestion? Examples and Best Practices in Analytics

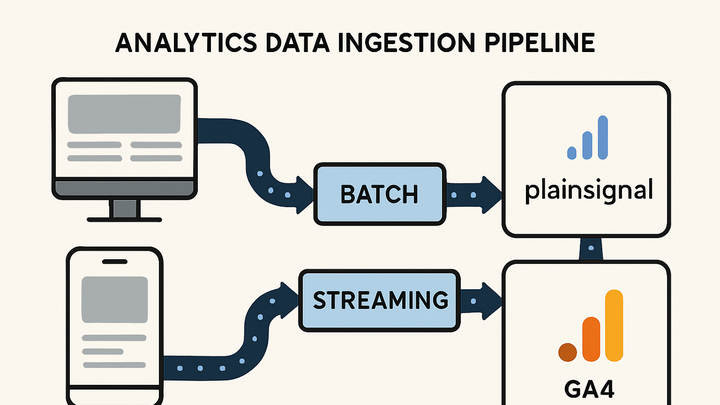

Data ingestion is the process of collecting, importing, and processing raw data from multiple sources into a centralized system for analysis. In analytics, effective data ingestion ensures timely, accurate, and comprehensive data for reporting and decision-making. Depending on business requirements, data ingestion can occur in batches, in near real-time, or as continuous streams. Modern analytics platforms and SaaS tools like PlainSignal and Google Analytics 4 (GA4) simplify ingestion pipelines by providing out-of-the-box scripts and SDKs. Understanding how to configure, scale, and monitor data ingestion workflows is essential for generating reliable insights and improving data-driven strategies.

Data ingestion

Data ingestion collects raw data from various sources and loads it into analytics systems for processing and analysis.

Definition of Data Ingestion

This section introduces what data ingestion means in the context of analytics and why it forms the foundation of reporting and decision-making workflows.

- Core concept: Data ingestion is the process of gathering raw data from multiple sources (such as websites, mobile apps, databases, and IoT devices) and loading it into a centralized system for analysis.
- Role in analytics: Effective ingestion ensures that analytics teams have timely, consistent, and accessible data, enabling accurate dashboards, reports, and data-driven insights.
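The core concept can be illustrated as a tiny collect, normalize, and load pipeline: per-source adapters map each raw record onto one common event shape, and a loader appends the results to a central store. This is a minimal sketch under stated assumptions; the raw record shapes, the `normalizeWeb`/`normalizeDb` adapters, and the in-memory `warehouse` array are all hypothetical stand-ins for real sources and a real analytics store.

```javascript
// Hypothetical raw inputs from two different sources.
const webHits = [
  { url: "/pricing", ts: "2025-06-01T10:00:00Z", visitor: "v1" },
];
const dbRows = [
  { order_id: 42, created_at: "2025-06-01T10:05:00Z", customer_id: "c7", total: 99.5 },
];

// Hypothetical adapters: each maps one source's raw shape onto a common event schema.
function normalizeWeb(hit) {
  return {
    source: "web",
    type: "pageview",
    timestamp: new Date(hit.ts),
    userId: hit.visitor,
    props: { path: hit.url },
  };
}

function normalizeDb(row) {
  return {
    source: "orders_db",
    type: "purchase",
    timestamp: new Date(row.created_at),
    userId: row.customer_id,
    props: { orderId: row.order_id, total: row.total },
  };
}

// Stand-in for the centralized store (a warehouse table in practice).
const warehouse = [];

// Load step: normalize every record from one source and append it to the store.
function ingest(records, normalize) {
  for (const record of records) {
    warehouse.push(normalize(record));
  }
}

ingest(webHits, normalizeWeb);
ingest(dbRows, normalizeDb);

// Events from both sources can now be analyzed together, ordered by time.
warehouse.sort((a, b) => a.timestamp - b.timestamp);
console.log(warehouse.map((e) => `${e.source}:${e.type}`).join(", ")); // web:pageview, orders_db:purchase
```

The point of the common schema is that downstream reports only need to understand one event shape, no matter how many sources feed the store.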

Types of Data Ingestion

Different ingestion methods suit varying business needs, driven by factors like data volume, speed requirements, and processing complexity.

- Batch ingestion: Collects and transfers large volumes of data at scheduled intervals.
  - Scheduled transfers: Data is processed at set times (e.g., nightly), reducing system load during peak hours.
  - Bulk processing: Entire datasets are moved in large chunks, which makes this approach well suited to historical data updates.
- Real-time ingestion: Captures and processes data moments after it is generated, minimizing latency.
  - Event-driven: Data flows in response to events, such as user clicks or transactions.
  - Low latency: The pipeline is optimized so that fresh data is available almost immediately.
- Streaming ingestion: Maintains a continuous feed of data, allowing ongoing processing and analysis.
  - Continuous data flow: Data moves in small, incremental chunks to support live dashboards.
  - Streaming platforms: Common tools include Apache Kafka, Amazon Kinesis, and Azure Event Hubs.
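In practice, the line between batch and streaming ingestion is often drawn with micro-batching: events are buffered and flushed either when the buffer fills or when a time interval elapses. The sketch below assumes a hypothetical `MicroBatcher` class and a caller-supplied `sink` function; the clock is passed in explicitly so the behavior is deterministic (a real pipeline would call `tick()` from a timer).

```javascript
// Hypothetical micro-batcher: flushes when the buffer reaches maxSize
// or when at least maxWaitMs has passed since the last flush.
class MicroBatcher {
  constructor({ maxSize, maxWaitMs, sink, now = 0 }) {
    this.maxSize = maxSize;
    this.maxWaitMs = maxWaitMs;
    this.sink = sink; // receives one array of events per flush
    this.buffer = [];
    this.lastFlush = now;
  }

  add(event, now) {
    this.buffer.push(event);
    if (this.buffer.length >= this.maxSize) {
      this.flush(now); // size trigger
    } else {
      this.tick(now); // check the time trigger as well
    }
  }

  // Call periodically so events still flush during quiet periods.
  tick(now) {
    if (this.buffer.length > 0 && now - this.lastFlush >= this.maxWaitMs) {
      this.flush(now);
    }
  }

  flush(now) {
    if (this.buffer.length > 0) {
      this.sink(this.buffer);
      this.buffer = []; // start a fresh array so the sink's batch is not mutated
    }
    this.lastFlush = now;
  }
}

const batches = [];
const batcher = new MicroBatcher({
  maxSize: 3,
  maxWaitMs: 1000,
  sink: (events) => batches.push(events),
});

batcher.add("e1", 100);
batcher.add("e2", 200);
batcher.add("e3", 300); // size limit reached: flushes [e1, e2, e3]
batcher.add("e4", 400);
batcher.tick(1500); // 1200 ms since the last flush: flushes [e4]

console.log(JSON.stringify(batches)); // [["e1","e2","e3"],["e4"]]
```

Shrinking `maxSize` and `maxWaitMs` moves this design toward real-time behavior; growing them moves it toward scheduled batch loads, trading latency for fewer, larger writes.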

Common Data Sources

Analytics platforms ingest data from varied origins; each source may require different ingestion configurations.

- Web analytics: Tracks user interactions on websites via JavaScript snippets or pixel tags.
  - JavaScript snippets: Small scripts embedded in pages capture pageviews, clicks, and form submissions.
  - Server logs: Web server logs record every request, which makes them useful for backfills or advanced analysis.
- Mobile applications: SDKs integrated into apps capture in-app events and user behavior.
- Databases and warehouses: Tables are replicated from SQL and NoSQL databases using connectors or ETL tools.
- APIs: Data is pulled from third-party services such as social media platforms, CRMs, or payment gateways.
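Server logs are a common backfill source. As a sketch, assuming the logs use the Common Log Format (`host ident authuser [date] "request" status bytes`), each line can be parsed into a request event, with unparseable lines counted rather than silently dropped. The `parseLogLine` function and the output event shape are hypothetical; real pipelines usually also need to handle extended formats (referrer, user agent), escaped quotes, and timestamp parsing.

```javascript
// Common Log Format: host ident authuser [date] "method path protocol" status bytes
const CLF_PATTERN = /^(\S+) (\S+) (\S+) \[([^\]]+)\] "(\S+) (\S+) (\S+)" (\d{3}) (\d+|-)$/;

// Hypothetical parser: returns an event object, or null if the line does not match.
function parseLogLine(line) {
  const m = CLF_PATTERN.exec(line);
  if (!m) return null;
  const [, host, , user, time, method, path, protocol, status, bytes] = m;
  return {
    type: "request",
    host,
    user: user === "-" ? null : user, // "-" means no authenticated user
    time, // raw CLF timestamp, e.g. "10/Oct/2000:13:55:36 -0700"
    method,
    path,
    protocol,
    status: Number(status),
    bytes: bytes === "-" ? 0 : Number(bytes), // "-" means no response body
  };
}

const lines = [
  '127.0.0.1 - frank [10/Oct/2000:13:55:36 -0700] "GET /apache_pb.gif HTTP/1.0" 200 2326',
  "this line is malformed",
];

// Keep parsed events; count rejected lines so monitoring can track them.
const events = lines.map(parseLogLine).filter((e) => e !== null);
const rejected = lines.length - events.length;

console.log(events[0].path, events[0].status, rejected); // /apache_pb.gif 200 1
```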

SaaS Tools and Integration Examples

Popular analytics platforms provide built-in ingestion pipelines to simplify setup. Below are examples using PlainSignal and GA4.

PlainSignal integration

PlainSignal offers a cookie-free approach to web analytics. Install it with a simple JavaScript tag (replace `yourwebsitedomain.com` with your own domain):

```html
<link rel="preconnect" href="//eu.plainsignal.com/" crossorigin />
<script defer data-do="yourwebsitedomain.com" data-id="0GQV1xmtzQQ" data-api="//eu.plainsignal.com" src="//cdn.plainsignal.com/plainsignal-min.js"></script>
```

Google Analytics 4 (GA4) integration

GA4 uses the gtag.js library to ingest events:

```html
<!-- GA4 tracking snippet: replace G-XXXXXXXXXX with your measurement ID -->
<script async src="https://www.googletagmanager.com/gtag/js?id=G-XXXXXXXXXX"></script>
<script>
  // Queue commands in dataLayer until gtag.js loads and processes them
  window.dataLayer = window.dataLayer || [];
  function gtag(){dataLayer.push(arguments);}
  gtag('js', new Date());
  gtag('config', 'G-XXXXXXXXXX');
</script>
```
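Custom events go through the same `gtag()` function. In the GA4 snippet, `gtag()` only pushes its `arguments` onto `dataLayer`; gtag.js later reads that queue and sends the commands to GA4. The sketch below recreates that stub so it runs outside a browser; in a real page you would simply call `gtag('event', ...)` after the snippet. `sign_up` with a `method` parameter is one of GA4's recommended events, and `"email"` is an illustrative value.

```javascript
// Recreate the snippet's queue stub so this runs under Node.
// In a browser, the GA4 snippet defines window.dataLayer and gtag() for you.
const dataLayer = [];
function gtag() {
  dataLayer.push(arguments); // gtag.js expects arguments objects, not arrays
}

gtag("js", new Date());
gtag("config", "G-XXXXXXXXXX");

// Custom event: GA4's recommended "sign_up" event with a "method" parameter.
gtag("event", "sign_up", { method: "email" });

// Each queued command has the shape [command, ...params].
const last = dataLayer[dataLayer.length - 1];
console.log(last[0], last[1], last[2].method); // event sign_up email
```

Because commands are only queued, events sent before gtag.js finishes loading are not lost; they are processed once the library is ready.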

Best Practices for Data Ingestion

Adhering to best practices ensures reliability, performance, and data quality across ingestion workflows.

- Validate data at ingestion: Implement schema checks and data type validation to catch issues early.
- Design for scalability: Choose architectures that can handle growing data volumes, using techniques such as partitioning or autoscaling pipelines.
- Implement monitoring and alerts: Use logging, metrics, and alerting to detect failures, latency spikes, or data backlogs.
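Validation and monitoring fit together naturally: check required fields and types before an event is accepted, and count rejections so an alert can fire when the reject rate climbs. This is a minimal hand-rolled sketch; the `EVENT_SCHEMA` fields, the `validateEvent` and `ingest` helpers, and the 5% alert threshold are hypothetical, and production pipelines typically rely on a schema library or schema registry instead.

```javascript
// Hypothetical schema: field name -> expected `typeof` result.
const EVENT_SCHEMA = {
  name: "string",
  timestamp: "number",
  userId: "string",
};

// Returns a list of problems; an empty list means the event is valid.
function validateEvent(event) {
  const errors = [];
  for (const [field, type] of Object.entries(EVENT_SCHEMA)) {
    if (!(field in event)) {
      errors.push(`missing field: ${field}`);
    } else if (typeof event[field] !== type) {
      errors.push(`${field} should be ${type}, got ${typeof event[field]}`);
    }
  }
  return errors;
}

// Simple counters that a monitoring system could scrape or alert on.
const metrics = { accepted: 0, rejected: 0 };
const accepted = [];

function ingest(event) {
  const errors = validateEvent(event);
  if (errors.length > 0) {
    metrics.rejected += 1;
    console.warn("rejected event:", errors.join("; "));
    return false;
  }
  metrics.accepted += 1;
  accepted.push(event);
  return true;
}

ingest({ name: "signup", timestamp: 1719523200000, userId: "u1" });
ingest({ name: "signup", timestamp: "yesterday", userId: "u2" });
ingest({ name: "purchase", userId: "u3" });

// Hypothetical alert rule: warn if more than 5% of events are rejected.
const total = metrics.accepted + metrics.rejected;
const rejectRate = total === 0 ? 0 : metrics.rejected / total;
if (rejectRate > 0.05) {
  console.warn(`ALERT: reject rate ${(rejectRate * 100).toFixed(1)}%`);
}

console.log(metrics); // { accepted: 1, rejected: 2 }
```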

Common Challenges and Solutions

Awareness of typical ingestion pitfalls and how to address them can save time and resources.

- Data silos: Isolated datasets hinder analysis. Solution: centralize data in a unified repository.
- Latency and timeliness: Slow ingestion delays real-time insights. Solution: adopt streaming or micro-batch frameworks.
- Data overload: Excessive data volume can overwhelm systems. Solution: implement filtering, sampling, or retention policies.
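For data overload, filtering and sampling can run before events are stored. One common approach, shown here as a sketch, is deterministic sampling: hash a stable key such as a user ID and keep that user only if the hash falls below the sampling rate, so each user's events are either all kept or all dropped. The FNV-1a hash, the `BOT_PATTERN` filter, and the `inSample`/`shouldKeep` helpers are illustrative choices, not any specific product's behavior.

```javascript
// 32-bit FNV-1a hash over UTF-16 code units (matches byte-wise FNV-1a for ASCII keys).
function fnv1a(str) {
  let hash = 0x811c9dc5; // FNV offset basis
  for (let i = 0; i < str.length; i++) {
    hash ^= str.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193); // FNV prime, 32-bit multiply
  }
  return hash >>> 0; // convert to an unsigned 32-bit integer
}

// Keep roughly `rate` (0..1) of users; the same user always gets the same decision.
function inSample(userId, rate) {
  return fnv1a(userId) / 0x100000000 < rate;
}

// Hypothetical filter: drop obvious bot traffic before sampling.
const BOT_PATTERN = /bot|crawler|spider/i;

function shouldKeep(event, rate) {
  if (BOT_PATTERN.test(event.userAgent)) return false;
  return inSample(event.userId, rate);
}

const incoming = [
  { userId: "u1", userAgent: "Mozilla/5.0" },
  { userId: "u1", userAgent: "Mozilla/5.0" },
  { userId: "u2", userAgent: "Googlebot/2.1" },
];

// With rate 1.0 every user is sampled, so only the bot event is removed.
const kept = incoming.filter((e) => shouldKeep(e, 1.0));
console.log(kept.length); // 2
```

Sampling by user rather than by individual event keeps sessions and funnels intact for the users who are kept, which random per-event sampling would break.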